The Utility Era: Why We Stopped Escaping Reality and Started Optimizing It

Hello, internet. Fumi here.

If you’ve been following my recent deep dives into the Extended Reality (XR) landscape, you know we’ve been on a bit of a journey. We started by looking at the "touch" problem—how the lack of haptic feedback creates a ghostly, weightless experience (see: *When The Digital World Touches Back*). Then, we navigated the messy, human chaos of identity and security in the Metaverse (see: *The Metaverse Paradox*).

I ended that last piece with a question about what we’re actually *doing* here. Are we just building a fancy escape hatch from reality?

Well, I spent the last week buried in a stack of 100 research papers, and I have an answer. And honestly? It’s more interesting than "Ready Player One."

The data from this week’s research report suggests a massive pivot. The academic and technical focus is shifting away from "how do we make this look cool?" to "how do we make this useful?" We are entering the **Utility Era** of XR. The papers aren't talking about virtual concerts or digital real estate anymore. They’re talking about neurosurgery, adaptive education systems, and exposure therapy.

We aren't escaping reality. We're trying to patch it.

So, grab a coffee (or your caffeinated beverage of choice). We need to talk about what happens when the Metaverse goes to med school.

---

Part I: The Brain Upgrade (Or, The End of "One Size Fits All")

Let’s ground this in something we all remember: the classroom.

We all know the standard model of education. One teacher, thirty students, one pace. If you’re fast, you’re bored. If you’re slow, you’re left behind. It’s a batch-processing system for human brains, and frankly, it’s a relic.

In my last post, I touched on how disjointed digital identity can be. But this week’s research offers a counter-narrative: using digital systems to understand us *better* than a human teacher ever could.

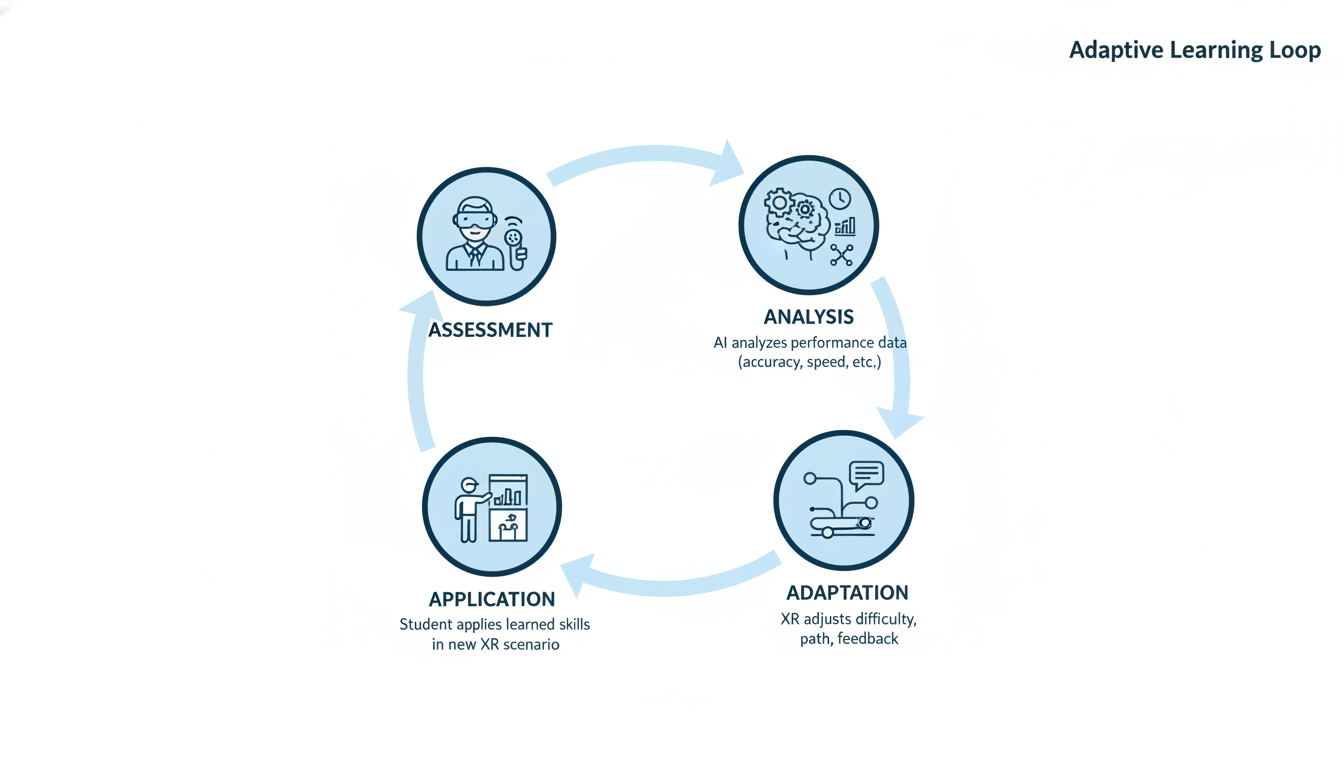

The Adaptive Learning Loop

One of the standout papers from this week comes from Osadchyi et al. (2020), proposing a conceptual model that combines AR/VR with **adaptive learning systems**.

Here is the core problem: VR in education has traditionally been a "broadcast" medium. You put on the headset, you watch the volcano erupt or the cell divide, and you take the headset off. It’s cool, sure. It’s engaging. But it’s dumb. The system doesn't know if you understood it; it just knows it played the animation.

Osadchyi’s research points toward a fundamental shift. They aren't just suggesting we throw iPads at students; they are proposing a loop where the XR environment *adapts* to the student's performance in real time.

**Imagine this scenario:**

You're a medical student studying the human heart in VR.

- **Scenario A (Old VR):** You look at the heart. You click a button to remove the atrium. You read a text box. The experience is linear.

- **Scenario B (Adaptive XR):** The system tracks your gaze. It notices you spent 40% less time looking at the mitral valve than the aortic valve. It infers you’re confident on the aortic valve but shaky on the mitral. So, without you asking, it highlights the mitral valve, adds a transparency layer to show the blood flow underneath, and pops up a quiz question specifically about that area.

This is the difference between consuming content and interacting with an intelligent agent. The research suggests that by combining immersive tech with adaptive algorithms, we create a feedback loop that personalizes education at scale.
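
To make that loop a little more concrete, here is a minimal sketch of the Scenario B logic. To be clear, this is my own illustration and not code from Osadchyi et al.: the `GazeSample` type, the action names, and the 0.75 neglect threshold are all assumptions I made up for the example.

```python
from dataclasses import dataclass


@dataclass
class GazeSample:
    region: str      # anatomical region under the learner's gaze
    seconds: float   # dwell time on that region


def dwell_by_region(samples):
    """Sum dwell time per region."""
    totals = {}
    for s in samples:
        totals[s.region] = totals.get(s.region, 0.0) + s.seconds
    return totals


def region_to_reinforce(samples, neglect_ratio=0.75):
    """Return the least-viewed region if its dwell time falls below
    neglect_ratio times the most-viewed region's dwell time, else None.
    The 0.75 threshold is an arbitrary illustrative value."""
    totals = dwell_by_region(samples)
    if len(totals) < 2:
        return None
    least = min(totals, key=totals.get)
    most = max(totals, key=totals.get)
    if totals[least] < neglect_ratio * totals[most]:
        return least
    return None


def next_actions(samples):
    """Translate the gaze signal into scene changes (hypothetical action names)."""
    region = region_to_reinforce(samples)
    if region is None:
        return []
    return [
        ("highlight", region),
        ("show_overlay", "blood_flow"),
        ("ask_quiz", region),
    ]


# 10 s on the aortic valve vs. 6 s on the mitral valve (40% less)
session = [
    GazeSample("aortic_valve", 6.0),
    GazeSample("mitral_valve", 3.5),
    GazeSample("aortic_valve", 4.0),
    GazeSample("mitral_valve", 2.5),
]
print(next_actions(session))
```

A real system would presumably combine gaze with quiz results and interaction history rather than trusting dwell time alone, but the shape is the same: measure, infer, adapt.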

The "Educational Metaverse"

This leads us to a concept discussed by Dongxing Yu (2024): the **Educational Metaverse**.

I know, "Metaverse" is a buzzword that has been beaten into submission by marketing departments. But in this context, Yu is exploring the design of effective learning environments. The research indicates that simply replicating a physical classroom in 3D (skeuomorphism) is a waste of polygons.

The "Educational Metaverse" isn't about sitting at a virtual desk. It’s about creating environments that are physically impossible in reality. It’s about walking *inside* a chemical reaction. It’s about manipulating time.

> **Fumi's Take:** This ties back to the "Metaverse Paradox" I wrote about. We worry about identity fragmentation, but in an educational context, a digital environment that adapts to *your* specific learning style might be the most "seen" you ever feel in a classroom.

However, Guo et al. (2021) remind us in their review of XR development that we are still in the "inspiration" phase. We have the concepts, and we have the early tech, but widespread implementation is still hitting the friction of cost and hardware limitations. We know *how* to build it, but we haven't figured out how to pay for it yet.

---

Part II: High Stakes & Hard Skills

If education is about software updates for the brain, this next section is about hardware maintenance for the body.

One of the most compelling clusters of research this week focused on **healthcare**, specifically surgery. And this is where my previous rant about haptics (see: *When The Digital World Touches Back*) becomes a matter of life and death.

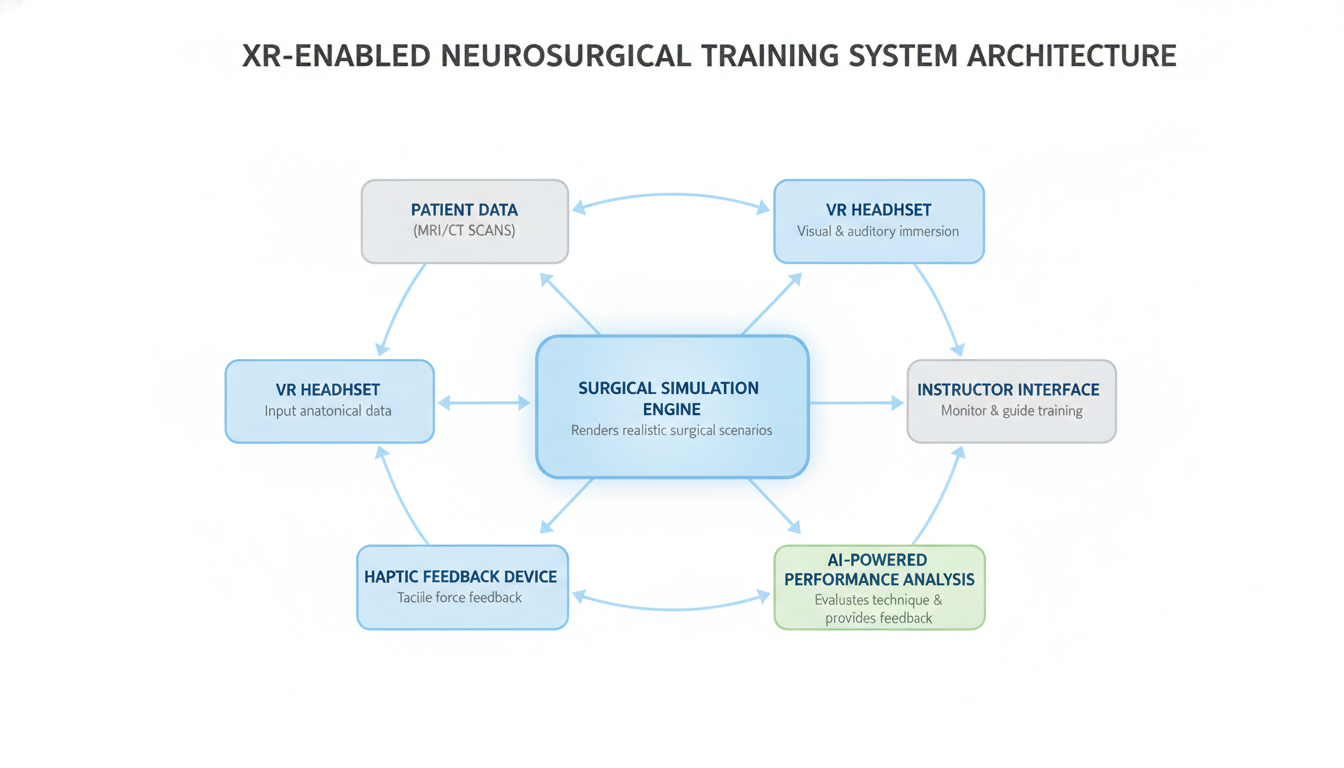

The Neurosurgical Frontier

You do not want a neurosurgeon practicing on you. You want them practicing on a simulation that is so close to reality that their hands know the difference between a nerve ending and a blood vessel before they even cut.

A systematic review by Iop et al. (2022) focused specifically on **Extended Reality in Neurosurgical Education**. This is the deep end of the pool. Neurosurgery is arguably one of the most technically demanding fields in medicine. The margin for error is measured in millimeters and microns.

The review highlights that XR is moving from a "nice to have" visualization aid to a critical training ground.

**Let’s break down why this matters:**

- **Spatial Understanding:** MRI scans are 2D slices of a 3D object. Surgeons have to mentally reconstruct the 3D brain from these slices. XR allows them to step inside the patient's specific anatomy *before* the surgery. They can plan the approach vector to avoid critical structures.

- **Repetition without Consequence:** In the real world, you can't hit "undo" on a lobotomy. In XR, a resident can perform the same complex procedure fifty times in a row, failing forty-nine times, until the muscle memory is perfect.
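
For a feel of what "planning the approach vector" means computationally, here is a toy sketch. It assumes the scan has already been reconstructed into 3D coordinates in millimeters, that the approach is a straight line from an entry point to a target, and that critical structures are sampled as points. Those are my simplifying assumptions, and real planning software is far more sophisticated than this.

```python
import math


def point_to_segment_distance(p, a, b):
    """Shortest distance from point p to the segment a-b in 3D."""
    ab = [b[i] - a[i] for i in range(3)]
    ap = [p[i] - a[i] for i in range(3)]
    ab_len_sq = sum(c * c for c in ab)
    if ab_len_sq == 0:
        # Entry and target coincide, so the "path" is a single point
        return math.dist(p, a)
    # Project p onto the line, then clamp to stay on the segment
    t = sum(ap[i] * ab[i] for i in range(3)) / ab_len_sq
    t = max(0.0, min(1.0, t))
    closest = [a[i] + t * ab[i] for i in range(3)]
    return math.dist(p, closest)


def min_clearance(entry, target, critical_points):
    """Smallest distance between the planned path and any critical point."""
    return min(point_to_segment_distance(p, entry, target) for p in critical_points)


# Hypothetical coordinates in millimeters
entry = (0.0, 0.0, 0.0)
target = (0.0, 0.0, 50.0)
vessel_samples = [(3.0, 4.0, 25.0), (10.0, 0.0, 10.0)]

print(min_clearance(entry, target, vessel_samples))  # 5.0
```

The appeal of doing this in XR rather than on a flat screen is that the surgeon can see that clearance in space and drag the entry point around until it looks right.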

The Haptic Bottleneck (Again)

However, we have to talk about the limitation. As I discussed in my Dec 31st post, visual fidelity is outpacing tactile fidelity.

While Iop et al. show the immense promise of XR in neurosurgery, the lack of perfect force feedback remains the elephant in the operating room. A surgeon relies on the *feel* of tissue resistance. If the VR simulation looks like a brain but feels like air (or a plastic controller), we risk training surgeons who have great visual recognition but poor tactile sensitivity.

Tang et al. (2021) provide a broader systematic review of immersive tech in medicine, noting that while evaluation methods are improving, we are still figuring out the standardized metrics. How do we *quantify* if a VR-trained surgeon is better? Is it speed? Accuracy? Patient outcome? The research is still catching up to the technology.

---

Part III: Healing the Mind (The Paradox of Virtual Therapy)

We’ve covered fixing the brain with knowledge and fixing the body with surgery. Now, let’s talk about fixing the mind itself.

Pons et al. (2022) published a fascinating look at **Extended Reality for mental health**, outlining current trends and challenges. This is where I find the technology most paradoxically beautiful.

Think about it: We are using a fake world to cure real trauma.

Controlled Exposure

The most robust application here is exposure therapy. Let’s say you have a debilitating fear of flying.

- **Traditional Therapy:** You talk about planes. Maybe you look at a photo. Eventually, you have to actually buy a ticket and get on a plane, which is expensive, time-consuming, and uncontrollable (you can't ask the pilot to stop the turbulence).

- **XR Therapy:** You put on a headset. You sit in a virtual plane. The therapist can control the weather, the noise, the crowd. If you panic, the world pauses.
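
The control the therapist gains here can be sketched as a tiny scene model. This is purely illustrative and not taken from Pons et al.: the 0 to 10 intensity and distress scales, the field names, and the pause threshold of 8 are assumptions I picked for the example.

```python
from dataclasses import dataclass


def clamp(value, low, high):
    """Keep a setting inside its allowed range."""
    return max(low, min(high, value))


@dataclass
class FlightScene:
    turbulence: int = 0    # 0 (calm) to 10 (severe), set by the therapist
    cabin_noise: int = 0   # 0 (silent) to 10 (loud)
    passengers: int = 0    # number of virtual passengers in the cabin
    paused: bool = False

    def set_intensity(self, turbulence, cabin_noise, passengers):
        """Therapist dials the stressors up or down."""
        self.turbulence = clamp(turbulence, 0, 10)
        self.cabin_noise = clamp(cabin_noise, 0, 10)
        self.passengers = max(0, passengers)

    def report_distress(self, score, panic_threshold=8):
        """Pause the world if the reported distress (0 to 10) is too high."""
        if score >= panic_threshold:
            self.paused = True
        return self.paused

    def resume(self):
        """Therapist decides when to continue."""
        self.paused = False


scene = FlightScene()
scene.set_intensity(turbulence=3, cabin_noise=4, passengers=20)
print(scene.report_distress(5))   # False: keep going
print(scene.report_distress(9))   # True: the world pauses
```

Notice what is missing compared with a real flight: nothing in this loop is outside the therapist's control.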

Pons et al. identify this as a major trend, but they also flag the challenges. We need rigorous clinical validation. It’s one thing to say "this app helps with anxiety"; it’s another to prove it in a randomized controlled trial that stands up to peer review.

Furthermore, Patra & Mishra (2026) are looking ahead to the **Healthcare Metaverse**, focusing on Human-Computer Interaction (HCI). This is critical. If the user interface of a mental health app is frustrating or glitchy, it’s not just annoying—it’s counter-therapeutic. Imagine trying to meditate in VR, but the UI keeps flickering or the tracking lags. You’d leave more stressed than you arrived.

> **Speculating a bit here:** I think we’re going to see a massive collision between the "gaming" side of XR and the "clinical" side. Game designers understand engagement and flow; clinicians understand pathology. The most successful mental health XR tools will be built by teams that have both.

---

Part IV: The Invisible Backbone (Why 6G Matters)

Alright, let’s get nerdy.

None of the above—not the adaptive classrooms, not the remote surgeries, not the therapeutic flights—works without infrastructure.

If you’ve ever tried to use a wireless VR headset in a room with bad Wi-Fi, you know the pain. The stutter. The lag. The nausea. Now, apply that lag to a remote surgery.

**Latency isn't just a nuisance; it's a safety hazard.**

This week’s research included a heavy focus on **6G**. I know, most of us are just getting used to 5G, but the research world is already living in 2030.

The Pipe Must Be Wider

Imoize et al. (2021) and Hong et al. (2022) lay out the roadmap for **6G Enabled Smart Infrastructure**.

Why do we need 6G for XR? It comes down to two things:

- **Throughput:** We are moving from 4K video (flat) to volumetric video (3D holograms). The amount of data required to render a photorealistic classroom or operating theater in real time is astronomical.

- **Latency:** For the "touch" sensation to feel real (haptics), the round-trip delay between your hand moving, the signal reaching the server, and the response coming back needs to be on the order of 1 millisecond. Current networks struggle to hit that consistently.
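
Some back-of-the-envelope arithmetic helps here. The sketch below is my own, not from the 6G papers. It assumes uncompressed 24-bit color at 60 frames per second for the flat 4K baseline, and it uses the rule of thumb that light in optical fiber covers roughly 200 km per millisecond. The function names are just labels I chose.

```python
def raw_video_gbps(width, height, bits_per_pixel, fps):
    """Uncompressed video bitrate in gigabits per second."""
    return width * height * bits_per_pixel * fps / 1e9


# Rule of thumb: light in optical fiber travels about 200 km per millisecond
FIBER_KM_PER_MS = 200.0


def max_server_distance_km(round_trip_budget_ms, processing_ms=0.0):
    """Farthest a server can be if the whole round trip must fit the budget.
    Ignores routing, queuing, and radio overhead, so this is an upper bound."""
    travel_ms = max(0.0, round_trip_budget_ms - processing_ms)
    return travel_ms / 2 * FIBER_KM_PER_MS


print(round(raw_video_gbps(3840, 2160, 24, 60), 2))   # 11.94
print(max_server_distance_km(1.0))                    # 100.0
print(max_server_distance_km(1.0, processing_ms=0.5)) # 50.0
```

So flat 4K alone is about 12 Gbps before compression, which gives a reference point for why volumetric content is such a bandwidth problem. And a 1 ms round trip means the server cannot be more than about 100 km away even if it does no work at all, which is why this conversation is as much about where the compute sits as about the radio link.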

Hong et al. outline the R&D vision for 6G, and it’s clear that XR is one of the primary drivers of this technology. We aren't building 6G just so you can download movies faster; we’re building it so the Metaverse doesn't make you throw up.

Hardware: The Optics Problem

On the device side, Lee et al. (2023) are exploring ultra-thin, lightweight **diffractive optics**.

This is the other half of the infrastructure equation. No one wants to wear a brick on their face for an hour-long surgery simulation or a math class. The goal is form factors that look like glasses, not scuba gear. Diffractive optics allow us to manipulate light in incredibly thin layers, removing the need for bulky glass lenses.

---

Part V: The Creative Spark (Generative AI Meets XR)

Finally, we have to talk about the ghost in the machine: **Artificial Intelligence**.

Rhodes & Huang (2024) discuss the convergence of AR/VR with **GPT text and Image Creations**. This is the glue that holds the "Utility Era" together.

Remember the adaptive learning example I gave earlier? The one where the system generates a quiz about the mitral valve? That requires AI.

If humans have to manually build every single variation of a lesson, or every possible scenario for a surgery, we will never finish. It’s too much work. But if we use Generative AI to populate these worlds, suddenly the scale becomes infinite.

- **The AI Architect:** AI builds the 3D assets and environments based on text prompts.

- **The AI Actor:** AI drives the non-player characters (NPCs) in role-playing training scenarios (e.g., a virtual patient exhibiting symptoms of stroke).

- **The AI Tutor:** AI analyzes user performance and adjusts the difficulty.
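
The AI Tutor role is the easiest of the three to sketch. Here is a deliberately simple version: a rolling-accuracy rule that nudges difficulty up or down. It is not the method from Rhodes & Huang, and a generative model would be doing far more than this. The class name, the window of five answers, and the 80% and 40% thresholds are all assumptions for illustration.

```python
from collections import deque


class DifficultyTuner:
    """Raise or lower difficulty based on accuracy over recent answers."""

    def __init__(self, level=3, min_level=1, max_level=5, window=5):
        self.level = level
        self.min_level = min_level
        self.max_level = max_level
        self.recent = deque(maxlen=window)  # most recent answers only

    def record(self, correct):
        """Log one answer (True or False) and return the current level."""
        self.recent.append(bool(correct))
        if len(self.recent) == self.recent.maxlen:
            accuracy = sum(self.recent) / len(self.recent)
            if accuracy >= 0.8 and self.level < self.max_level:
                self.level += 1
                self.recent.clear()  # start a fresh window at the new level
            elif accuracy <= 0.4 and self.level > self.min_level:
                self.level -= 1
                self.recent.clear()
        return self.level


tuner = DifficultyTuner()
for answer in [True, True, True, True, True]:
    tuner.record(answer)
print(tuner.level)  # 4
```

Clearing the window after each change is a design choice: it stops one good streak from pushing the learner up several levels before they have answered anything at the new difficulty.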

Rhodes & Huang highlight that this convergence creates a world of "highly dynamic and interactive digital content." The static world is dead. The new world builds itself as you walk through it.

---

Conclusion: The Myth of Total VR

I want to close with a thought on a paper titled *"The Myth of Total VR: The Metaverse"* (2021).

For years, science fiction promised us "Total VR"—a Matrix-like existence where we plug in and leave the physical world behind. But looking at this week’s research, that’s not what’s happening.

We are seeing a merger, not a replacement.

- Industrial workers are using XR to reduce ergonomic hazards in construction (Rahman et al., 2023)—enhancing their *physical* safety, not escaping their physical jobs.

- Surgeons are using XR to prepare for *physical* surgeries.

- Students are using XR to understand concepts they will apply in the *physical* world.

The trend isn't "Virtual Reality" in the escapist sense. It’s **Extended Reality** in the literal sense. We are extending our capabilities. We are using silicon to make our carbon-based lives slightly more efficient, safer, and easier to understand.

We’ve moved past the "Wow" phase. We are deep in the "Work" phase. And honestly? As someone who loves a well-optimized system, I think this is where the real fun begins.

Until next time, keep your latency low and your skepticism high.

— Fumi

Source Research Report

This article is based on Fumi's research into Last Week's Research: Extended Reality. You can read the full research report for more details, citations, and sources.
