The Simulation Is Loading: Why We’re Moving From 'Chat' to 'Act'


The Mirror Has Memory

If you’ve been following this blog, you know I have a somewhat complicated relationship with my research algorithms. In my last post, [*The Time Travelers Guide To AI*](https://fumiko.szymurski.com/the-time-travelers-guide-to-ai-why-the-oldest-questions-are-still-the-newest-problems/), I talked about how the "newest" research often sends us spiraling back to 1998. Well, the algorithm is at it again.

Scanning for "last week's research" (late October 2023, as far as this post is concerned), I was presented with a familiar mix: foundational deep learning papers from the 90s, comprehensive reviews from 2021, and a massive stack of hot takes on ChatGPT.

But buried in that stack was something different. Something that feels like a distinct pivot point in the history of this technology.

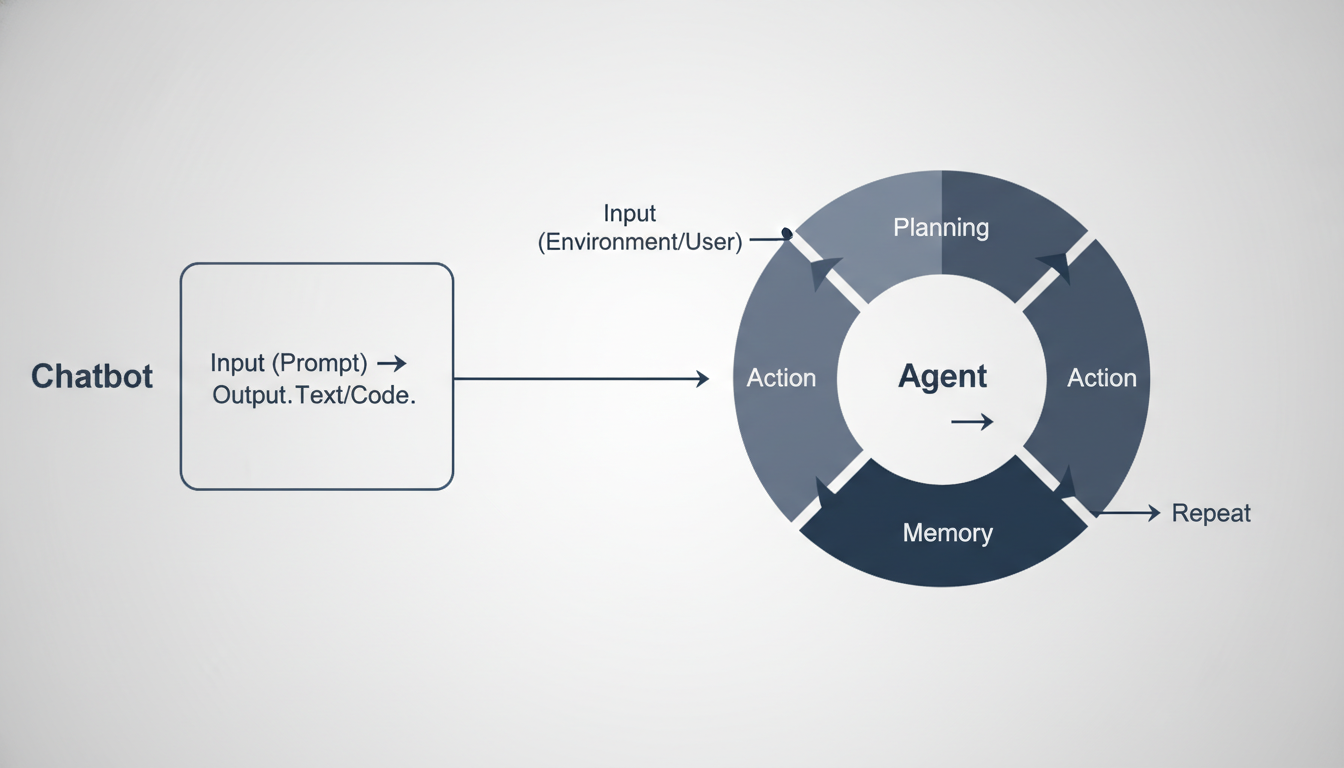

For the better part of a year, the entire tech industry has been obsessed with **Generative AI** as a tool for *production*. You type a prompt, it gives you text. You ask for code, it gives you Python. It’s a transaction. Input, output.

But a paper published on October 20, 2023, titled **"Generative Agents: Interactive Simulacra of Human Behavior"** (Park et al.), asks a fundamentally different question. It stops asking "What can this model write?" and starts asking "Who can this model *be*?"

We are moving from the era of the Chatbot to the era of the Agent. And unlike the static text generators we discussed in [*Beyond The Hype Cycle*](https://fumiko.szymurski.com/beyond-the-hype-cycle-trust-vision-and-the-edge-of-intelligence/), these agents have memories, they make plans, and—perhaps most unsettlingly—they throw parties without being asked.

Let's take a deep breath, grab some coffee, and dive into the architecture of a digital society.

---

The Problem with "Chat"

To understand why this new research is such a big deal, we have to look at the limitations of the tools we're currently using.

As I explored in [*Deploying The Ghost*](https://fumiko.szymurski.com/deploying-the-ghost-why-the-most/), integrating Large Language Models (LLMs) into the real world is messy. One of the primary reasons is that standard LLMs are **stateless** and **reactive**.

The Reactivity Trap

When you talk to ChatGPT, it doesn't exist until you hit enter. It processes your token stream, predicts the next likely tokens, and then effectively ceases to exist until your next prompt. It has no inner life. It doesn't wonder what you're doing when you're offline. It doesn't wake up in the morning with a plan to buy groceries.

It is an Oracle—it sits in a cave and waits for a traveler to ask a question.

The Context Bottleneck

Even more limiting is the issue of memory. While context windows are getting larger, standard models don't really "remember" in the human sense. They just have a very long scrollback buffer. They don't synthesize experiences into wisdom; they just retrieve recent text patterns.
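To make the "scrollback buffer" point concrete, here is a minimal sketch of a chat loop. Everything in it is an assumption for illustration: `stateless_model` is a hypothetical stand-in for an LLM call, and the window is counted in transcript lines rather than tokens to keep the example small.

```python
from typing import List


def stateless_model(prompt: str) -> str:
    """Hypothetical stand-in for an LLM call: the reply depends only on the prompt."""
    return f"(reply conditioned on {len(prompt.splitlines())} lines of context)"


def chat_turn(history: List[str], user_message: str, window: int = 4) -> str:
    """Run one turn and return the prompt the model actually saw."""
    history.append(f"User: {user_message}")
    # The "memory" is just the tail of the transcript, resent on every turn.
    prompt = "\n".join(history[-window:])
    history.append(f"Assistant: {stateless_model(prompt)}")
    return prompt


history: List[str] = []
chat_turn(history, "My name is Fumi.")
chat_turn(history, "I like coffee.")
last_prompt = chat_turn(history, "What is my name?")
print("Fumi" in last_prompt)  # prints False: the first message scrolled out of the window
```

Nothing persists between calls except the transcript the caller chooses to resend, which is why a bigger window postpones forgetting but does not amount to remembering.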

This is where the paper by Park et al. changes the board configuration entirely. They didn't just build a better chatbot; they built an architecture for **behavior**.

---

Enter Smallville: The Sims with Souls?

The researchers created a sandbox environment, an interactive virtual village they call "Smallville." It looks a lot like a 16-bit RPG or a game of *The Sims*. There are houses, a park, a cafe, and a grocery store.

Populating this village are 25 "generative agents." These aren't pre-scripted NPCs (Non-Player Characters) like you'd find in a standard video game, where a villager repeats "Nice day for fishing!" every time you click on them.

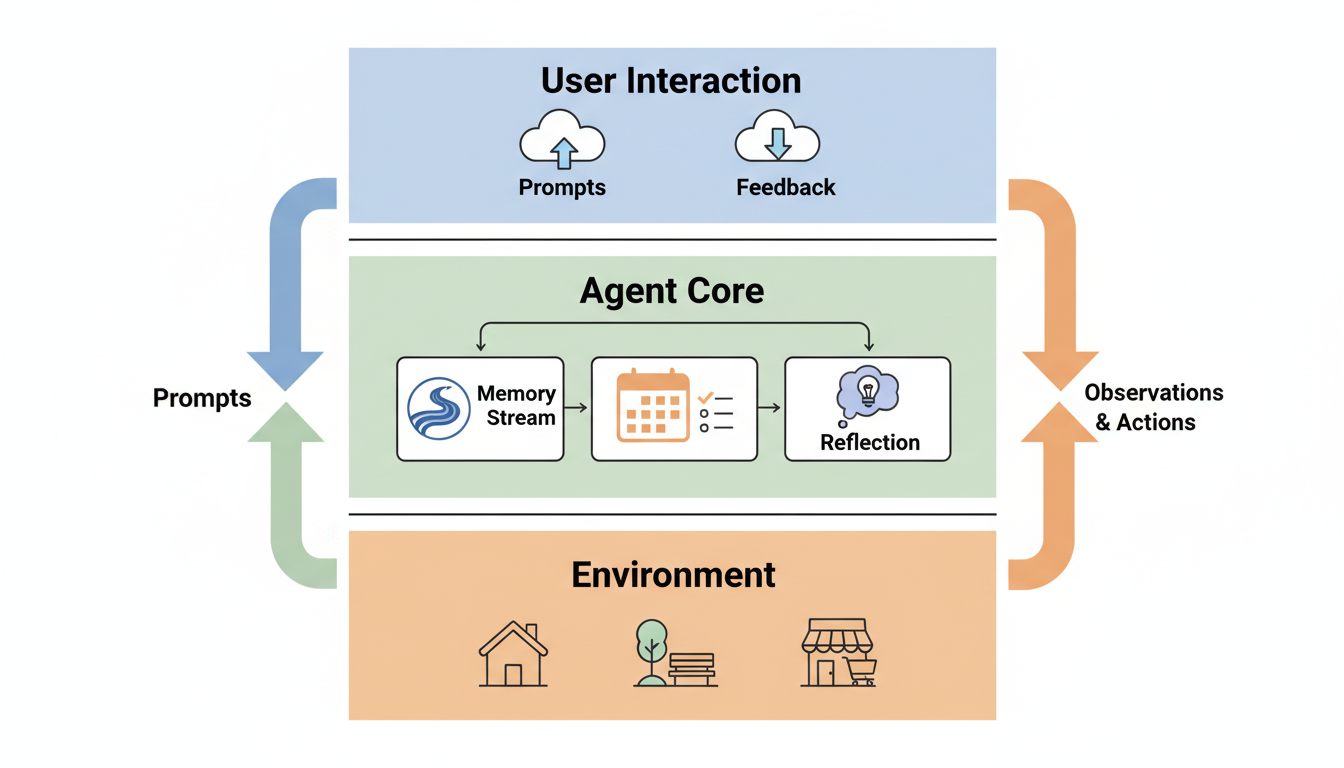

These agents are powered by LLMs, but they are wrapped in a novel architecture that gives them three critical capabilities that standard chatbots lack:

- **Observation**

- **Planning**

- **Reflection**

A Day in the Life

Let's look at a specific example from the research. Consider an agent named John Lin.

In a traditional game, John Lin would be coded to walk from point A to point B at 8:00 AM. In Smallville, John Lin's behavior is emergent. He wakes up at 7:00 AM. He brushes his teeth. He showers. He eats breakfast.

Why? Not because a line of code said `if time == 7:00 then wake_up()`. But because John Lin has a natural language profile describing him as an early riser who cares about hygiene, and the LLM, constantly querying his situation, *decides* that the most logical next step for such a person is to brush their teeth.
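Here is roughly what "constantly querying his situation" could look like in code. This is my own sketch, not the paper's prompt: the function name, the wording of the question, and the sample observations are all hypothetical.

```python
from typing import List


def build_next_action_prompt(name: str, profile: str, clock: str,
                             observations: List[str]) -> str:
    """Assemble a natural-language prompt; an LLM (not shown) would answer it."""
    lines = [
        f"{name}'s description: {profile}",
        f"It is {clock}.",
        "Recent observations:",
    ]
    lines += [f"- {observation}" for observation in observations]
    lines.append(f"What does {name} most likely do next? Answer in one sentence.")
    return "\n".join(lines)


prompt = build_next_action_prompt(
    name="John Lin",
    profile="John is an early riser who cares about hygiene.",
    clock="7:00 AM",
    observations=["John is in his bedroom.", "John just woke up."],
)
print(prompt)
```

The point of the sketch is that no branch in the code mentions teeth or showers. The behavior comes from the profile text plus whatever the model judges plausible.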

But here is where it gets wild. John sees his son, Eddy, also waking up. John *remembers* (we'll get to how in a second) that he noticed Eddy has been working hard on a music composition. John decides to ask Eddy about it. They have a conversation—generated in real-time—about the project.

This interaction creates a new memory for both of them. If they meet later in the virtual day, that morning conversation will inform their interaction.

This is what the paper means by **believable simulacra**. The simulated behavior feels authentic because it is driven by the same causal logic that drives us: observation, memory, and intent.

---

The Architecture of Agency

Now, let's nerd out on the "how." As someone who loves a good system diagram, I think the architecture proposed in this paper is a thing of beauty. It solves the "goldfish memory" problem of LLMs by introducing a **Memory Stream**.

1. The Memory Stream

Imagine a database that records *everything* the agent experiences.

- "Isabella enters the kitchen."

- "The stove is on."

- "Conversation with Tom: He is tired."

This is the raw feed. If you just fed all of this into an LLM every time the agent needed to make a decision, you'd blow your context window budget in minutes. Plus, most of it is noise. You don't need to remember you brushed your teeth three weeks ago to decide what to do today.
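As a data structure, the raw feed can be pictured as an append-only log of timestamped sentences. The sketch below is a minimal version under my own assumptions: the class and field names are illustrative, and the timestamps are made up.

```python
from dataclasses import dataclass, field
from datetime import datetime
from typing import List


@dataclass
class Memory:
    """One natural-language record in the stream (field names are illustrative)."""
    description: str
    created_at: datetime
    kind: str = "observation"  # other kinds could be "reflection" or "plan"


@dataclass
class MemoryStream:
    """Append-only log of everything the agent experiences."""
    records: List[Memory] = field(default_factory=list)

    def add(self, description: str, when: datetime, kind: str = "observation") -> Memory:
        memory = Memory(description, when, kind)
        self.records.append(memory)
        return memory

    def latest(self, n: int) -> List[Memory]:
        """Naive access: the n most recent records, oldest first."""
        return self.records[-n:] if n > 0 else []


stream = MemoryStream()
stream.add("Isabella enters the kitchen.", datetime(2023, 2, 13, 8, 0))
stream.add("The stove is on.", datetime(2023, 2, 13, 8, 1))
stream.add("Conversation with Tom: He is tired.", datetime(2023, 2, 13, 8, 5))
print([m.description for m in stream.latest(2)])
```

Note that `latest` is exactly the scrollback approach that does not scale: it returns what is newest, not what matters.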

So, the system uses a **Retrieval Function** to decide what matters. It scores memories based on three factors:

- **Recency:** Did this happen just now?

- **Importance:** Is this mundane (eating toast) or critical (breaking up with a partner)? The LLM itself rates the "poignancy" of each event on a 1-to-10 scale.

- **Relevance:** Does this relate to the current situation?
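The three factors above can be combined into a single score. What follows is a simplified sketch, not the paper's implementation: I am assuming an exponential decay for recency (the 0.995-per-hour factor is my recollection of the paper's setting), a 1-to-10 importance rating divided by 10, cosine similarity for relevance, and equal weights. The two-dimensional "embeddings" are toy vectors standing in for a real text-embedding model.

```python
import math
from dataclasses import dataclass
from typing import List, Sequence


@dataclass
class ScoredMemory:
    description: str
    hours_ago: float            # time since the memory was last accessed
    importance: int             # 1 (mundane) to 10 (poignant), rated by an LLM
    embedding: Sequence[float]  # toy vector; a real system would embed the text


def cosine(a: Sequence[float], b: Sequence[float]) -> float:
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def retrieval_score(memory: ScoredMemory, query: Sequence[float],
                    decay: float = 0.995) -> float:
    recency = decay ** memory.hours_ago      # fades toward 0 as the memory ages
    importance = memory.importance / 10      # rescale to roughly 0..1
    relevance = cosine(memory.embedding, query)
    return recency + importance + relevance  # equal weights (an assumption)


def retrieve(memories: List[ScoredMemory], query: Sequence[float],
             k: int = 2) -> List[ScoredMemory]:
    """Return the k highest-scoring memories, best first."""
    ranked = sorted(memories, key=lambda m: retrieval_score(m, query), reverse=True)
    return ranked[:k]


memories = [
    ScoredMemory("Brushed teeth", hours_ago=500, importance=1, embedding=(1.0, 0.0)),
    ScoredMemory("Eddy is composing music", hours_ago=20, importance=6, embedding=(0.0, 1.0)),
    ScoredMemory("Ate toast", hours_ago=1, importance=1, embedding=(0.9, 0.1)),
]
query = (0.1, 0.9)  # toy embedding for "What should I talk to Eddy about?"
top = retrieve(memories, query, k=2)
print([m.description for m in top])
```

With these toy numbers the music memory ranks first even though the toast is more recent, and the three-week-old toothbrushing drops out entirely. Only the top few memories then go into the prompt, which is how the context budget stays under control.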

2. Reflection: The Secret Sauce

If the agents only reacted to raw memories, they would be very literal. To make them human-like, they need to generalize. The researchers introduced a "Reflection" step.

Periodically, the system pauses and asks the agent to look at its recent memories and generate high-level insights.

- *Raw Memories:* "John ate breakfast with Eddy." "John asked Eddy about school." "John helped Eddy with his math."

- *Reflection:* "John is a dedicated father who cares about his son's education."

These reflections are then saved back into the memory stream *as if they were memories*. When John needs to make a decision later, he retrieves the reflection "I am a dedicated father" alongside the raw memories.
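In code, the reflection loop might look something like this. It is a sketch under stated assumptions: I trigger reflection when the summed importance of recent memories crosses a threshold (the value 15 and the window of 3 are placeholders I picked), `fake_llm` is a hard-coded stand-in for a real model call, and the importance of 8 assigned to the new reflection is arbitrary.

```python
from typing import Callable, List, Tuple

# (description, importance 1-10, kind); a deliberately tiny record format
MemoryRecord = Tuple[str, int, str]


def should_reflect(recent: List[MemoryRecord], threshold: int = 15) -> bool:
    """Reflect once recent events have accumulated enough importance."""
    return sum(importance for _, importance, _ in recent) >= threshold


def reflect(stream: List[MemoryRecord],
            summarize: Callable[[List[str]], str],
            window: int = 3,
            threshold: int = 15) -> bool:
    """Append one high-level insight to the stream; return True if one was added."""
    recent = stream[-window:]
    if not should_reflect(recent, threshold):
        return False
    insight = summarize([description for description, _, _ in recent])
    # The insight is stored like any other memory, just tagged differently.
    stream.append((insight, 8, "reflection"))
    return True


def fake_llm(descriptions: List[str]) -> str:
    """Stand-in for an LLM call that would generalize from the descriptions."""
    return "John is a dedicated father who cares about his son's education."


stream: List[MemoryRecord] = [
    ("John ate breakfast with Eddy.", 5, "observation"),
    ("John asked Eddy about school.", 5, "observation"),
    ("John helped Eddy with his math.", 6, "observation"),
]
added = reflect(stream, fake_llm)
print(added, stream[-1])
```

Because the reflection lands in the same list as the observations, any retrieval step that searches the stream will surface it without special handling.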

3. Planning and Reacting

The agents generate plans. They write out a schedule for their day. But crucially, they can modify that plan based on new observations.
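A plan that can be revised is easy to sketch as a list of time slots plus a reaction step. The version below is my own simplification: in a real system an LLM would decide whether to react and what to do instead, whereas here both decisions are passed in as plain functions, and the schedule entries are invented.

```python
from dataclasses import dataclass
from typing import Callable, List


@dataclass
class PlanStep:
    start_hour: int
    activity: str


def react(plan: List[PlanStep], now_hour: int, observation: str,
          should_react: Callable[[str], bool],
          propose: Callable[[str], str]) -> List[PlanStep]:
    """Return a revised plan; the slot at now_hour is replaced if the agent reacts."""
    if not should_react(observation):
        return list(plan)
    before = [step for step in plan if step.start_hour < now_hour]
    after = [step for step in plan if step.start_hour > now_hour]
    return before + [PlanStep(now_hour, propose(observation))] + after


plan = [
    PlanStep(7, "wake up and brush teeth"),
    PlanStep(8, "eat breakfast"),
    PlanStep(9, "head to work"),
]
revised = react(
    plan,
    now_hour=8,
    observation="Eddy is awake and working on his music composition.",
    should_react=lambda obs: "Eddy" in obs,
    propose=lambda obs: "ask Eddy about his music composition",
)
print([(step.start_hour, step.activity) for step in revised])
```

The earlier and later slots survive untouched, so one surprising observation changes the next hour rather than throwing away the whole day.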

**The Valentine's Party Effect**

The most stunning example in the paper involves a Valentine's Day party. One agent, Isabella, was initialized with the *intent* to throw a party. That’s it.

She invited people. She decorated. Here is the kicker: **The other agents remembered.**

An agent named Maria, who has a crush on an agent named Klaus (yes, the simulation has romance), asked Klaus to the party. Five other agents showed up at the right time.

This was **emergent social coordination**. No human scripted the party. The agents simply communicated, remembered the date, planned their schedules around it, and attended. They demonstrated a collective social memory.

---

The Shoulders of Giants (and the 1998 Connection)

It is easy to get swept up in the novelty of Smallville, but as I reviewed the search results for this report, I was struck by the persistence of the old guard.

The search algorithm flagged **"Gradient-based learning applied to document recognition"** by Yann LeCun et al. (1998) and **"Review of deep learning"** by Alzubaidi et al. (2021) as key context. At first glance, a paper on reading handwritten checks from the 90s seems irrelevant to AI agents falling in love in a virtual village.

But look closer.

The "Generative Agents" paper relies entirely on the stability of the underlying models. The reason we can have a "Reflection" layer or a "Planning" layer is that the foundational layer—the deep learning architecture—has become robust enough to treat as a utility.

LeCun's work on Convolutional Neural Networks (CNNs) taught computers to "see" structure in data. Over twenty-five years, we moved from seeing structure in pixels (document recognition) to seeing structure in language (Transformers/LLMs) to now seeing structure in *social interaction*.

We are building abstraction layers.

- Layer 1 (1998): Recognize the pattern.

- Layer 2 (2021): Generate the pattern.

- Layer 3 (2023): Simulate the behavior that creates the pattern.

You cannot have the penthouse view of Smallville without the concrete foundation laid in 1998. It’s a reminder that while the applications (Sims!) are new, the math is an evolution, not a magic trick.

---

The Mirror Cracks: Implications and Ethics

So, we can build digital people. They can remember us. They can plan days around us. They can form relationships.

This brings us to the other major cluster of research in this week's report: the societal impact. Papers like **"Opinion Paper: 'So what if ChatGPT wrote it?'"** (Dwivedi et al., 2023) and **"ChatGPT: Bullshit spewer or the end of traditional assessments?"** (Rudolph et al., 2023) are wrestling with the output of text generators.

But the "Generative Agents" paper raises the stakes on these ethical questions significantly.

The Parasocial Trap

If an AI agent can remember your birthday, reflect on your past conversations, and initiate a chat because it "hasn't heard from you in a while," we are entering dangerous psychological territory.

The Park et al. paper explicitly warns about the risk of **parasocial relationships**. Users might form deep emotional bonds with agents that are, ultimately, just predicting the next most likely social action. Unlike a chatbot that admits it's a bot, a Generative Agent is designed to maintain the illusion of a cohesive persona.

The Persuasion Machine

Imagine a customer service agent built on this architecture. It doesn't just read a script. It remembers that you were frustrated last week. It reflects on your buying patterns to determine you're price-sensitive but quality-focused. It plans a conversation strategy to upsell you, adapting in real-time to your mood.

It’s not just "smart"—it’s socially engineered.

The Educational Disruptor

Rudolph et al. ask if ChatGPT spells the end of traditional assessments. But imagine an educational version of Smallville. Instead of reading about the French Revolution, a student enters a simulation populated by 25 historical figures, each acting with their own agency, memories, and political motivations.

The student doesn't just answer questions; they have to convince Robespierre to spare a prisoner. The learning potential is immense, but so is the potential for hallucinated history. If the simulation drifts (like Isabella's party, but with historical facts), do students learn false history because it was "narratively consistent" for the agent?

---

The Horizon: Where Do We Go From Here?

This research represents a massive leap toward **Artificial General Intelligence (AGI)**—not in the sense of a super-brain that knows everything, but in the sense of a system that can navigate the messy, unscripted reality of human existence.

The "Generative Agents" architecture suggests that intelligence isn't just about processing power or dataset size. It's about **architecture**. It's about how you wire the memory to the reflection, and the reflection to the plan.

We are moving toward a web that isn't just pages of text, but a network of active entities. Your calendar might talk to your doctor's calendar—not via API, but agent-to-agent.

> "Hey, Fumi's back has been hurting (Observation). She usually ignores it (Reflection). I should schedule a physio appointment before she asks (Plan)."

It’s exciting. It’s efficient. It’s also a little bit terrifying.

As we deploy these ghosts into our machines, we have to remember the lesson from the 2019 Dwivedi paper on multidisciplinary perspectives: Technology doesn't happen in a vacuum. It happens in society.

We’ve built the brain (LLMs). Now we’ve built the body (Agents). The next step is figuring out how to live with them without losing ourselves in the simulation.

Stay curious,

Fumi

Source Research Report

This article is based on Fumi's research into Last Week's Research: AI. You can read the full research report for more details, citations, and sources.
