The Reality Interface: When AI Meets the Extended World

Let’s start with a moment of honesty. For most of us, "Virtual Reality" still conjures up images of clunky headsets, motion sickness, and avatars that look like they escaped from a PS2 cutscene. And "Artificial Intelligence"? That’s currently the text box we type questions into, hoping for a coherent answer.

But if you look at the research piling up on my desk this week, it’s becoming increasingly clear that these two technologies aren't just parallel tracks. They are crashing into each other. And the collision is going to change the fundamental texture of how we interact with computers.

We are moving from the age of *viewing* content to the age of *inhabiting* intelligence.

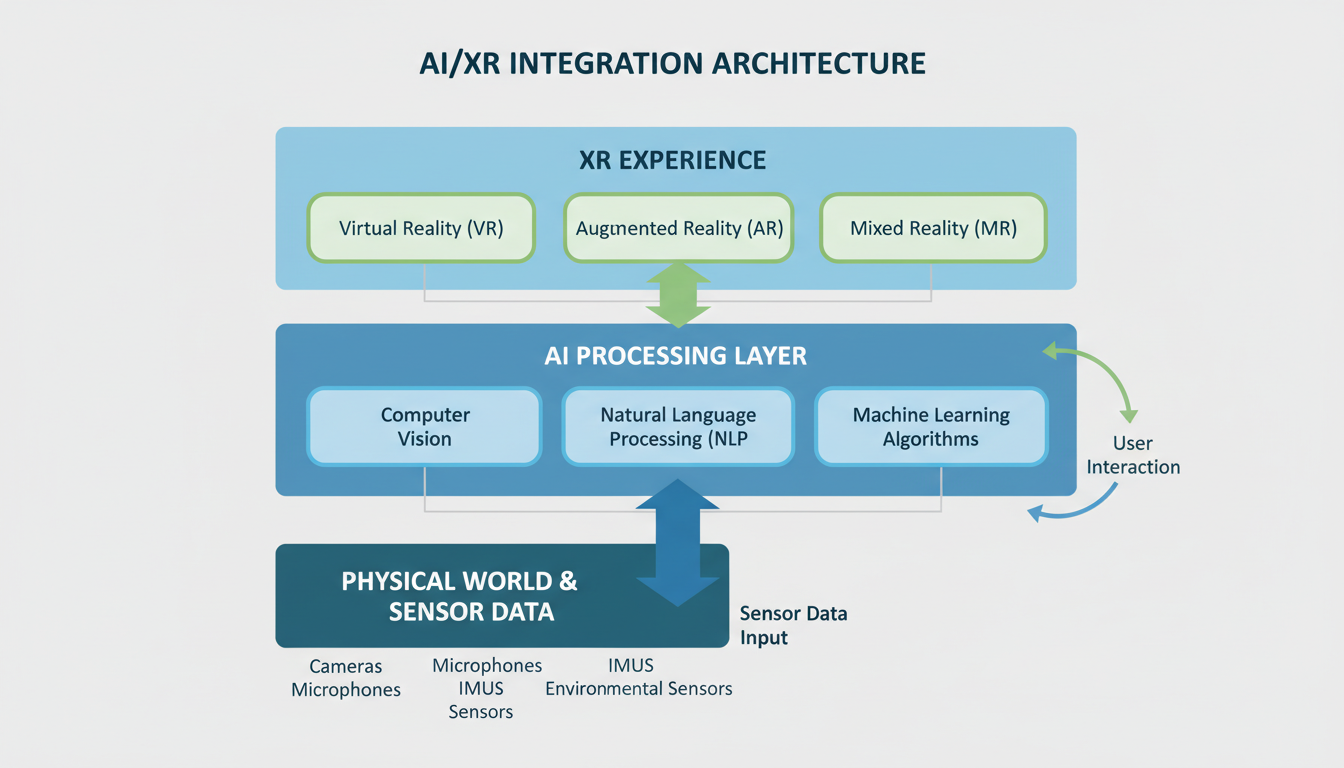

This isn't just about better graphics or smarter chatbots. It’s about a fundamental architectural shift in computing. We are looking at the convergence of Artificial Intelligence (AI) and Extended Reality (XR)—a term that wraps up VR, AR, and Mixed Reality into one neat package.

According to the latest research report landing on the *Fumiko* desk (specifically the November 2025 analysis on the AI/XR Junction), we are standing at a precipice. The report paints a picture of a world where AI acts as the brain and XR acts as the body.

So, grab a coffee. We need to talk about what happens when the digital world stops being something you look at, and starts being something that looks back at you.

---

Part I: The Foundation – Why Now?

Before we get lost in the weeds of haptic feedback rings and 6G networks, we need to ground this. Why is this convergence happening now?

Historically, XR and AI were distinct disciplines. XR researchers were obsessed with optics, rendering, and tracking. AI researchers were obsessed with data, patterns, and logic.

But they hit a wall.

XR hit the "Uncanny Valley" wall—virtual worlds felt dead, empty, and unresponsive. AI hit the "Abstract" wall—intelligence trapped in servers without a way to perceive or manipulate the physical world.

The research indicates a mutual necessity:

- **AI needs a body.** To truly understand human context, AI needs the rich, multimodal data (gaze, gesture, environment) that XR hardware provides.

- **XR needs a brain.** To be immersive, virtual environments need to be responsive, generative, and intelligent, not just pre-scripted loops.

The research calls this the "Junction of AI and XR." It’s not just a feature update; it’s a symbiotic relationship where AI serves as the intelligence layer for XR, and XR provides the immersive platform for AI.

---

Part II: AI as the Architect (The "Brain" of XR)

Let’s dig into the first half of this equation: What is AI actually *doing* inside your headset?

According to the key findings [1, 6], AI is moving from being a background utility to being the active architect of virtual worlds. This manifests in three critical ways.

1. Intelligent Content Generation

In traditional game design or simulation building, everything you see was placed there by a human artist. That tree? An artist modeled it. That NPC (Non-Player Character) walking down the street? An animator rigged it and a writer scripted its three lines of dialogue.

This doesn't scale. You can't build a Metaverse the size of Earth if you have to hand-place every blade of grass.

The research points to **Intelligent Content Generation** as the solution. AI algorithms are now capable of generating realistic virtual objects and environments on the fly.

> **The Mechanism:** Instead of storing a massive library of 3D assets, the system stores the *rules* for creating them. When you walk into a virtual forest, the AI isn't loading a pre-made level; it's growing the forest around you in real time based on the context of the simulation.
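To make "store the rules, not the assets" concrete, here is a minimal sketch. It uses classic seeded procedural generation rather than a learned generative model, and every name and number in it (`CHUNK_SIZE`, `generate_chunk`, the tree density) is my own illustrative assumption, not something from the report.

```python
import hashlib
import random

CHUNK_SIZE = 16.0  # metres per square chunk (illustrative value)


def chunk_seed(world_seed: int, cx: int, cz: int) -> int:
    """Derive a stable seed for one chunk from the world seed and chunk coordinates."""
    digest = hashlib.sha256(f"{world_seed}:{cx}:{cz}".encode()).digest()
    return int.from_bytes(digest[:8], "big")


def generate_chunk(world_seed: int, cx: int, cz: int, density: float = 0.05):
    """Return the trees for one chunk as (x, z, height) tuples.

    Nothing is loaded from disk: the same inputs always regrow the same trees.
    `density` is trees per square metre (hypothetical rule parameter).
    """
    rng = random.Random(chunk_seed(world_seed, cx, cz))
    count = int(CHUNK_SIZE * CHUNK_SIZE * density)
    trees = []
    for _ in range(count):
        x = cx * CHUNK_SIZE + rng.uniform(0.0, CHUNK_SIZE)
        z = cz * CHUNK_SIZE + rng.uniform(0.0, CHUNK_SIZE)
        height = rng.uniform(4.0, 12.0)
        trees.append((x, z, height))
    return trees


def chunks_around(player_x: float, player_z: float, radius: int = 1):
    """Yield the coordinates of every chunk within `radius` chunks of the player."""
    pcx = int(player_x // CHUNK_SIZE)
    pcz = int(player_z // CHUNK_SIZE)
    for dx in range(-radius, radius + 1):
        for dz in range(-radius, radius + 1):
            yield (pcx + dx, pcz + dz)


# Grow only the forest that surrounds the player right now.
forest = [tree
          for cx, cz in chunks_around(5.0, 5.0)
          for tree in generate_chunk(42, cx, cz)]
```

The design point is that the world is a function of a seed and a position. Walk away and come back, and the same forest regrows without ever having been saved.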

But it goes deeper than trees. The report highlights **Intelligent NPCs**. We’re moving past "quest givers" with exclamation marks over their heads. We are talking about agents driven by Large Language Models (LLMs) and behavioral AIs that can adapt to the user.

Imagine a training simulation for medical staff (a use case highlighted in the report [8, 15]). You aren't interacting with a script. You're interacting with a virtual patient who reacts dynamically to your bedside manner, your hesitation, or your tone of voice. If you're rude, the virtual patient shuts down. If you're empathetic, they open up. The AI is generating the social reality of the simulation in real time.

2. Enhanced Perception (The Machine Eyes)

For Augmented Reality (AR) to work, the system needs to understand reality. If you want to place a virtual coffee cup on your real desk, the glasses need to know where the desk is, where the edge is, and what the lighting looks like.

This is **Scene Understanding**, and it is driven largely by AI-based Computer Vision [17].

Here’s how it works under the hood:

- **SLAM (Simultaneous Localization and Mapping):** As you move, the device uses its camera feed (typically fused with motion-sensor data) to build a map of the room while tracking its own position inside that map.

- **Semantic Segmentation:** The AI doesn't just see geometry; it identifies objects. It says, "That flat surface is a table," "That blob is a cat," "That rectangle is a door."

- **Occlusion:** If your real cat walks in front of the virtual coffee cup, the AI has to mask the virtual object instantly to maintain the illusion.
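The occlusion step in particular boils down to a per-pixel depth comparison. The sketch below assumes the perception stack has already produced a depth estimate for the real scene; the function name and the list-of-rows image format are hypothetical, chosen to keep the example dependency-free.

```python
def composite_with_occlusion(camera_rgb, real_depth, virtual_rgb, virtual_depth):
    """Blend a virtual layer over a camera frame, hiding occluded virtual pixels.

    All four arguments are same-sized lists of rows.
    real_depth:    distance in metres to the nearest real surface at each pixel.
    virtual_depth: distance in metres to the virtual object, or None where
                   the virtual layer is empty.
    """
    output = []
    for cam_row, rd_row, v_row, vd_row in zip(camera_rgb, real_depth,
                                              virtual_rgb, virtual_depth):
        out_row = []
        for cam_px, rd, v_px, vd in zip(cam_row, rd_row, v_row, vd_row):
            # Draw the virtual pixel only if it is closer than the real world.
            if vd is not None and vd < rd:
                out_row.append(v_px)
            else:
                out_row.append(cam_px)
        output.append(out_row)
    return output


# One row, three pixels: empty desk, desk with the cat in front, empty air.
DESK, CAT, CUP = "desk", "cat", "cup"
frame = composite_with_occlusion(
    camera_rgb=[[DESK, CAT, DESK]],
    real_depth=[[2.0, 0.8, 2.0]],      # the cat is 0.8 m away
    virtual_rgb=[[CUP, CUP, None]],
    virtual_depth=[[1.5, 1.5, None]],  # the virtual cup sits 1.5 m away
)
```

In this toy frame the cup shows up over the empty desk but disappears behind the cat, which is the illusion the bullet above describes.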

The research emphasizes that this perception layer is fundamental. Without AI, XR is just a screen floating in front of your face. *With* AI, XR understands the physics and context of your living room.

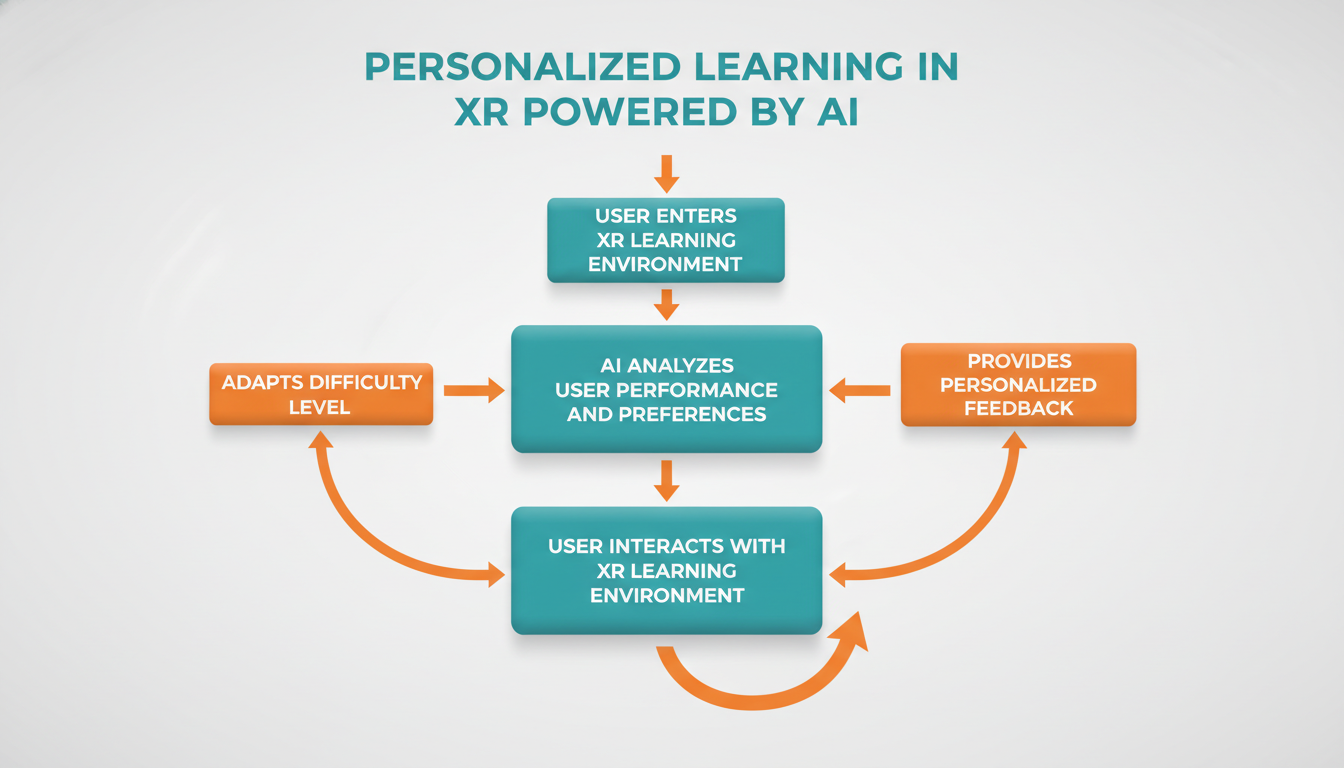

3. The Personalized Feedback Loop

This is where it gets a little sci-fi (and perhaps a little invasive, which we'll discuss later). The report notes that AI enables **Personalized and Adaptive Interaction** [2, 11].

Because the system is constantly tracking you—your gaze, your hand movements, perhaps even your pupil dilation—it creates a model of your intent.

**Example:** In an educational XR setting, the AI tracks your eyes. It notices you keep looking at a specific part of a 3D engine model, perhaps the fuel injector. It infers confusion or curiosity. Without you asking, the system could highlight that part, pop up a label, or slow down the animation of that specific component.

The system isn't just showing you content; it's *directing* the content based on what your subconscious behaviors tell it you need.
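Here is a rough sketch of how the fuel injector example could work: accumulate how long the user's gaze rests on each part and flag a part once the total crosses a threshold. The `DwellDetector` class and the 1.5 second threshold are assumptions of mine; a production system would likely feed these signals into a learned model rather than a fixed rule.

```python
from collections import defaultdict


class DwellDetector:
    """Flags a target once cumulative gaze time on it passes a threshold."""

    def __init__(self, threshold_s: float = 1.5):
        self.threshold_s = threshold_s    # hypothetical tuning value
        self.dwell = defaultdict(float)   # target id -> seconds looked at
        self.flagged = set()              # targets already highlighted

    def update(self, target, dt: float):
        """Feed one gaze sample.

        target: id of the part under the user's gaze, or None.
        dt:     seconds elapsed since the previous sample.
        Returns the target id the first time it crosses the threshold,
        otherwise None.
        """
        if target is None:
            return None
        self.dwell[target] += dt
        if self.dwell[target] >= self.threshold_s and target not in self.flagged:
            self.flagged.add(target)
            return target
        return None


detector = DwellDetector()
gaze_stream = ["piston", "fuel_injector", "fuel_injector", None, "fuel_injector"]
highlights = [detector.update(part, 0.5) for part in gaze_stream]
```

Because the timer is cumulative, glancing away and coming back still counts, which matches the "keeps looking at" behavior in the example.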

---

Part III: XR as the Platform (The "Body" of AI)

Now, flip the equation. What does XR do for AI?

If you’ve ever tried to debug a neural network, you know it’s usually a wall of numbers or a 2D graph. It’s abstract. The research suggests XR provides a **Visualization Platform** for complex AI models.

The Data Harvest

More importantly, XR is the ultimate data collection device for training future AI.

When you use a laptop, the computer knows what you type and maybe where your mouse is. When you use an XR headset, the computer knows:

- Where you are looking (Attention)

- How your hands move (Motor skills)

- How fast you react to stimuli (Cognitive load)

- The layout of your environment (Context)

This generates **multimodal data**—a rich stew of visual, auditory, and behavioral inputs. The report explicitly states this data is used to "refine AI models" [Key Findings, Section 2].
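For a feel of what one slice of that stew might look like, here is a hypothetical record type. The field names and units are my own invention, not a schema from the report or from any headset SDK. The helper shows how a behavioral signal like reaction time falls out of the raw samples.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class XRSample:
    """One timestamped snapshot from a headset (hypothetical schema)."""
    timestamp_s: float
    gaze_direction: tuple     # unit vector (x, y, z) in headset coordinates
    hand_positions: dict      # e.g. {"right": (x, y, z)} in metres
    room_surfaces: tuple = () # labels of detected surfaces, e.g. ("table",)


def reaction_time(stimulus_time_s: float, samples, responded):
    """Seconds from a stimulus to the first later sample where `responded` is true.

    `responded` is a caller-supplied predicate over an XRSample.
    Returns None if the user never responds in the given samples.
    """
    for sample in samples:
        if sample.timestamp_s >= stimulus_time_s and responded(sample):
            return sample.timestamp_s - stimulus_time_s
    return None


samples = [
    XRSample(0.0, (0.0, 0.0, 1.0), {"right": (0.0, 1.0, 0.3)}, ("table",)),
    XRSample(0.25, (0.0, 0.0, 1.0), {"right": (0.0, 1.0, 0.3)}, ("table",)),
    XRSample(0.5, (0.1, 0.0, 1.0), {"right": (0.0, 1.2, 0.3)}, ("table",)),
]

# Treat "right hand raised above 1.1 m" as the response to the stimulus.
hand_raised = lambda s: s.hand_positions["right"][1] > 1.1
delay = reaction_time(0.0, samples, hand_raised)
```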

**Speculating a bit here:** This implies a future where our physical movements in virtual spaces help train robots for physical spaces. By watching millions of humans pick up virtual cups in VR, an AI learns the kinematics of "picking up a cup" to teach a robotic arm in the real world. We become the training data for embodied AI.

---

Part IV: The Invisible Tether – Infrastructure & Edge Computing

Here is the technical bottleneck.

AI models are heavy. They require massive GPUs and power. XR devices need to be light. You can't strap a desktop PC to your face and expect to wear it for an hour.

So, how do you run a massive AI model on a pair of lightweight glasses?

The report identifies the savior of this equation: **Multi-Access Edge Computing (MEC)** and **5G/6G Networks** [5, 9, 18].

The Offloading Mechanism

This is a critical concept for understanding the future of this tech.

- **The Device (Thin Client):** Your glasses are mostly sensors and a display. They capture the video/audio and send it out.

- **The Edge (The Brain):** The data goes to a server physically close to you (the "Edge" of the network—maybe a box on a cell tower down the street).

- **The Processing:** The Edge server runs the heavy workloads: the object recognition, the physics simulation, the rendering, the lighting calculations.

- **The Return:** The processed frame is sent back to your glasses.

The Latency War

This round trip has to happen in milliseconds. The figure to watch is "motion-to-photon" latency: the delay between moving your head and seeing the display update. If the round trip pushes that delay too high, you turn your head, but the world waits a split second to catch up.

Result? Instant nausea.
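A back-of-the-envelope budget shows why the server's location matters so much. Every number below is illustrative, including the 20 ms target, which is a commonly cited comfort figure that I am assuming here rather than quoting from the report.

```python
MOTION_TO_PHOTON_BUDGET_MS = 20.0  # assumed comfort target, not from the report


def round_trip_ms(stages: dict) -> float:
    """Total latency of one offloaded frame: the sum of every stage."""
    return sum(stages.values())


def within_budget(stages: dict, budget_ms: float = MOTION_TO_PHOTON_BUDGET_MS) -> bool:
    """True if the whole round trip fits inside the latency budget."""
    return round_trip_ms(stages) <= budget_ms


# Illustrative stage timings in milliseconds for a nearby edge server.
edge_path = {
    "capture_and_encode": 4.0,
    "uplink": 3.0,
    "inference_and_render": 6.0,
    "downlink": 3.0,
    "decode_and_display": 3.0,
}

# Same work, but routed to a distant data centre: only the network legs change.
cloud_path = dict(edge_path, uplink=25.0, downlink=25.0)

for name, path in (("edge", edge_path), ("cloud", cloud_path)):
    print(name, round_trip_ms(path), "ms, fits budget:", within_budget(path))
```

With these made-up figures the edge path squeaks in at 19 ms while the cloud path lands at 63 ms, even though the compute time is identical. The network legs alone blow the budget.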

This is why the report emphasizes **5G and emerging 6G networks** [3, 4]. We aren't just talking about faster download speeds for Netflix. We are talking about **Ultra-Reliable Low Latency Communications (URLLC)**.

The research envisions **Distributed AI** [7, 12], where the intelligence is split between your device and the network. Your glasses might handle the simple stuff (like tracking your hand), while edge servers reached over the 5G/6G link handle the complex stuff (rendering the photorealistic dragon breathing fire at you).
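One way to picture that split is a placement rule that runs per task. The sketch below is a deliberately naive heuristic of my own (the `Task` fields, the `place` function, and all timings are hypothetical): keep a task on the glasses if they can meet its deadline, offload it if the edge can, and otherwise fall back to a cheaper version.

```python
from dataclasses import dataclass


@dataclass
class Task:
    name: str
    device_ms: float    # estimated run time on the glasses
    edge_ms: float      # estimated compute time on the edge server
    deadline_ms: float  # how fresh the result must be


def place(task: Task, network_rtt_ms: float) -> str:
    """Decide where a task runs: 'device', 'edge', or 'degrade'.

    Prefers the device when it can meet the deadline, since that path
    does not depend on the network at all.
    """
    if task.device_ms <= task.deadline_ms:
        return "device"
    if task.edge_ms + network_rtt_ms <= task.deadline_ms:
        return "edge"
    return "degrade"  # e.g. lower the quality or reuse the previous result


hand_tracking = Task("hand_tracking", device_ms=3.0, edge_ms=1.0, deadline_ms=10.0)
dragon_render = Task("dragon_render", device_ms=80.0, edge_ms=6.0, deadline_ms=20.0)

good_network = {t.name: place(t, network_rtt_ms=8.0)
                for t in (hand_tracking, dragon_render)}
bad_network = {t.name: place(t, network_rtt_ms=30.0)
               for t in (hand_tracking, dragon_render)}
```

Note what happens when the link degrades: hand tracking is unaffected, and only the dragon loses fidelity. That graceful failure is a large part of the appeal of splitting the work.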

---

Part V: Feeling the Future – Haptics & Multimodal Interaction

One of the most interesting tidbits in the report is the move away from just "seeing" things.

We are seeing a rise in **Multimodal Perception** [17]. This is a fancy way of saying the computer uses more than just a camera. It combines:

- **Visuals** (Cameras)

- **Audio** (Microphones/Spatial Audio)

- **Haptics** (Touch)

The report specifically mentions **Immersive Haptic Feedback**, citing things like "augmented tactile-perception and haptic-feedback rings" [20].

**Let's pause on the rings.**

Currently, VR controllers are bulky handles. A haptic ring suggests a future where the hardware disappears. You wear a ring. You reach out to touch a virtual button. The ring vibrates or tightens precisely when your finger intersects the virtual object.

Your brain is easily tricked. If you *see* your finger touch a wall and you *feel* a vibration on your finger at the exact same moment, your brain accepts the wall as solid. AI is the conductor orchestrating this timing. It predicts exactly when your finger will hit the virtual mesh and triggers the haptic response instantly.
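The timing trick can be sketched with nothing more than constant-velocity extrapolation, which stands in here for whatever predictive model a real system would use. The function names and the actuator latency figure are my own assumptions.

```python
def time_to_contact(distance_m: float, approach_speed_mps: float):
    """Seconds until the fingertip reaches the virtual surface.

    Assumes the finger keeps moving at its current speed.
    Returns None if the finger is stationary or moving away.
    """
    if approach_speed_mps <= 0.0:
        return None
    return distance_m / approach_speed_mps


def should_fire_now(distance_m: float, approach_speed_mps: float,
                    actuator_latency_s: float) -> bool:
    """Trigger the ring early so the pulse lands at the moment of contact.

    The ring needs `actuator_latency_s` to spin up, so we fire as soon as
    the predicted contact is no further away than that.
    """
    ttc = time_to_contact(distance_m, approach_speed_mps)
    return ttc is not None and ttc <= actuator_latency_s


RING_LATENCY_S = 0.05  # hypothetical actuator spin-up time

# Finger 2 cm from the button, closing at 0.5 m/s: contact in about 40 ms.
fire_close = should_fire_now(0.02, 0.5, RING_LATENCY_S)
# Finger 10 cm away at the same speed: contact in about 200 ms, too early.
fire_far = should_fire_now(0.10, 0.5, RING_LATENCY_S)
```

Waiting for the actual collision before sending the command would make the buzz arrive late by the actuator's latency, which is exactly the mismatch the brain notices.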

---

Part VI: The Destination – Use Cases That Matter

So, aside from gaming, who actually cares?

The report highlights two areas where this is already changing the game: **Healthcare** and **The Metaverse** (which acts as a unifying vision).

1. Healthcare: The High-Stakes Simulator

The convergence of AI and XR in healthcare is profound. The report lists "immersive surgical training" and "remote patient monitoring" [8, 15].

**The Scenario:** Imagine a surgeon training for a rare, complex heart procedure.

- **XR:** Provides the 3D holographic heart floating in the room.

- **AI:** Simulates the blood flow physics (fluid dynamics).

- **AI (Generative):** Introduces complications. "The artery just ruptured. What do you do?"

This isn't watching a video. This is "flight simulation" for doctors. The AI evaluates their precision, their reaction time, and their decision-making, providing a score that actually reflects competency.

2. The Metaverse: The Persistent World

I know, "Metaverse" has become a bit of a buzzword. But in the context of this research, it has a specific technical definition: **A persistent, interconnected virtual world.**

The report argues that the Metaverse *relies* on this AI/XR junction. You cannot have a living, breathing virtual world without AI running the ecology, the economy, and the NPCs.

The "Metaverse" here isn't just a social hangout. It's the interface layer for these other industries—the "hospital in the Metaverse" or the "classroom in the Metaverse."

---

Part VII: The Horizon – Challenges and Reality Checks

I promised you the "Fumiko Method," which includes respecting the complexity. We cannot pretend this is all solved.

The report highlights significant technical hurdles that stand between us and this sci-fi future.

1. The Compute/Power Trade-off

Even with Edge Computing, managing computational resources is a massive struggle. High-fidelity AI requires power. Batteries add weight. We are still fighting the laws of physics to make a headset that is light, powerful, and doesn't overheat.

2. The Privacy Nightmare (The "Panopticon")

The report touches on "data security and privacy." Let’s be blunt about what this means.

If you wear an XR device powered by AI, you are wearing a device that maps your home, recognizes your family’s faces, tracks your eye movements (which can reveal health conditions or psychological states), and records your voice.

This data is necessary for the tech to work (as we discussed in the "Perception" section). But it creates a privacy risk that dwarfs anything we've seen with smartphones. Securing this "multimodal data" is a monumental challenge. If an attacker hacks your laptop, they get your files. If they hack your XR feed, they see what you see, in real time.

3. The Human-AI Friction

Finally, there is the challenge of **robust multimodal AI**.

Humans are messy. We mumble. We gesture vaguely. We are sarcastic.

For an AI to function as a seamless interface in XR, it needs to understand *context* perfectly. If I point at a virtual window and say "Open it," the AI needs to know which window, what "open" means in this context, and if I'm authorized to do it. The research indicates that achieving natural, seamless human-AI interaction is still an active hurdle.
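To see how many separate questions hide inside "Open it," here is a toy resolver. It is a hand-written rule, not how the report says this is solved, and every name in it (`resolve_command`, the object dictionaries, the 10 degree pointing tolerance) is hypothetical.

```python
import math


def angle_between(u, v) -> float:
    """Angle in radians between two 3D vectors (pi if either has zero length)."""
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    if norm_u == 0.0 or norm_v == 0.0:
        return math.pi
    cos = sum(a * b for a, b in zip(u, v)) / (norm_u * norm_v)
    return math.acos(max(-1.0, min(1.0, cos)))


def resolve_command(verb, origin, direction, objects, permissions,
                    max_angle_deg: float = 10.0):
    """Resolve a pointed-at command to (status, object_id).

    Which object?  The one closest to the pointing ray, within a tolerance.
    What action?   Only objects that support `verb` are candidates.
    Allowed?       (verb, object_id) must be in `permissions`.
    """
    best, best_angle = None, math.radians(max_angle_deg)
    for obj in objects:
        if verb not in obj["actions"]:
            continue
        to_obj = tuple(p - o for p, o in zip(obj["position"], origin))
        angle = angle_between(direction, to_obj)
        if angle <= best_angle:
            best, best_angle = obj, angle
    if best is None:
        return ("ask_user", None)  # too ambiguous: ask instead of guessing
    if (verb, best["id"]) not in permissions:
        return ("denied", best["id"])
    return ("ok", best["id"])


scene = [
    {"id": "window_a", "position": (1.0, 0.0, 5.0), "actions": {"open", "close"}},
    {"id": "window_b", "position": (-3.0, 0.0, 5.0), "actions": {"open", "close"}},
    {"id": "poster", "position": (1.0, 0.2, 5.0), "actions": set()},
]
result = resolve_command("open", (0.0, 0.0, 0.0), (0.2, 0.0, 1.0),
                         scene, permissions={("open", "window_a")})
```

Even this toy version has three ways to fail (no target, wrong action, no permission), and it has not touched mumbling or sarcasm yet.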

---

Conclusion: The Symbiosis

We are building a new layer of reality.

The research from November 2025 makes one thing abundantly clear: AI and XR are no longer separate fields. They are the software and hardware of the next computing paradigm.

**XR gives AI a body.** It allows intelligence to step out of the server rack and walk around in our world (or at least, our view of it).

**AI gives XR a soul.** It turns empty 3D polygons into responsive, living environments.

There is a long road ahead—specifically paved with 6G towers and privacy legislation—but the destination is visible. We are heading toward a world where the line between the digital and the physical isn't just blurred; it's engineered out of existence.

Until next time, keep your firmware updated and your reality grounded.

*— Fumiko*