The Physical Turn: Why AI Is Finally Leaving The Chat Window

Hey everyone, Fumi here.

It is January 25, 2026. If you’ve been following this blog for the last few weeks, you know we’ve been tracking a very specific shift in the wind.

Two weeks ago, in *"The Great Rewind,"* I noted that the "cutting edge" of AI research was starting to look a lot like an engineering textbook from the late 90s. We saw a massive resurgence of interest in foundational architectures rather than just throwing more data at bigger models. Then, last week in *"The Why Era,"* we discussed the sudden obsession with trust and transparency.

This week? The picture has come into full focus, and it is fascinating.

I’ve spent the weekend analyzing the latest batch of "trending" research papers. And I use the word "trending" loosely because, once again, the algorithm implies that the most important papers *right now* aren't necessarily the ones published yesterday. They are the ones the community is actively reading, citing, and building upon *today*.

And what are they building?

They are building a body.

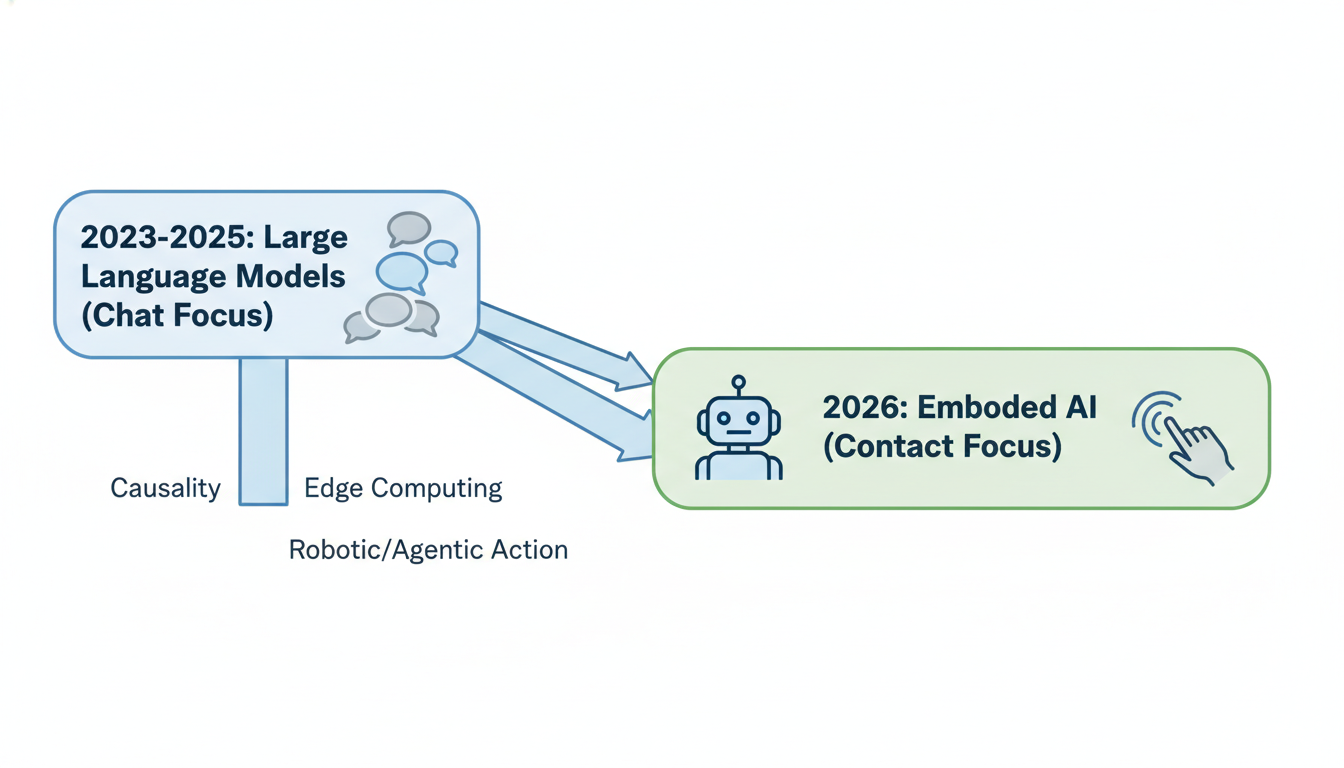

For the last three years, we have effectively been talking to a brain in a jar. A very smart, very eloquent brain, but a disembodied one. The research surfacing this week tells a different story. It tells the story of an industry that is done with "magic" and is now obsessed with **mechanics**. We are seeing a convergence of **Causality** (how the brain thinks), **Edge Computing** (where the brain lives), and **Robotic/Agentic Action** (what the brain does).

Let’s walk through what’s happening, because if my read is correct, we are moving from the era of *Chat* to the era of *Contact*.

1. The Death of Correlation (and the Return of "Why")

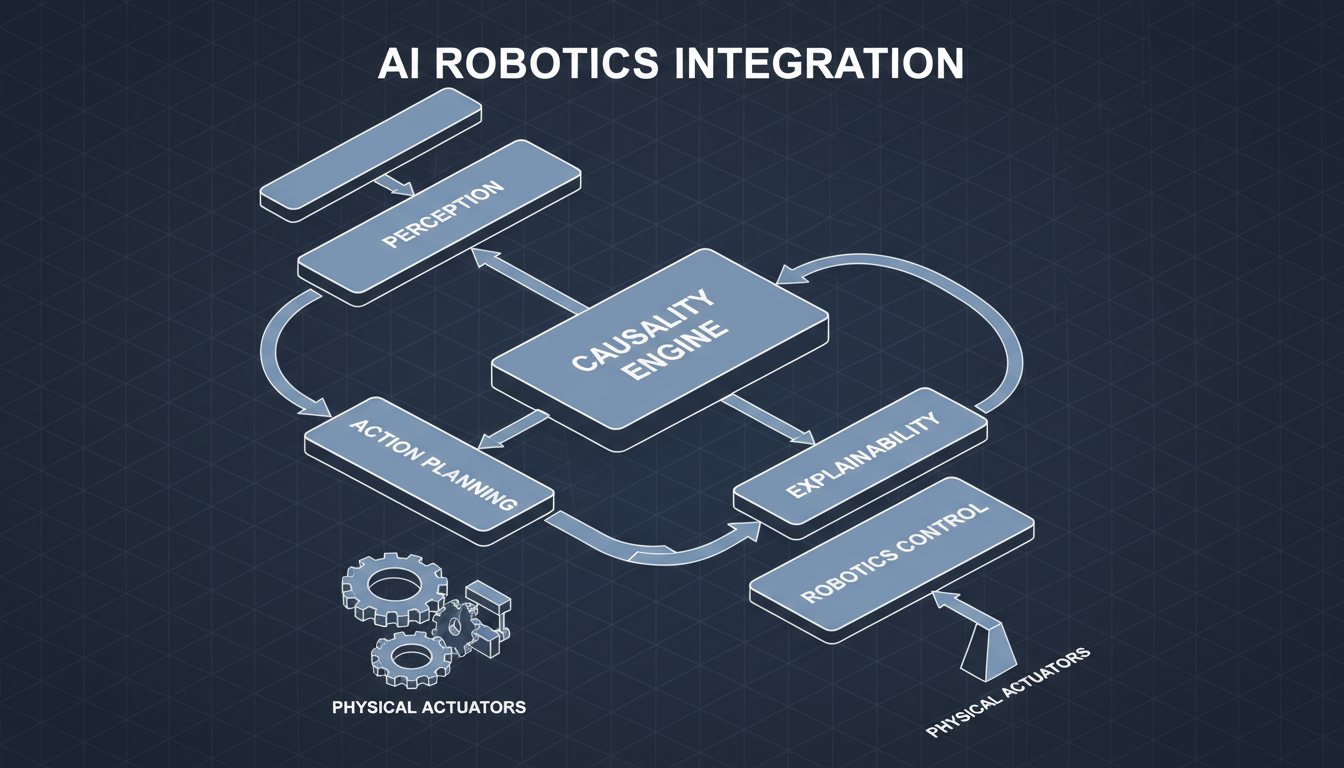

Let's start with the heavy hitter. The most significant signal in this week's research scan isn't a new transformer architecture; it's a renewed obsession with **Causality**.

One of the top sources circulating this week is Judea Pearl’s work on causality. Now, for the uninitiated, citing Pearl in 2026 is a bit like citing Newton in a physics class—it’s foundational. But the fact that it is trending *now* tells us something critical about the limits of current LLMs.

The Rooster Problem

To understand why this matters, we have to look at how Deep Learning (the tech behind ChatGPT, Gemini, etc.) actually works. At its core, it is a correlation machine. It sees patterns.

If a rooster crows every morning right before the sun comes up, a correlation machine learns: *Rooster Crow = Sunrise.* Taken at face value, that pattern says that if you want the sun to rise, you should make the rooster crow.

We humans know that’s ridiculous. The rooster doesn't *cause* the sunrise; the rotation of the earth causes both the sunrise and the rooster's biological clock to trigger. That is **Causality**.

Judea Pearl’s work argues that you cannot build truly intelligent systems based solely on correlation. You need a causal model—a mental map of "what causes what." The resurgence of this research suggests that we have hit a wall. We have realized that no matter how much text we feed an LLM, it will never understand *why* things happen just by reading about them. It needs a structural understanding of the world.
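Here is a minimal sketch of the rooster problem in code. It assumes a toy world I made up for illustration (hourly records, a hidden `dawn` variable, a rooster that crows at 95% of dawns); none of it comes from Pearl's own formalism beyond the basic idea of separating *observing* from *intervening*.

```python
import random

def simulate(days, force_crow=None, seed=0):
    """Toy world: dawn (Earth's rotation) drives both the crow and the sunrise.

    force_crow=None just observes the world. force_crow=True/False intervenes
    on the rooster, cutting its link to dawn (an intervention, in Pearl's terms).
    """
    rng = random.Random(seed)
    records = []
    for _ in range(days * 24):                      # one record per hour
        dawn = rng.random() < 1 / 24                # hidden common cause
        if force_crow is None:
            crow = dawn and rng.random() < 0.95     # rooster follows its clock
        else:
            crow = force_crow                       # we make (or stop) the crow
        sunrise = dawn                              # sunrise depends on dawn only
        records.append((crow, sunrise))
    return records

def p_sunrise_given_crow(records):
    """Share of crowing hours in which the sun also rose."""
    crowed = [sunrise for crow, sunrise in records if crow]
    return sum(crowed) / len(crowed)

def p_sunrise(records):
    """Share of all hours in which the sun rose."""
    return sum(sunrise for _, sunrise in records) / len(records)

observed = simulate(1000)
forced = simulate(1000, force_crow=True)

print("P(sunrise | crow observed):", p_sunrise_given_crow(observed))
print("P(sunrise | crow forced):  ", round(p_sunrise(forced), 3))
```

In the observed data the crow predicts the sunrise perfectly. Once we force the rooster to crow every hour, the sunrise rate falls back to its base rate of about one hour in 24. Same variables, very different answer, and only the causal model tells you which one to trust.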

Why This Matters in 2026

We are trying to deploy AI into high-stakes environments—healthcare, engineering, environmental management. In these fields, "hallucination" isn't a quirk; it's a liability. If an AI suggests a chemical formula because it "looks like" a stable compound (correlation) rather than because the molecular bonds actually hold (causality), things explode.

The industry is dusting off Pearl’s frameworks because we are trying to move from AI that *guesses* to AI that *knows*.

2. Leaving the Cloud: The Hardware Reality Check

If Causality is the mind, the next trend is about the body.

For a long time, "AI" was synonymous with "The Cloud." You send a request to a massive server farm in Oregon, it thinks for a second, and sends an answer back. But looking at the research on **Edge Intelligence** and **Efficient Processing** that surfaced this week, that model is changing fast.

The Energy Bill Comes Due

We are seeing a massive spike in interest in papers like *"Eyeriss"* (Chen et al.) and *"Efficient Processing of Deep Neural Networks"* (Sze et al.). These aren't about making models smarter; they are about making them *cheaper* and *smaller*.

Why? Because the "von Neumann bottleneck" is real. This is a technical term for the traffic jam that happens when you try to move data back and forth between memory and the processor. It turns out that moving data costs far more energy than actually doing the math: the Eyeriss work puts a single off-chip DRAM access at roughly 200 times the energy of one multiply-accumulate operation.

The *Eyeriss* research highlights a spatial architecture that minimizes this data movement. It’s the equivalent of organizing a kitchen so the chef doesn't have to walk to the pantry for every single onion.
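To see why the pantry trips dominate the bill, here is a back-of-the-envelope sketch. The relative costs are rough, normalized figures in the spirit of those reported in the Eyeriss line of work; the layer size, the three operand reads per multiply-accumulate, and the function name are my own assumptions for illustration.

```python
# Relative energy per operation, normalized to one multiply-accumulate (MAC).
# Illustrative assumptions, not measurements.
COST = {"mac": 1.0, "local_register": 1.0, "dram": 200.0}

def layer_energy(num_macs, operands_per_mac, dram_fraction):
    """Split a layer's energy into compute and data movement.

    dram_fraction is the share of operand reads that go off-chip to DRAM;
    the rest are served from registers next to the arithmetic unit.
    """
    reads = num_macs * operands_per_mac
    compute = num_macs * COST["mac"]
    movement = reads * (dram_fraction * COST["dram"]
                        + (1 - dram_fraction) * COST["local_register"])
    return compute, movement

for frac in (1.0, 0.1, 0.01):
    compute, movement = layer_energy(1_000_000, 3, frac)
    share = movement / (compute + movement)
    print(f"DRAM fraction {frac:>4}: data movement is {share:.1%} of energy")
```

Even when only 1% of reads go off-chip, data movement still outweighs the arithmetic in this toy model, which is the whole argument for keeping data close to where it is used.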

Edge Intelligence: The "Last Mile"

This links directly to the concept of **Edge Intelligence**. The paper by Zhou et al. (2019) on "Paving the Last Mile" is critical here.

Imagine a self-driving car. If a kid runs into the street, the car cannot afford to send a video frame to a server, wait for the server to process it, and receive a "brake!" command. The network round trip is too slow. The latency is fatal.

The intelligence has to live *on the edge*—literally in the car's chips. This is why we are seeing this obsession with hardware efficiency. We are trying to squeeze the massive brains of 2024 into the tiny, low-power chips of 2026. We are moving from "Big AI" in data centers to "Small AI" in everything else.
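To put rough numbers on that latency argument, here is a small sketch. The two latency values are hypothetical, picked only to show the shape of the problem; real figures depend on the network, the model, and the hardware.

```python
def distance_before_braking(speed_kmh, latency_ms):
    """Metres travelled while waiting latency_ms for a decision."""
    speed_m_per_s = speed_kmh * 1000 / 3600
    return speed_m_per_s * latency_ms / 1000

# Hypothetical latencies, chosen only for illustration.
scenarios = {"on-device inference": 20, "cloud round trip": 150}

for name, latency_ms in scenarios.items():
    metres = distance_before_braking(50, latency_ms)
    print(f"{name}: {metres:.2f} m travelled at 50 km/h")
```

Every extra millisecond of waiting is distance the car covers before it even starts to brake, so the decision has to be made where the sensor is.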

3. The Nervous System: 6G and the Internet of Intelligence

So we have a Causal Brain and an Efficient Body. How do they talk to each other?

This brings us to the research on **6G Wireless Communication Networks** (You et al., 2020). I know, I know—we just got used to 5G. But in the research world, 5G is ancient history.

The vision for 6G laid out in these papers isn't just about faster downloads for your movies. It’s about a paradigm shift where AI is native to the network itself. They call it the integration of "communication, sensing, and computing."

The Hive Mind

Think about it this way: In a 6G world, devices don't just send data; they share intelligence. Your phone, your car, and your smart glasses form a local, ad-hoc neural network to solve problems together.

The research envisions a world where the network itself adjusts to the task. If you're doing remote surgery (a classic, if terrifying, example), the network prioritizes latency above all else. If you're downloading a dataset, it prioritizes bandwidth. AI manages this orchestration.

This is the nervous system that connects the Edge Intelligence we just talked about.
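As a toy illustration of "the network adjusts to the task," here is a sketch of a link selector. Everything in it is hypothetical: the `Link` type, the task profiles, the weights, and the two network slices. The 6G papers describe learned, AI-driven orchestration; this is a hand-written scoring rule standing in for that idea.

```python
from dataclasses import dataclass

@dataclass
class Link:
    name: str
    latency_ms: float
    bandwidth_mbps: float

# Hypothetical task profiles: how much each task cares about each property.
PROFILES = {
    "remote_surgery": {"latency": 0.9, "bandwidth": 0.1},
    "dataset_download": {"latency": 0.1, "bandwidth": 0.9},
}

def pick_link(task, links):
    """Choose the link with the best weighted score for the task."""
    weights = PROFILES[task]
    best_latency = min(link.latency_ms for link in links)
    best_bandwidth = max(link.bandwidth_mbps for link in links)

    def score(link):
        # Both terms are normalized to (0, 1]; higher is better.
        latency_score = best_latency / link.latency_ms
        bandwidth_score = link.bandwidth_mbps / best_bandwidth
        return (weights["latency"] * latency_score
                + weights["bandwidth"] * bandwidth_score)

    return max(links, key=score)

links = [Link("low-latency slice", 1, 50), Link("bulk slice", 40, 5000)]
print(pick_link("remote_surgery", links).name)
print(pick_link("dataset_download", links).name)
```

The surgery task lands on the low-latency slice and the download lands on the bulk slice. Swap the fixed weights for a model that learns them from traffic and you have the rough shape of what the research is describing.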

4. The Conscience: Engineering Ethics

I’ve written about this before, but it bears repeating because the data keeps screaming it. The field is obsessed with **Ethics** and **Explainability (XAI)** right now.

The paper *"The Ethics of AI Ethics"* by Thilo Hagendorff is a particularly spicy read. It evaluates various ethics guidelines and essentially asks: "Do these actually do anything?" The finding is that guidelines often lack enforcement mechanisms. They are performative.

This contrasts with the technical work on **Explainable AI (XAI)** by Arrieta et al. (2019), which tries to solve the problem with code, not promises.

The "Black Box" Problem

Deep learning models are notorious black boxes. You put data in, you get an answer out, and nobody—not even the engineers—knows exactly how it got there.

XAI is the field dedicated to tearing open that box. It’s not enough for the AI to say "This patient has cancer." It needs to say "I believe this patient has cancer because of this specific irregularity in cell texture at coordinates X,Y on the scan."

This links back to **Causality**. You cannot have true explainability without causality. If the AI is just guessing based on correlation, its explanation will be nonsense. ("I diagnosed cancer because the scan was taken on a Tuesday.")

The research suggests we are moving toward a "Responsible AI" framework where explainability is a requirement for deployment, not a nice-to-have feature.
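One simple way to get the kind of "coordinates X,Y" explanation described above is occlusion: mask one region at a time and watch how much the model's score drops. Here is a minimal sketch. The `toy_model` is a hypothetical stand-in I wrote for illustration, not a real classifier, and occlusion is just one of many attribution techniques in the XAI literature.

```python
def toy_model(scan):
    """Stand-in classifier: the score rises with brightness in one small region.
    A real model would be a trained network; this is a hypothetical placeholder."""
    return scan[2][3] * 0.8 + scan[2][4] * 0.2

def occlusion_map(model, scan, fill=0.0):
    """Score drop when each pixel is masked; large drops mark influential pixels."""
    baseline = model(scan)
    heat = []
    for y, row in enumerate(scan):
        heat_row = []
        for x, _ in enumerate(row):
            masked = [r[:] for r in scan]   # copy so the original is untouched
            masked[y][x] = fill
            heat_row.append(baseline - model(masked))
        heat.append(heat_row)
    return heat

scan = [[0.1] * 6 for _ in range(5)]
scan[2][3] = 0.9                            # the "irregularity"
heat = occlusion_map(toy_model, scan)
drop, y, x = max((v, y, x) for y, row in enumerate(heat) for x, v in enumerate(row))
print(f"Most influential pixel: x={x}, y={y} (score drop {drop:.2f})")
```

Note what this does and does not give you. It tells you *where* the model was looking. Whether that region is the true cause of the diagnosis is a separate question, which is exactly why explainability and causality keep showing up together.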

5. The Destination: From Chatting to Curing

Finally, let's look at where all this infrastructure is pointing. The application papers trending this week are not about writing marketing copy or generating funny images. They are about hard science.

We are seeing deep dives into **Pharmaceutical R&D** (Kolluri et al., 2022) and **Environmental Management** (Sun & Scanlon, 2019).

The Rise of the Bio-Agent

Most notably, there is a very recent focus (2024) on **"Empowering biomedical discovery with AI agents."**

This connects perfectly to my post from January 11, *"The Simulation Is Loading."* We are moving from passive models to active **Agents**. In the biomedical context, an "agent" isn't just analyzing data. It is hypothesizing.

Imagine an AI agent that:

- Reads the existing literature (using NLP).

- Formulates a hypothesis about a protein structure (using Causality).

- Designs an experiment to test it.

- Maybe even runs the simulation or controls a robotic lab arm to do the mixing.

This is the "Scientific AI" loop. The guide to AI for cancer researchers (Pérez-López et al., 2024) reinforces this. It walks cancer researchers through how to use these tools in practice. We are crossing the chasm from "computer science experiment" to "standard medical tool."
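The read, hypothesize, design, run loop from the list above can be sketched in a few lines. Every function here is a hypothetical stub of my own, standing in for a real component (a literature search, a reasoning model, a simulator or robotic lab); the papers do not prescribe this structure.

```python
from dataclasses import dataclass, field

@dataclass
class LabNotebook:
    literature: list
    log: list = field(default_factory=list)

# Every function below is a hypothetical stub standing in for a real component.
def read_literature(notebook):
    """Keep only the papers relevant to the question (naive keyword match)."""
    return [paper for paper in notebook.literature if "protein" in paper.lower()]

def propose_hypothesis(findings):
    return f"Hypothesis drawn from {len(findings)} relevant papers"

def design_experiment(hypothesis):
    return {"hypothesis": hypothesis, "protocol": "simulated binding assay"}

def run_experiment(experiment):
    # A real agent might call a simulator or a robotic lab here.
    return {"experiment": experiment, "supported": None}  # outcome unknown in this stub

def agent_step(notebook):
    """One pass through the loop: read, hypothesize, design, run, record."""
    findings = read_literature(notebook)
    hypothesis = propose_hypothesis(findings)
    experiment = design_experiment(hypothesis)
    result = run_experiment(experiment)
    notebook.log.append(result)   # results feed the next round of reading
    return result

notebook = LabNotebook(["Protein folding survey", "Edge computing review"])
print(agent_step(notebook)["experiment"]["protocol"])
```

The interesting part is the last line of `agent_step`: the result goes back into the notebook, so the next pass starts from what the previous one learned. That feedback is what separates an agent from a model that answers once and stops.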

The Big Picture: The Integration Era

So, what happens when you put all these pieces together?

- **Causality** gives the AI the ability to reason, not just predict.

- **Hardware Efficiency** allows that intelligence to live in the real world, not just the cloud.

- **6G** connects these intelligent devices into a cohesive nervous system.

- **Ethics/XAI** provides the safety rails and trust required to let them operate.

- **Agents** allow them to actually *do work* in fields like medicine and environment.

We are witnessing the maturing of the stack.

A few years ago, AI was a party trick. It was magic. Now, it’s becoming engineering. It’s becoming boring, predictable, and physical.

And frankly? That’s when the real revolution starts.

When electricity was a magic trick used to make frogs' legs twitch, it was a novelty. When it became the boring infrastructure that ran the lights and the factories, it changed the world.

AI is currently installing its wiring.

***

*This analysis is based on a review of 80 research sources identified on January 25, 2026. As always, I’m Fumi, keeping my ear to the ground so you don't have to.*

Source Research Report

This article is based on Fumi's research into Last Week's Research: AI. You can read the full research report for more details, citations, and sources.
