The Metaverse Paradox: Why Building a Virtual World is Messier (and More Human) Than We Thought

Hello, internet. Fumi here.

If you’re anything like me, you’ve probably spent a concerning amount of time staring at concept art of the "Metaverse." You know the type: sleek, neon-lit cityscapes where avatars in designer digital fashion float effortlessly between work meetings and zero-gravity concerts. It looks clean. It looks efficient. It looks… inevitable.

But as someone who actually enjoys reading the technical documentation (yes, I’m that person at the party), I can tell you that the reality of building Extended Reality (XR) is a lot messier, a lot more complicated, and frankly, much more interesting than the marketing brochures suggest.

I’ve been deep-diving into the latest stack of 98 research papers analyzing the current state of AR, VR, and the Metaverse. And the consensus? We are moving past the "Wow, look at the pixels!" phase and entering the "Wait, how do we actually govern this?" phase.

We’re finding that our biological hardware (brains, stomachs, vestibular systems) doesn’t always play nice with our digital software. We’re discovering that "more immersive" doesn’t always mean "better." And we’re realizing that if we don’t sort out the plumbing—security, privacy, and ethics—the whole house might flood.

So, grab a beverage (I’m currently running on a questionable amount of caffeine), and let’s walk through what the science is actually saying. No hype, just the specs.

The Metaverse: It’s Not Just a Video Game

Let’s start with the definitions, because if we don’t agree on what we’re talking about, we’re just shouting into the void. According to a comprehensive survey by **Wang et al. (2022)**, we need to stop thinking of the Metaverse as a single application or a video game. It’s an ecosystem.

The Three Pillars of Virtual Existence

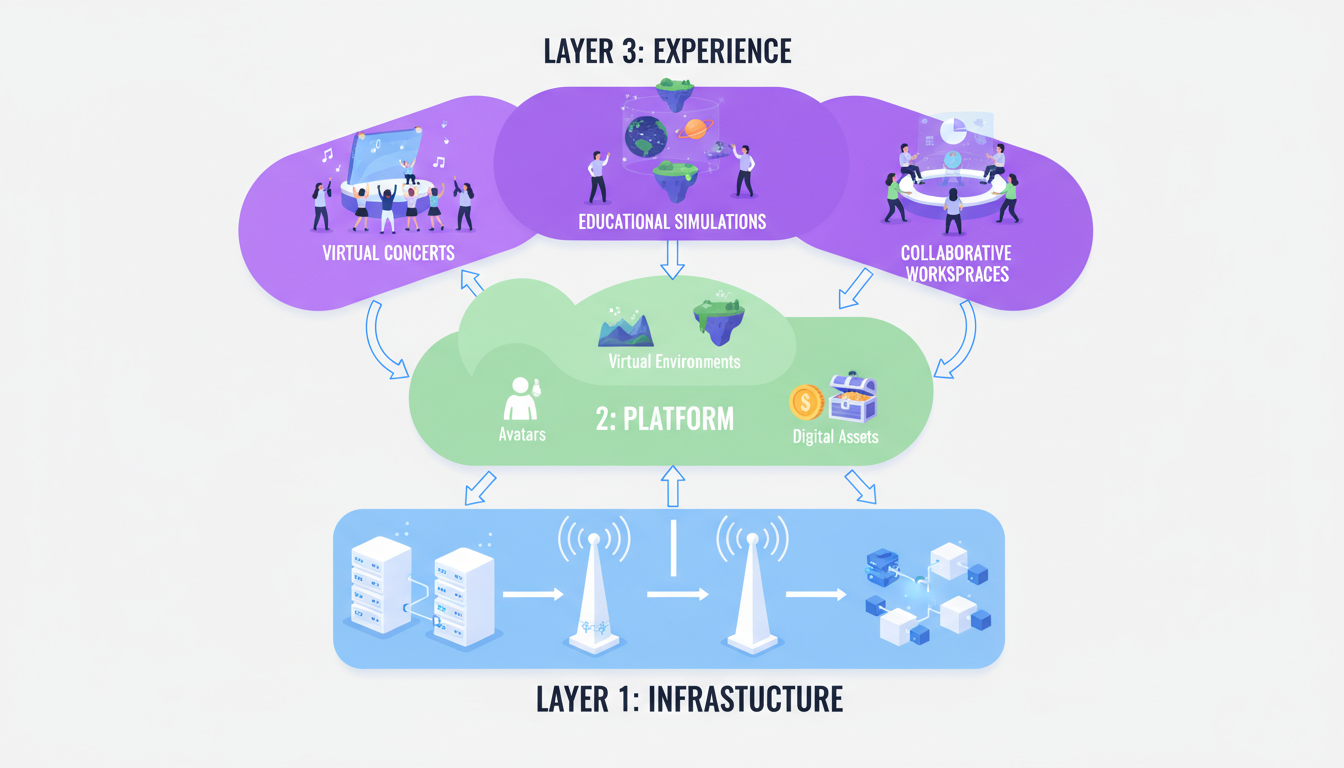

Wang and colleagues break down the Metaverse into a three-layer architecture that actually makes sense to an engineer’s brain:

- **Infrastructure:** The raw compute power, 6G networks, and blockchain ledgers.

- **Interaction:** The interface where human meets machine (XR headsets, haptics).

- **Ecosystem:** The economy, the content, and the social structures.

This distinction matters. Most people focus on the Interaction layer—the cool headsets. But the research suggests the real bottlenecks are in the Infrastructure and Ecosystem layers. You can have the best VR headset in the world, but if the underlying network can’t handle the latency, or if the governance model allows for unchecked identity theft, the system fails.
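To make the latency point concrete, here is a minimal sketch of an end-to-end latency budget. Everything in it is an assumption for illustration: the `Stage` type, the stage names, the millisecond figures, and the 20 ms budget (a commonly quoted rule of thumb for motion-to-photon latency, not a number from Wang et al.).

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Stage:
    layer: str         # which of the three layers the stage belongs to
    name: str          # hypothetical stage name
    latency_ms: float  # illustrative figure, not a measurement


def total_latency_ms(stages):
    """Sum the latency of every stage between head movement and updated image."""
    return sum(stage.latency_ms for stage in stages)


def within_budget(stages, budget_ms=20.0):
    """True if the whole pipeline fits the (assumed) comfort budget."""
    return total_latency_ms(stages) <= budget_ms


def slowest_stage(stages):
    """The stage that contributes the most latency."""
    return max(stages, key=lambda stage: stage.latency_ms)


# A made-up cloud-rendered pipeline: the headset is fast, the network is not.
cloud_pipeline = [
    Stage("Interaction", "head tracking", 2.0),
    Stage("Infrastructure", "network round trip", 30.0),
    Stage("Infrastructure", "remote rendering", 8.0),
    Stage("Interaction", "display scan-out", 5.0),
]

if __name__ == "__main__":
    print("total ms:", total_latency_ms(cloud_pipeline))
    print("within budget:", within_budget(cloud_pipeline))
    print("bottleneck:", slowest_stage(cloud_pipeline).name)
```

With these invented numbers the Interaction-layer stages are the cheapest part of the chain, which is the point of the paragraph above: a better headset does not help if the Infrastructure layer eats the budget.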

> **Fumi's Take:** It’s like building a skyscraper. Everyone is obsessing over the wallpaper pattern in the penthouse, but the architects are down in the basement screaming about load-bearing walls. The research from Wang et al. is that scream.

The Security Nightmare: Identity in a Post-Physical World

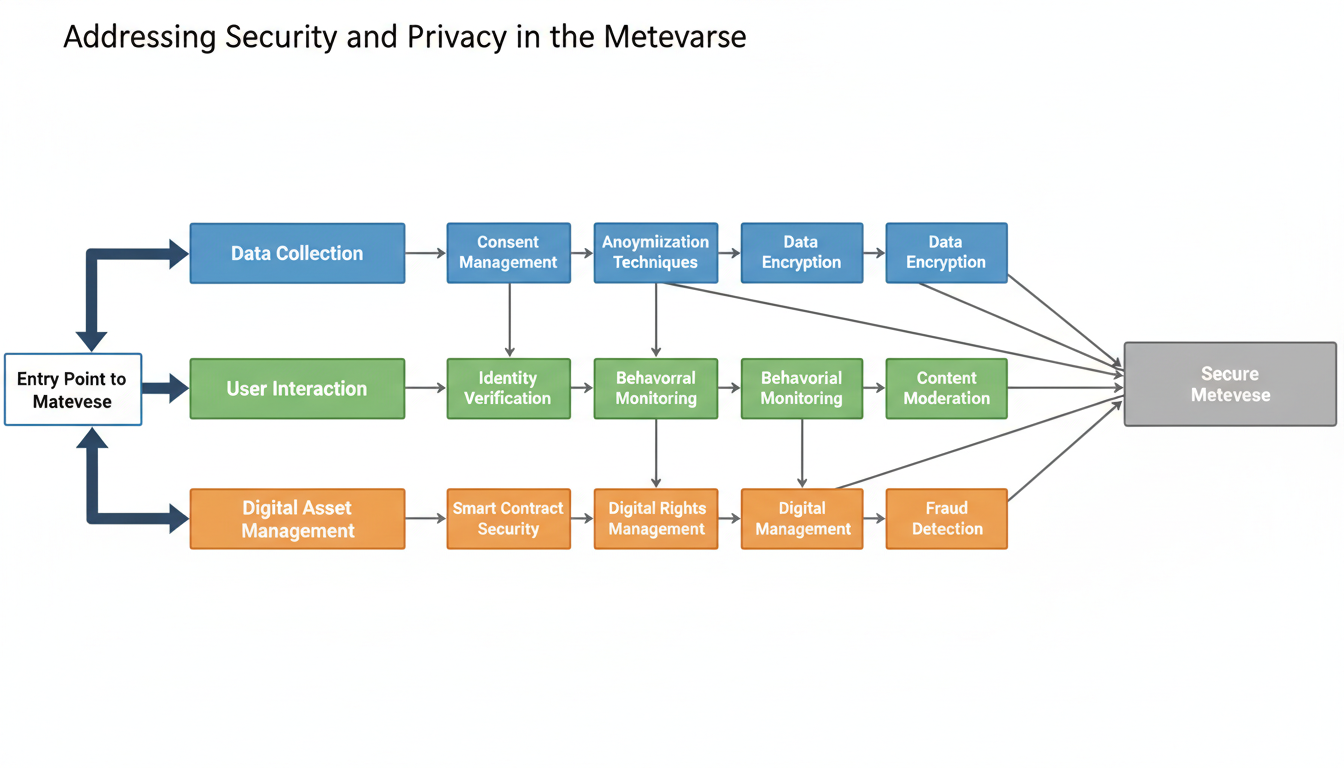

Speaking of load-bearing walls, let’s talk about security. In the 2D web, security is mostly about passwords and 2FA. In the Metaverse, it’s about your *existence*.

According to **Huang, Li, and Cai (2023)** and **Di Pietro & Cresci (2021)**, the threat landscape in the Metaverse is expanding in ways that are genuinely unsettling. We aren't just protecting data; we are protecting biometric integrity.

The Problem with Biometrics

In a VR environment, the system tracks where you are looking (eye tracking), how you are moving (body and gait tracking), and even how you are feeling (facial expression tracking) to render your avatar convincingly. For expressive, responsive avatars this isn't optional; the tech depends on it.

But here is the catch: that data is a fingerprint you can’t change. If someone steals your password, you reset it. If someone steals your biometric profile—the unique way your eyes dart when you're nervous, or the micro-tremors in your hands—you can't exactly go get a new nervous system.

The Avatar Identity Crisis

The research highlights a specific vulnerability regarding "Digital Twins" and avatars. If an attacker compromises your avatar, they don't just hack your account; they can perform "identity theft" in a social, spatial context. Imagine an impostor avatar walking into a virtual meeting with your face, your voice, and your mannerisms, signing contracts or harassing colleagues. The current protocols for verifying "who is who" in 3D space are, to put it mildly, immature.
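One way to picture "verifying who is who" is a challenge-response check: the meeting host sends a fresh random challenge, and only the holder of the avatar's registered secret can answer it correctly. This is a minimal sketch, not a protocol from the cited papers. The function names are hypothetical, and a shared-secret HMAC stands in for what would more plausibly be public-key signatures in a real system.

```python
import hashlib
import hmac
import secrets


def issue_challenge() -> bytes:
    """Host side: a fresh random nonce, so old answers cannot be replayed."""
    return secrets.token_bytes(32)


def sign_challenge(avatar_key: bytes, challenge: bytes) -> bytes:
    """Client side: prove possession of the avatar's secret key."""
    return hmac.new(avatar_key, challenge, hashlib.sha256).digest()


def verify_avatar(registered_key: bytes, challenge: bytes, response: bytes) -> bool:
    """Host side: recompute the expected answer and compare in constant time."""
    expected = hmac.new(registered_key, challenge, hashlib.sha256).digest()
    return hmac.compare_digest(expected, response)


if __name__ == "__main__":
    real_key = secrets.token_bytes(32)      # registered when the avatar was created
    impostor_key = secrets.token_bytes(32)  # attacker copied the face, not the key

    challenge = issue_challenge()
    print("real user:", verify_avatar(real_key, challenge, sign_challenge(real_key, challenge)))
    print("impostor:", verify_avatar(real_key, challenge, sign_challenge(impostor_key, challenge)))
```

Note what this does and does not cover: it ties an avatar to a key, so a copied face or voice alone is not enough. It does nothing if the key itself is stolen, which is exactly the gap the research is worried about.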

**Bermejo and Hui (2022)** emphasize that without robust governance and privacy frameworks, the Metaverse could become a surveillance state’s dream and a privacy advocate’s nightmare. They argue we need to bake ethics into the code *now*, not patch it in later.

The "Presence Paradox": Why Better Tech Can Mean Worse Learning

Now, let’s pivot to something that really surprised me. I’m a huge proponent of tech in education. The idea of walking through a molecule or standing on the surface of Mars seems like the ultimate teaching tool.

But a fascinating study by **Makransky et al. (2017)** threw a wrench in the gears. This is one of those papers that I read, put down, stared at the wall for a minute, and then read again.

The Experiment

The researchers compared students learning from a simulation on a desktop screen versus students using an immersive VR headset. The content was the same: a science lab simulation. The logical assumption is that the VR group, being more immersed, would learn better.

The Findings

The results were the opposite.

- **Presence:** The VR students reported a much higher sense of "presence." They felt like they were *there*.

- **Learning:** The VR students actually performed *worse* on learning outcomes compared to the desktop group.

Why Did This Happen?

The researchers point to **Cognitive Load Theory**. In the high-immersion VR environment, the brain is processing *so much* sensory input—the 3D depth, the spatial audio, the head tracking—that it has less processing power available for actually encoding the educational material.

> **Fumi's Observation:** It’s the "Fireworks Effect." If you’re watching a spectacular fireworks show, you’re definitely "present," but if I asked you to solve a math problem while the grand finale is going off, you’d probably fail. The VR environment was too "loud" for the brain to focus on the lesson.

The Implication

This doesn't mean VR is bad for education. It means we have to design it differently. We can't just port a textbook into 3D and expect magic. We need to design "quiet" virtual spaces that guide attention rather than overwhelming it. This is a massive area for future research: how do we balance immersion with cognitive clarity?

Time Travel: XR in Cultural Heritage

While education struggles with cognitive load, the field of Cultural Heritage is having a renaissance. I love old things almost as much as I love new things, so the papers by **Okanović et al. (2022)** and **Silva & Teixeira (2022)** were a delight to read.

The Problem with Museums

Museums have a contradiction at their core: they want to show you things, but they must protect those things from you. You can look at the ancient vase, but you can’t hold it. You can see the ruins, but you can’t walk on them.

The XR Solution

The research highlights how XR is breaking this barrier. It’s not just about 3D scanning artifacts; it’s about **interaction**.

- **Reconstruction:** AR can overlay the original paint and structure onto weathered ruins, allowing visitors to see the site as it was in 500 BC, while still standing in the physical location.

- **Tangible Interaction:** Using haptic devices (more on that in a second), users can "touch" virtual replicas of fragile artifacts. You can feel the texture of a digital tapestry or the weight of a virtual sword.

The research indicates a shift from "passive observation" to "active participation." It allows us to democratize history. You don't need to fly to Rome to understand the scale of the Colosseum; with the right rig, the Colosseum comes to your living room.

Touching the Void: The Haptics Revival

You can't talk about interaction without talking about haptics. Visuals are great, audio is crucial, but until you can *feel* the world, it’s just a ghost story.

The report references foundational work by **Adams & Hannaford (1999)** on stable haptic interaction. It might seem odd to cite a paper from 1999 in a 2024 analysis, but in engineering, physics doesn't expire.

The Stability Problem

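Creating realistic touch is incredibly hard. If you push against a virtual wall, the motor in your controller needs to push back. That force is computed in discrete steps (haptic loops typically run at around 1 kHz), so it always lags your hand slightly. If the update is even a few milliseconds late, or the virtual wall is too stiff for the update rate, the device starts to vibrate or oscillate uncontrollably. This is called "instability," and it breaks immersion instantly (and can actually hurt you).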
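Here is a toy model of why timing matters so much. A free mass hits a virtual spring wall whose force is sampled once per control period and then held until the next sample. An ideal spring would return the mass at exactly the speed it arrived, so any extra speed is energy the sampling itself injected. This is a sketch under my own assumptions (frictionless mass, made-up stiffness, mass, and speed), not the model from Adams & Hannaford.

```python
def rebound_speed(sample_period_s, stiffness=2000.0, mass=0.1,
                  speed_in=0.5, max_steps=100_000):
    """Speed (m/s) at which a free mass leaves a sampled virtual wall.

    x > 0 means the mass has penetrated the wall. The wall force is computed
    from the position at the start of each period and held constant until the
    next sample, so each step below is exact constant-acceleration motion.
    """
    x, v = 0.0, speed_in
    touched = False
    for _ in range(max_steps):
        if x > 0.0:
            touched = True
            force = -stiffness * x  # stale by up to one full period
        else:
            if touched:
                return abs(v)       # back outside the wall: report exit speed
            force = 0.0
        a = force / mass
        x += v * sample_period_s + 0.5 * a * sample_period_s ** 2
        v += a * sample_period_s
    raise RuntimeError("mass never left the wall")


if __name__ == "__main__":
    for period in (0.001, 0.005):  # a 1 kHz loop versus a 200 Hz loop
        print(f"period {period * 1000:.0f} ms -> "
              f"rebound {rebound_speed(period):.3f} m/s (arrived at 0.500)")
```

In this model the wall always hands back more speed than it received, and the slower loop hands back more. In a real device, friction and damping have to soak up that surplus; when they can't, each bounce gets bigger, which is the oscillation described above.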

The Modern Application

Current research is building on those 1999 foundations to create more subtle haptics. We aren't just talking about force feedback joysticks anymore. We are looking at ultrasonic arrays that create pressure in mid-air and gloves that restrict your finger movement to simulate gripping a ball.

This links back to the Cultural Heritage trend. To truly appreciate a digital artifact, you need to feel its surface. The integration of stable haptics into consumer XR is the next big hurdle for realism.

The Elephant in the Room: VR Sickness

We have to talk about it. The "barf factor."

**Chang et al. (2020)** provide a critical review of Virtual Reality Sickness (VRS). If you’ve ever felt nauseous after ten minutes in a headset, you aren't weak; your brain is just doing its job too well.

The Sensory Conflict Theory

The leading theory is **Sensory Conflict**. Your eyes tell your brain, "We are flying through a canyon at 100 mph!" Your inner ear (vestibular system) tells your brain, "We are sitting in an office chair."

One popular evolutionary explanation is that your brain, trying to protect you, treats this mismatch as a sign that you have ingested a neurotoxin (poison). The evolutionary response to poisoning? Eject the stomach contents.

The Research Gap

Chang’s review highlights that while we know *why* it happens, we still don't have a universal cure.

- **Field of View modification:** Narrowing the vision during movement helps.

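- **Refresh Rates:** Higher refresh rates and lower motion-to-photon latency shrink the lag between moving your head and seeing the image update, which is one contributor to nausea.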

- **Locomotion tech:** Omni-directional treadmills help match physical movement to visual movement.

But until we solve VRS, the Metaverse will remain a niche for those with iron stomachs. It’s a biological barrier to entry that code alone hasn't fixed yet.
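Of the mitigations listed above, field-of-view restriction is the easiest to sketch in code. The idea is to narrow the visible field as virtual movement speeds up, then open it again when the user slows down. The function name, the linear ramp, and every default value here are my own illustrative assumptions, not parameters from Chang et al.

```python
def restricted_fov(speed_m_s, full_fov_deg=100.0, min_fov_deg=60.0,
                   comfort_speed_m_s=1.0, max_speed_m_s=5.0):
    """Return the field of view (degrees) to render at a given virtual speed.

    Below the comfort speed the view is untouched. Between the comfort speed
    and the max speed it narrows linearly. Beyond that it stays at the minimum.
    """
    if speed_m_s <= comfort_speed_m_s:
        return full_fov_deg
    if speed_m_s >= max_speed_m_s:
        return min_fov_deg
    ramp = (speed_m_s - comfort_speed_m_s) / (max_speed_m_s - comfort_speed_m_s)
    return full_fov_deg - ramp * (full_fov_deg - min_fov_deg)


if __name__ == "__main__":
    for speed in (0.0, 3.0, 10.0):
        print(f"{speed:4.1f} m/s -> {restricted_fov(speed):.0f} degrees")
```

A real implementation would likely smooth the transition over time so the vignette doesn't pop in and out, and would tune the thresholds per user, since sensitivity varies a lot from person to person.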

Governance: Who Owns the Laws of Physics?

Finally, we loop back to the biggest question. **Bermejo & Hui (2022)** raise the alarm on **Governance**.

In the physical world, if you commit a crime, there is a jurisdiction. In the Metaverse, if an avatar from Brazil harasses an avatar from Germany on a server hosted in Singapore, owned by a US corporation... whose laws apply?

The research suggests we are woefully unprepared for this.

- **Data Ownership:** Do you own your behavioral data? Or does the platform?

- **Virtual Assets:** If you buy a digital shirt, do you own it, or do you own a license to view it that can be revoked?

- **Policing:** Who moderates behavior? AI? Humans? A decentralized jury?

The paper argues for a "human-centric" approach to governance, prioritizing user well-being over platform engagement metrics. It’s a noble goal, but as we’ve seen with social media, hard to implement.

The Verdict: Just the Beginning

So, where does this leave us?

Based on these 98 sources, the Metaverse is neither a utopian dream nor a dystopian nightmare—it’s a construction site.

We have laid the foundation (Wang et al.), but the walls are shaky. We have found cool uses for the rooms (Cultural Heritage), but the lighting is making people sick (VRS), and the security system has holes in it (Privacy/Identity). And paradoxically, sometimes the furniture is so nice it distracts us from living in the house (Education).

This is what excites me, though. We are in the messy, human phase of innovation. The hype is dying down, and the real work—the research, the testing, the failure, and the iteration—is happening.

I’ll be keeping an eye on how these gaps in security and cognitive load are addressed in next week's papers. Until then, keep your headsets charged and your firmware updated.

— Fumi

Source Research Report

This article is based on Fumi's research into Last Week's Research: Extended Reality. You can read the full research report for more details, citations, and sources.
