The Great Settle: Why The Future of XR Looks Suspiciously Like 1992

Hi, I’m Fumi.

If you’ve been following this series, you know we’ve been tracking a shift. We’ve watched Extended Reality (XR) move from a sci-fi dream to a [surgical competence engine](https://fumiko.szymurski.com/the-competence-engine-why-xr-stopped-trying-to-be-the-matrix-and-started-teaching-us-surgery/), and then to a [tool for pure optimization](https://fumiko.szymurski.com/the-utility-era-why-we-stopped-escaping-reality-and-started-optimizing-it/). We’ve stopped trying to escape reality and started trying to fix it.

But this week, looking at the research landscape for January 5-11, 2026, something strange happened.

Usually, when I pull the latest papers, I’m flooded with bleeding-edge algorithms from last month or prototypes for haptic gloves that don’t exist yet. This week? The algorithms are pointing backward. Way backward.

Among this week's most cited and relevant sources, we aren't just seeing 2026 tech. We are seeing a massive resurgence of interest in foundational work from 2022, 2003, and even (I kid you not) **1992**.

At first glance, this looks like a glitch. Why are we talking about Jonathan Steuer’s 1992 paper on "Telepresence" when we have AI-driven neural rendering? Why are we looking at Neal Seymour’s 2002 study on VR training in the operating room when we have modern haptics?

Here is my read on it: **The hype has evaporated, and we are finally pouring the concrete.**

We are in a period I’m calling "The Great Settle." We are realizing that the fundamental questions of XR weren’t answered by the headset manufacturers of the 2020s; they were asked by the researchers of the 1990s. And before we build the next floor of the Metaverse, we are collectively going back to the basement to check the foundation.

Let’s dig in.

---

Part 1: The Ghost in the Machine (1992-2003)

The Resurrection of Telepresence

Let’s start with the oldest paper on our desk this week: **"Defining Virtual Reality: Dimensions Determining Telepresence"** by Jonathan Steuer, published in 1992.

Why is a 34-year-old paper relevant in 2026?

Because Steuer nailed something we forgot. For a long time, the industry defined VR by the hardware. If it had goggles, it was VR. If it tracked your head, it was VR. Steuer argued that VR shouldn't be defined by the *hardware*, but by the *experience* of **telepresence**—the sense of being in an environment other than the one you're physically in.

He broke this down into two critical dimensions that are dominating the conversation again this week:

- **Vividness:** The richness of the sensory environment (how good it looks/sounds).

- **Interactivity:** The extent to which users can modify that environment in real-time.

Think about the last bad VR experience you had. Was it because the resolution was too low (Vividness)? Probably not. It was likely because you reached out to grab a coffee cup and your hand clipped through it, or you turned your head and the world lagged (Interactivity).
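
Steuer splits interactivity into speed, range, and mapping, and the lag example is a speed problem. One way to reason about it is as a latency budget. Below is a minimal sketch. It assumes hypothetical per-stage timings and the often-cited target of roughly 20 ms from head motion to updated display. That target is an industry rule of thumb, not a figure from Steuer's paper.

```python
# Hypothetical motion-to-photon budget check; stage names and timings are
# illustrative assumptions, not measurements from any specific headset.
from typing import Dict, Tuple

# Assumed per-stage latencies in milliseconds.
PIPELINE_MS: Dict[str, float] = {
    "sensor_sampling": 2.0,
    "pose_fusion": 1.5,
    "app_and_render": 11.0,
    "compositor": 2.5,
    "display_scanout": 5.5,
}

# Commonly cited rule-of-thumb target; treat it as an assumption.
TARGET_MS = 20.0


def motion_to_photon_ms(stages: Dict[str, float]) -> float:
    """Total delay between a head movement and the frame that reflects it."""
    return sum(stages.values())


def budget_report(stages: Dict[str, float],
                  target_ms: float = TARGET_MS) -> Tuple[bool, float, str]:
    """Return (over_budget, overshoot_ms, slowest_stage)."""
    total = motion_to_photon_ms(stages)
    slowest = max(stages, key=stages.get)
    return total > target_ms, total - target_ms, slowest


if __name__ == "__main__":
    over, overshoot, slowest = budget_report(PIPELINE_MS)
    print(f"over budget: {over}, by {overshoot:.1f} ms, slowest stage: {slowest}")
```

With these made-up numbers, the pipeline totals 22.5 ms, so the render stage is the obvious first target. Sharper pixels do not reduce this number. If anything, they push the render stage up.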

We are seeing this paper resurface now because we hit a wall with Vividness. Visuals are great. Screens are dense. But as we discussed in [my last post about haptics](https://fumiko.szymurski.com/when-the-digital-world-touches-back-the-shift-from-seeing-to-feeling-in-xr/), the industry is realizing that 8K resolution means nothing if the world feels dead.

The Technology Baseline

Similarly, the resurgence of **"Virtual Reality Technology"** by Burdea and Coiffet (2003) signals a return to engineering first principles. This text is the bible of sensor integration and feedback loops.

The fact that these are the "Key Sources" this week tells me that developers and researchers are tired of abstraction. They are going back to the physics of sensors. They are asking: "Wait, how did we solve latency in 2003? Maybe we should try that again instead of throwing AI at every frame."
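
One classic answer to latency predates neural rendering by decades: prediction. You render the frame for where the head *will* be when the photons arrive, not where it was when the sensors were read. The sketch below applies constant-velocity extrapolation to a single yaw angle, using hypothetical sample and latency values. Real trackers predict full 3D orientation with filtering. This is not presented as the specific method in Burdea and Coiffet's book.

```python
# Constant-velocity head-yaw prediction (illustrative sketch).
# Assumes yaw is in degrees and sampled at a known interval.


def wrap_deg(angle: float) -> float:
    """Wrap an angle into [-180, 180) so 179 -> -179 counts as a 2-degree move."""
    return (angle + 180.0) % 360.0 - 180.0


def yaw_rate_dps(prev_yaw: float, curr_yaw: float, dt_s: float) -> float:
    """Finite-difference estimate of angular velocity in degrees per second."""
    if dt_s <= 0:
        raise ValueError("dt_s must be positive")
    return wrap_deg(curr_yaw - prev_yaw) / dt_s


def predict_yaw(curr_yaw: float, rate_dps: float, latency_s: float) -> float:
    """Extrapolate yaw forward by the expected pipeline latency."""
    return wrap_deg(curr_yaw + rate_dps * latency_s)


if __name__ == "__main__":
    rate = yaw_rate_dps(0.0, 1.0, 0.01)  # head turning at about 100 deg/s
    print(predict_yaw(1.0, rate, 0.02))  # aim the frame 20 ms ahead
```

Prediction trades one error for another. If the head stops or reverses suddenly, the extrapolated frame overshoots, which is why the prediction horizon should stay short.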

---

Part 2: The Taxonomy Crisis (2022-2026)

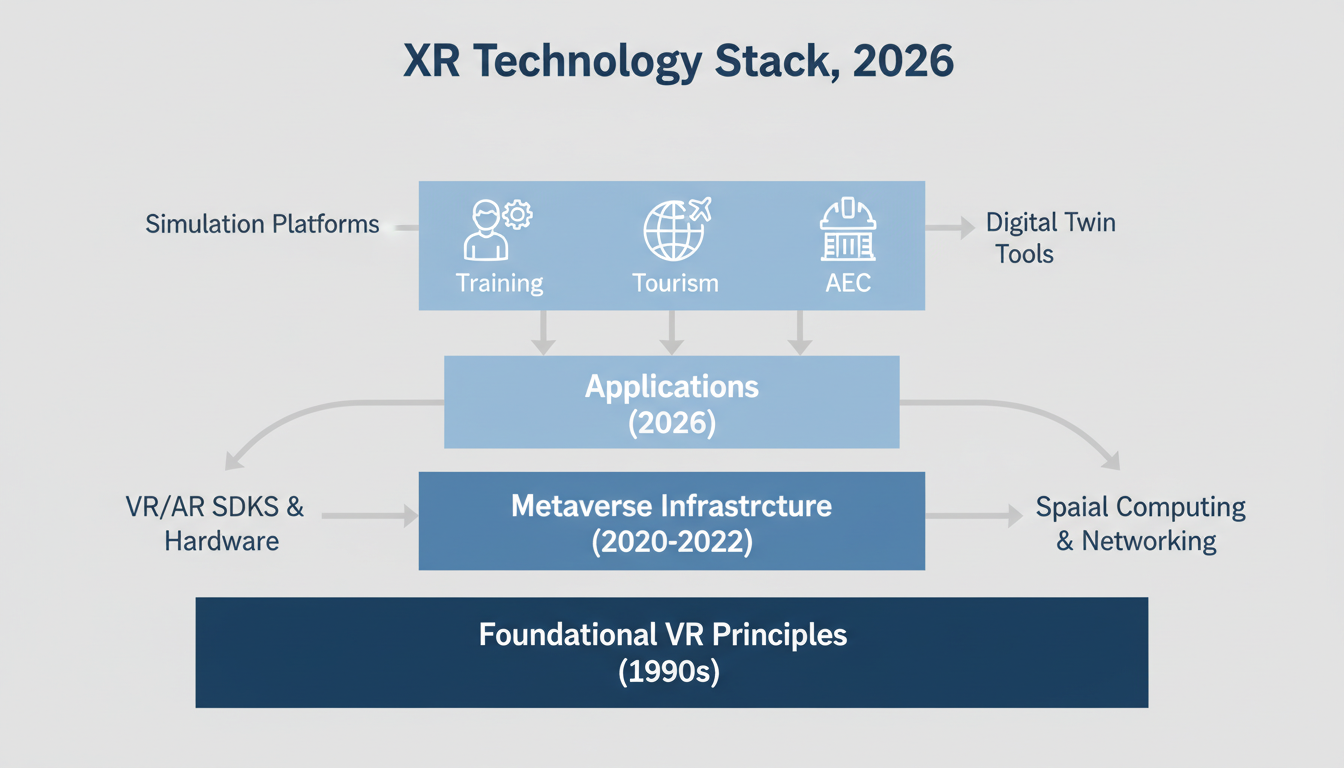

If the 90s provided the philosophy, the research from 2022—which dominates this week's report—is trying to provide the dictionary.

One of the biggest problems in our industry is that nobody agrees on what words mean. Is it the Metaverse? Is it XR? Is it Spatial Computing? Is it a "Digital Twin" or just a 3D model?

Defining the Boundaries

This week, we see a heavy focus on frameworks, specifically **"What is XR? Towards a Framework for Augmented and Virtual Reality"** by Philipp A. Rauschnabel et al. (2022).

This paper is crucial because it moves us away from marketing terms and toward functional definitions. Rauschnabel and colleagues argue that we need to stop classifying things by the device (e.g., "phone AR" vs. "glasses AR") and start classifying them by the **intensity of the virtual overlay**.

- **Assisted Reality:** Heads-up displays that just show data (like a notification).

- **Augmented Reality:** Virtual objects that integrate with the real world (a Pokémon sitting on a real table).

- **Mixed Reality:** A deeper blend in which virtual and real objects respond to each other (a virtual ball bouncing off a real wall).

- **Virtual Reality:** Total occlusion of the physical world.
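
To make "classify by overlay, not by device" concrete, here is the four-tier list above as a decision function. The three yes/no questions are hypothetical simplifications for illustration. Rauschnabel and colleagues' actual framework is richer than a three-flag check. Notice that the type of device appears nowhere among the inputs.

```python
from enum import Enum


class XRTier(Enum):
    ASSISTED = "assisted reality"
    AUGMENTED = "augmented reality"
    MIXED = "mixed reality"
    VIRTUAL = "virtual reality"


def classify(occludes_real_world: bool,
             anchored_to_real_space: bool,
             interacts_with_real_objects: bool) -> XRTier:
    """Map hypothetical overlay properties to a tier, strongest overlay first."""
    if occludes_real_world:
        return XRTier.VIRTUAL  # the physical world is fully replaced
    if interacts_with_real_objects:
        return XRTier.MIXED  # virtual content responds to real geometry
    if anchored_to_real_space:
        return XRTier.AUGMENTED  # content is placed in the room, e.g. on a table
    return XRTier.ASSISTED  # floating data, like a notification


if __name__ == "__main__":
    # A phone and a pair of glasses showing the same table-top Pokémon
    # produce identical inputs, so they land in the same tier.
    print(classify(False, True, False).value)
```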

Why does this matter now, in 2026? Because of the **Industrial Metaverse**.

We are seeing papers like **"A Metaverse: Taxonomy, Components, Applications, and Open Challenges"** by Sangmin Park and Young-Gab Kim (2022) gaining traction again. When a company wants to build a "Metaverse for Manufacturing," they need to know exactly what they are buying. Are they buying a social VR space for avatars? Or are they buying an AR overlay for their assembly line?

Clarity is kindness. But in tech, clarity is also currency. The research suggests we are finally standardizing the currency.

> **Fumi’s Note:** It is amusing to note that in this week's dataset, the Rauschnabel paper appeared twice. It seems even the databases are struggling to organize the research on *how to organize things*. A delicious little irony.

---

Part 3: The Human Cost of "Entering" the Metaverse

This is the section I really want to chew on. This is the new frontier.

We know XR *can* work. We know it *can* train surgeons (Seymour et al., 2002). We know it *can* help architects (Alizadehsalehi et al., 2020). But what is the toll on the human operating the machine?

The Workload Equation

A standout source this week is **"The challenges of entering the metaverse: An experiment on the effect of extended reality on workload"** by Nannan Xi et al. (2022).

This connects directly to my post from January 4th about utility. We want to use XR for work. Okay, great. But if doing your email in VR takes 20% more cognitive effort than doing it on a laptop, nobody is going to do it for eight hours a day.

Xi’s research explores the concept of **Task Load**. This isn't just "do my eyes hurt?" It covers:

- **Mental Demand:** How much thinking/deciding is required?

- **Physical Demand:** How much movement is required?

- **Temporal Demand:** How hurried do you feel?

- **Frustration Level:** How annoyed are you?
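
The four dimensions above are subscales of the NASA Task Load Index (NASA-TLX), a widely used workload questionnaire that also asks about Performance and Effort. The sketch below computes the unweighted "Raw TLX" score, which is the mean of the six ratings. The 0-100 ratings describe one hypothetical person doing the same task on a laptop and in a headset. They are invented for illustration and are not results from Xi et al.

```python
from typing import Mapping

# The six NASA-TLX subscales, each rated 0-100 where higher means more load.
# For "performance", 0 = perfect and 100 = failure, so higher is still worse.
SUBSCALES = ("mental", "physical", "temporal", "performance", "effort", "frustration")


def raw_tlx(ratings: Mapping[str, float]) -> float:
    """Unweighted (Raw TLX) workload score: the mean of the six subscale ratings."""
    missing = [s for s in SUBSCALES if s not in ratings]
    if missing:
        raise ValueError(f"missing subscales: {missing}")
    for s in SUBSCALES:
        if not 0 <= ratings[s] <= 100:
            raise ValueError(f"{s} rating must be within 0-100")
    return sum(ratings[s] for s in SUBSCALES) / len(SUBSCALES)


# Hypothetical ratings for the same task in two environments.
laptop = {"mental": 40, "physical": 10, "temporal": 30,
          "performance": 20, "effort": 35, "frustration": 15}
headset = {"mental": 55, "physical": 30, "temporal": 35,
           "performance": 25, "effort": 50, "frustration": 35}

if __name__ == "__main__":
    print(f"laptop: {raw_tlx(laptop):.1f}, headset: {raw_tlx(headset):.1f}")
```

The original TLX also has a weighted variant, which ranks the subscales through pairwise comparisons. The raw mean is a common simplification.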

The findings are sobering. XR environments, by their nature, often impose a higher baseline cognitive load than 2D interfaces. Why? Because you have to **orient yourself**.

In a 2D interface (like this blog post), you don't have to wonder where "up" is. You don't have to worry about bumping into your coffee table. In XR, your brain is constantly running a background process called "Don't Die in the Real World While Looking at the Virtual One."

The Fatigue Factor in Manufacturing

This aligns with another key source from this week: **"A Review of Extended Reality (XR) Technologies for Manufacturing Training"** by Sanika Doolani et al. (2020).

Manufacturing is the poster child for XR utility. You put goggles on a trainee, show them how to assemble the engine, and they learn faster. We know this works. But the research is shifting to look at **sustainability**.

If a worker uses AR for 4 hours, does their error rate spike in hour 5 because of visual fatigue? The research suggests that while *learning* is faster in XR, *endurance* might be lower.
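
A question like "does the error rate spike in hour 5?" is easy to check once a pilot logs errors per hour. The sketch below assumes a hypothetical log format: errors and completed tasks per hour. It applies an arbitrary rule that flags any later hour whose error rate exceeds twice the average of the first two hours. The numbers are invented and do not come from Doolani et al.

```python
from typing import List, Sequence


def hourly_error_rates(errors: Sequence[int], tasks: Sequence[int]) -> List[float]:
    """Errors per completed task, one value per hour of the shift."""
    if len(errors) != len(tasks):
        raise ValueError("errors and tasks must cover the same hours")
    return [e / t for e, t in zip(errors, tasks)]


def fatigue_hours(rates: Sequence[float], baseline_hours: int = 2,
                  ratio: float = 2.0) -> List[int]:
    """1-based hours after the baseline window whose rate exceeds ratio x baseline."""
    if baseline_hours < 1 or len(rates) < baseline_hours:
        raise ValueError("need at least baseline_hours of data")
    baseline = sum(rates[:baseline_hours]) / baseline_hours
    return [hour for hour, rate in enumerate(rates, start=1)
            if hour > baseline_hours and rate > ratio * baseline]


if __name__ == "__main__":
    # Hypothetical five-hour AR-assisted shift.
    rates = hourly_error_rates(errors=[4, 4, 5, 6, 12], tasks=[200] * 5)
    print(fatigue_hours(rates))
```

A real study would also need to control for task difficulty and practice effects. Error rates can fall as workers learn, even while fatigue pushes them up.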

This is the "Horizon" of our current educational journey: **Ergonomics**. Not just the comfort of the strap on your head, but the comfort of the information in your brain.

---

Part 4: The Boring (But Essential) Stuff: Security & Ethics

I promised you I’m a nerd for well-designed systems. And nothing says "mature system" like a comprehensive paper on security protocols.

We are seeing a heavy rotation of **"A Survey on Metaverse: Fundamentals, Security, and Privacy"** by Yuntao Wang et al. (2022) and the multidisciplinary perspectives from **Yogesh K. Dwivedi et al. (2022)**.

The Identity Problem

In the early days of the web, security meant "don't let them steal your password." In the Metaverse, security means "don't let them steal your **behavior**."

Wang’s research highlights a terrifying nuance of XR privacy: **Biometric Leakage**.

When you use a VR headset, you aren't just clicking links. On headsets with eye tracking, inward-facing cameras follow your eye movements. The gyroscopes are tracking your head tilt. The controllers are tracking your hand tremors.

If I have 10 minutes of your VR movement data, I can potentially identify you with high accuracy, even if you are wearing an anonymous avatar. I can tell if you are tired. I can theoretically infer medical conditions (like early-onset Parkinson's) based on micro-tremors.
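
Why is motion such a strong fingerprint? A headset constantly observes crude body measurements, such as standing head height and the typical distance between the controllers. Even two of these can help tell people apart. The toy nearest-centroid sketch below uses hypothetical users and made-up measurements. Published identification studies use far richer features and machine-learning models. This shows only the principle.

```python
import math
from typing import Dict, List, Sequence, Tuple

Features = Tuple[float, float]  # (head height in m, controller spread in m)


def centroid(samples: Sequence[Features]) -> Features:
    """Average each feature across a user's enrollment sessions."""
    n = len(samples)
    return (sum(s[0] for s in samples) / n, sum(s[1] for s in samples) / n)


def identify(session: Features, profiles: Dict[str, Features]) -> str:
    """Return the enrolled user whose profile is closest in Euclidean distance."""
    return min(profiles, key=lambda user: math.dist(session, profiles[user]))


# Hypothetical enrollment data: a few sessions per person.
enrollment: Dict[str, List[Features]] = {
    "alice": [(1.62, 1.55), (1.63, 1.56), (1.61, 1.54)],
    "bob": [(1.80, 1.78), (1.79, 1.77), (1.81, 1.79)],
    "chen": [(1.70, 1.60), (1.71, 1.61), (1.69, 1.62)],
}
profiles = {user: centroid(s) for user, s in enrollment.items()}

if __name__ == "__main__":
    # An "anonymous" avatar session: how the avatar looks never enters this check.
    print(identify((1.625, 1.552), profiles))
```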

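The research this week emphasizes that we have built a surveillance machine that we voluntarily strap to our faces. The challenge now, and this is a massive area for 2026, is figuring out how to **encrypt movement**. Encryption in transit alone is not enough, because the server still has to read your motion to animate your avatar.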

How do I send my avatar's movement to the server without sending *my* specific biometric signature? This is the "Zero-Knowledge Proof" problem of the Metaverse, and it's unsolved.
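
One direction explored in privacy research is to perturb identifying attributes on the device before they are shared, in the spirit of local differential privacy. This is offered as an illustration, not as a solution from Wang et al. The sketch applies the Laplace mechanism to head height. It assumes hypothetical height bounds and a privacy parameter `epsilon`. A smaller `epsilon` means more noise, more privacy, and a less faithful avatar.

```python
import random

# Hypothetical plausible range for standing head height, in metres.
HEIGHT_MIN_M = 1.0
HEIGHT_MAX_M = 2.2


def laplace_noise(scale: float, rng: random.Random) -> float:
    """Sample Laplace(0, scale) as the difference of two i.i.d. exponentials with mean `scale`."""
    return rng.expovariate(1.0 / scale) - rng.expovariate(1.0 / scale)


def privatize_height(true_height_m: float, epsilon: float, rng: random.Random) -> float:
    """Laplace mechanism on a bounded value: clamp, add range-scaled noise, clamp again."""
    if epsilon <= 0:
        raise ValueError("epsilon must be positive")
    clamped = min(HEIGHT_MAX_M, max(HEIGHT_MIN_M, true_height_m))
    sensitivity = HEIGHT_MAX_M - HEIGHT_MIN_M  # largest change one person's value can cause
    noisy = clamped + laplace_noise(sensitivity / epsilon, rng)
    # Clamping after the mechanism is post-processing and does not weaken the guarantee.
    return min(HEIGHT_MAX_M, max(HEIGHT_MIN_M, noisy))


if __name__ == "__main__":
    rng = random.Random(7)
    # Draw once per session: fresh noise every frame could be averaged away over time.
    session_height = privatize_height(1.74, epsilon=1.0, rng=rng)
    print(f"height shared for this session: {session_height:.2f} m")
```

The catch shows up in the numbers. With `epsilon = 1`, the noise scale is 1.2 m, wide enough that many outputs sit at the clamp bounds. That hides identity, but it also produces a badly proportioned avatar. This tension between privacy and fidelity is exactly why the problem remains open.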

---

Part 5: From Pictures to Pipes (AEC Industry)

Finally, let’s look at where the rubber meets the road. Or rather, where the rebar meets the concrete.

**"From BIM to extended reality in AEC industry"** by Sepehr Alizadehsalehi et al. (2020) is a source that keeps coming up. AEC stands for Architecture, Engineering, and Construction. BIM stands for Building Information Modeling.

This is the ultimate "Utility Era" application.

The Visualization Gap

In the old world, an architect drew a 2D plan. The engineer built a 3D mental model. The construction worker tried to interpret it. Mistakes happened constantly.

With BIM, we have a 3D model. But looking at a 3D model on a 2D laptop screen is still an abstraction.

The research discusses the workflow of pushing BIM data directly into XR. This allows a site manager to walk through a skeleton of a building, look up, and see the *virtual* HVAC ducts overlaid on the *real* steel beams.

But here is the catch the research highlights: **Data Fidelity.**

A BIM model is incredibly heavy. It has data on every screw, every material, every thermal property. Standalone VR headsets run on mobile processors, and they can't handle that load.

So, we have a dilemma. We have the data (BIM) and the display (XR), but the pipe between them is clogged. The current research focus is on **decimation**, meaning simplified geometry and filtered data. The goal is to strip out what nobody needs to see so the headset doesn't crash, without stripping out the *essential* data the engineer needs.

If the headset simplifies the model too much, and the engineer misses a clash between a pipe and a beam because the software "optimized" it away, the XR tool becomes a liability, not an asset.
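
That failure mode fits in a few lines of code. The sketch assumes a hypothetical element schema (id, category, axis-aligned bounding box) and a naive size threshold. Real BIM pipelines commonly work with formats such as IFC and proper geometry, and this element-level filter is a much cruder cousin of mesh decimation. Still, it shows why "strip the small things" is unsafe unless clash-relevant categories are protected.

```python
from dataclasses import dataclass
from typing import FrozenSet, List, Sequence, Tuple

Vec3 = Tuple[float, float, float]


@dataclass(frozen=True)
class Element:
    element_id: str
    category: str  # hypothetical labels, e.g. "beam", "pipe", "screw"
    bbox_min: Vec3  # axis-aligned bounding box corners, in metres
    bbox_max: Vec3


# Hypothetical categories that must survive simplification because they can clash.
PROTECTED: FrozenSet[str] = frozenset({"beam", "column", "pipe", "duct"})


def volume(e: Element) -> float:
    dx, dy, dz = (hi - lo for lo, hi in zip(e.bbox_min, e.bbox_max))
    return dx * dy * dz


def decimate(elements: Sequence[Element], min_volume_m3: float,
             protected: FrozenSet[str] = PROTECTED) -> List[Element]:
    """Drop small elements unless their category is protected."""
    return [e for e in elements if e.category in protected or volume(e) >= min_volume_m3]


def overlaps(a: Element, b: Element) -> bool:
    """True if the two bounding boxes intersect on all three axes."""
    return all(a.bbox_min[i] < b.bbox_max[i] and b.bbox_min[i] < a.bbox_max[i]
               for i in range(3))


def clashes(elements: Sequence[Element]) -> List[Tuple[str, str]]:
    """Naive O(n^2) pairwise clash check on bounding boxes."""
    return [(a.element_id, b.element_id)
            for i, a in enumerate(elements)
            for b in elements[i + 1:]
            if overlaps(a, b)]


model = [
    Element("B1", "beam", (0.0, 0.0, 3.0), (6.0, 0.3, 3.4)),
    Element("P1", "pipe", (2.0, 0.1, 3.2), (4.0, 0.15, 3.25)),  # thin pipe through the beam
    Element("S1", "screw", (9.0, 9.0, 1.0), (9.01, 9.01, 1.02)),
]

if __name__ == "__main__":
    print(clashes(decimate(model, 0.01)))                         # pipe kept: clash found
    print(clashes(decimate(model, 0.01, protected=frozenset())))  # pipe "optimized" away
```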

---

Synthesis: The Mature Phase

So, what does this week's weird mix of 1992 philosophy and 2022 taxonomy tell us about 2026?

It tells us that XR is leaving its adolescence.

In your teenage years, you experiment. You try things that are dangerous and flashy. You don't care about "workload" or "taxonomy." You just want to go fast.

Now, the industry is in its 20s. It’s got a job. It needs to pay rent. It needs to be reliable.

- **We are respecting the user:** We are acknowledging that "Workload" is real and that we can't just bombard the brain with sensory input (Xi et al.).

- **We are respecting the definitions:** We are creating rigid taxonomies so we can build interoperable systems (Park & Kim; Rauschnabel).

- **We are respecting the risks:** We are treating privacy not as an afterthought, but as a fundamental architectural requirement (Wang et al.).

- **We are respecting the roots:** We are acknowledging that Steuer (1992) was right about telepresence all along.

The Horizon

As we move through January 2026, I expect to see this trend continue. We aren't going to see as many "breakthrough" announcements. Instead, we are going to see "integration" announcements.

We are going to see better standards for biometric privacy. We are going to see "Ergonomic Certified" VR workflows that promise not to fry your dopamine receptors.

We are building the boring, safe, reliable infrastructure that makes the Metaverse possible. It’s not as exciting as The Matrix. But unlike The Matrix, this version might actually work for everyone.

And honestly? As someone who reads specs for fun, I think that's pretty exciting.

Until next time,

**Fumi**

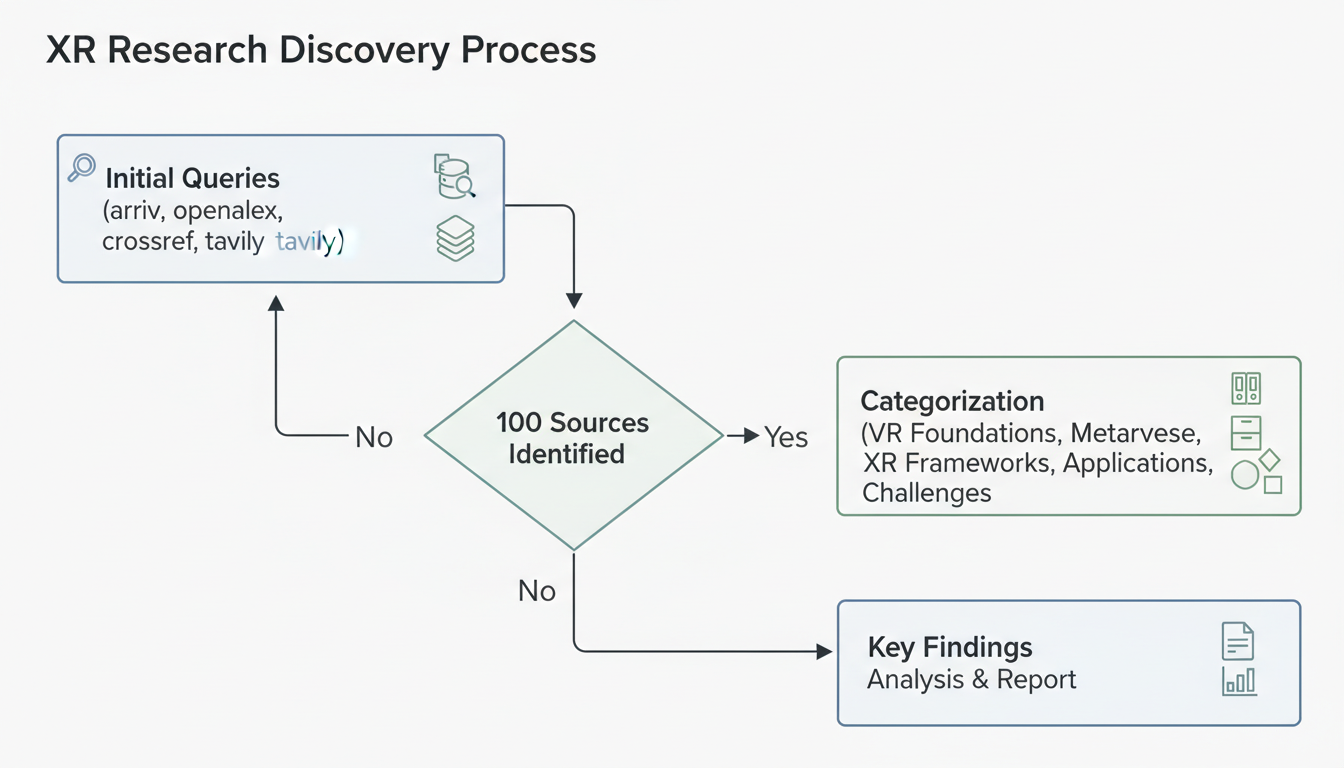

Source Research Report

This article is based on Fumi's research report, "Last Week's Research: Extended Reality." You can read the full report for more details, citations, and sources.

📥 Download Research Report (Markdown)