The Competence Engine: Why XR Stopped Trying to Be 'The Matrix' and Started Teaching Us Surgery

Hello again. Fumi here.

If you’ve been following my recent deep dives, you know I’ve been tracking a specific shift in the Extended Reality (XR) space. A few weeks ago, in *"The Utility Era,"* I argued that we were moving away from the escapist fantasies of *Ready Player One* and toward something far more pragmatic: optimization. Then, in *"When The Digital World Touches Back,"* we looked at the desperate need for haptics—because visual immersion without tactile feedback is just a high-resolution ghost story.

I’ve spent the last week reading through 58 new papers, and I have to tell you: the plot has thickened.

We are no longer just talking about "utility" in the abstract. The research has moved past the point of asking *"Can we use VR for work?"* and is now asking a much more aggressive question: *"Can we use XR to fundamentally upgrade human competence?"*

The answer, according to the latest data from labs ranging from medical universities to network engineering firms, is a cautious but emphatic yes. But there is a twist. The secret ingredient isn't better screens or lighter headsets. It’s the invisible integration of Artificial Intelligence (AI) and Machine Learning (ML) into the very fabric of these virtual environments.

We aren't just building a Metaverse anymore. We are building a **Competence Engine**.

Let’s walk through the specs.

1. The Myth of "Total VR" and the Reality of Layers

Let's start with a bit of philosophy before we get into the heavy technical lifting. There’s a paper from the recent batch simply titled *"The Myth of Total VR"* (Unknown, 2021). It touches on a sentiment that has been bubbling up in the research for months: the idea that the ultimate goal of XR is a complete replacement of reality—a "Total VR" where we live, breathe, and exist entirely in synthesized space—is not only technically distant but conceptually flawed.

For a long time, the metric for success in VR was "Presence"—the feeling of *being there*. But the new metric emerging from the literature is "Efficacy"—the ability to *do something there* that you couldn't do here.

The Shift from Immersion to Augmentation

When we look at the broader conceptual work (like the discussions by Kumar on defining the Metaverse), we see a pivot. We aren't trying to trick the brain into thinking it's on Mars anymore. We are trying to give the brain tools it doesn't naturally possess.

Think of it this way: The "Total VR" myth assumes the value is in the *world*. The new research assumes the value is in the *interface*.

This distinction matters because it changes what researchers are building. They aren't obsessing over photorealistic leaves on a virtual tree; they are obsessing over low-latency overlays that guide a surgeon's scalpel or a student's understanding of physics. The destination isn't a place; it's a capability.

2. The Killer App is... Human Repair? (Healthcare Deep Dive)

If you want to see where the real money and brainpower are going, don't look at gaming. Look at the operating theater.

In my last report, I mentioned that healthcare was a growing sector. This week, it dominated the stack. A systematic review by **Sugimoto and Sueyoshi (2023)** regarding the *Holoeyes* system and a massive overview by **Dabass et al. (2025)** paint a picture of a field that has moved well beyond "experimental."

The Transparency Filter

Let’s look at the *Holoeyes* holographic image-guided surgery system. This is a prime example of what I call "The Transparency Filter."

Traditionally, surgery involves a lot of mental translation. A surgeon looks at a 2D CT scan or MRI on a wall monitor, creates a mental 3D model of the patient’s internal anatomy, maps that mental model onto the physical patient on the table, and then cuts. That mental translation is cognitively expensive. It takes focus, energy, and years of training to get right. And when you're tired, or the anatomy is weird, mistakes happen.

What systems like Holoeyes do is remove the mental translation step. By using XR to overlay the 3D internal structure directly onto the patient’s body, the surgeon effectively gains X-ray vision. They aren't looking at a screen; they are looking *through* the patient.
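To make the overlay idea concrete, here is a minimal sketch of the geometry involved, assuming a simple pinhole camera model and an already-known rigid registration between the scan's coordinate frame and the headset camera. The function names, the camera intrinsics, and the example numbers are all hypothetical. This is not how Holoeyes is implemented; it is only the textbook projection step that any such overlay needs in some form.

```python
from dataclasses import dataclass


@dataclass
class Intrinsics:
    """Pinhole camera parameters (hypothetical values, in pixels)."""
    fx: float
    fy: float
    cx: float
    cy: float


def scan_to_camera(point, rotation, translation):
    """Apply a rigid transform: camera_point = R @ scan_point + t."""
    return tuple(
        sum(rotation[row][col] * point[col] for col in range(3)) + translation[row]
        for row in range(3)
    )


def project(point_cam, k):
    """Project a camera-frame 3D point (meters) to pixel coordinates."""
    x, y, z = point_cam
    if z <= 0:
        raise ValueError("point is behind the camera")
    return (k.fx * x / z + k.cx, k.fy * y / z + k.cy)


# Assumed registration: scan frame aligned with camera axes, patient 0.5 m away.
IDENTITY = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0]]
OFFSET = (0.0, 0.0, 0.5)
CAMERA = Intrinsics(fx=800.0, fy=800.0, cx=640.0, cy=360.0)

# A point of interest 5 cm to the right of the scan origin.
vessel_in_scan = (0.05, 0.0, 0.0)
pixel = project(scan_to_camera(vessel_in_scan, IDENTITY, OFFSET), CAMERA)
print(pixel)
```

The hard part in practice is obtaining the rotation and translation, meaning keeping the scan registered to a patient who breathes and shifts. The sketch simply assumes that problem is solved.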

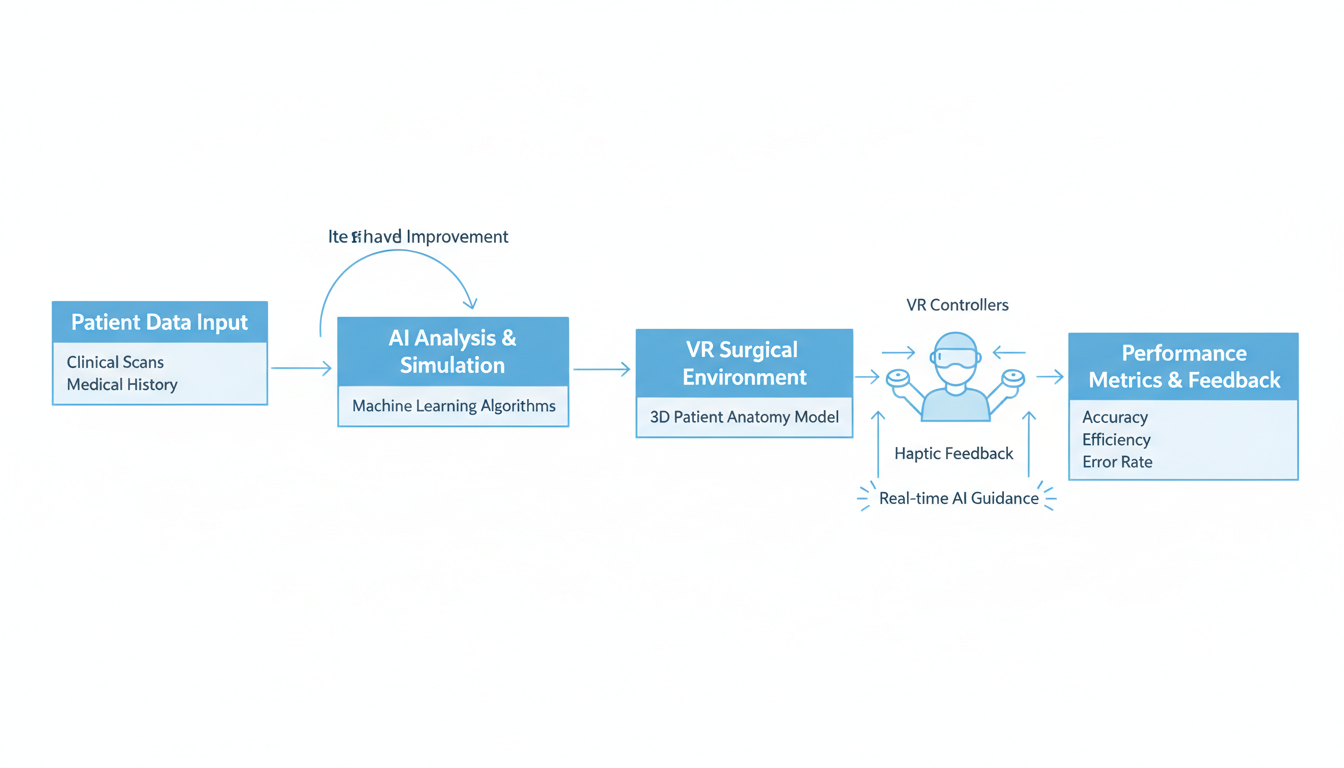

AI as the Surgical Assistant

But here is where the "Competence Engine" kicks in. It’s not just a display trick. The research by **Sugimoto and Sueyoshi** highlights the integration of Artificial Intelligence into this workflow.

The AI doesn't just render the image; it interprets the anatomy. It can segment organs, highlight blood vessels that might be hard to see, and potentially predict complications based on the geometry of a tumor.

This is a recurring theme in this week's batch: **AI is the force multiplier for XR.**

Without AI, AR surgery is just a fancy projector. *With* AI, it’s a dynamic navigation system that understands the terrain. The research suggests this combination leads to reduced operating times and, crucially, a lower cognitive load for the surgeon. We aren't just making the surgery faster; we are making the surgeon less tired.

The Telemedicine Angle

**Dabass et al. (2025)** take this a step further, looking at the "Internet of Medical Things" (IoMT). They envision a world where this XR data isn't just local. If you have a specialist in Tokyo and a patient in New York, the specialist can "step into" the operating room via VR, seeing exactly what the local surgeon sees, potentially even overlaying their own hands or tools into the local surgeon's field of view.

This isn't sci-fi anymore. The protocols are being written. The challenge now, as we'll discuss later, is making sure the network doesn't lag when someone is holding a scalpel.

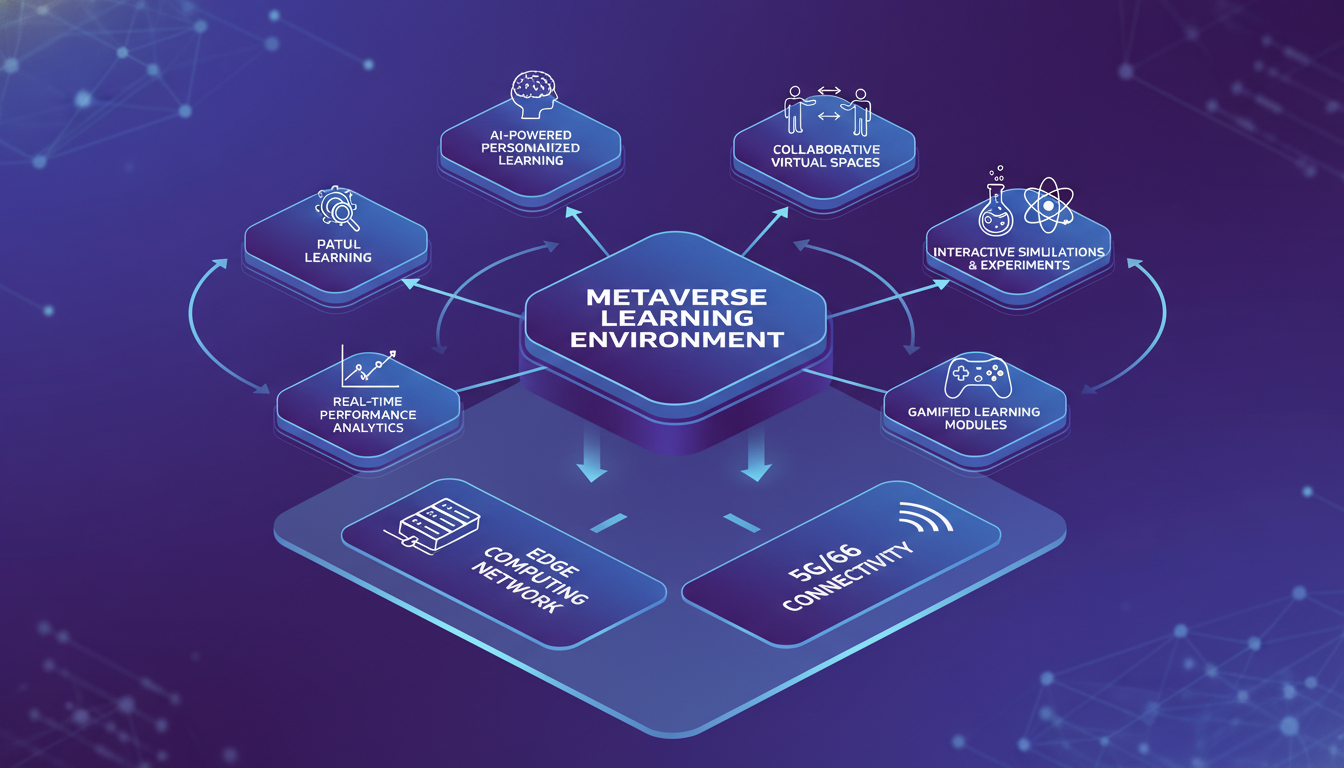

3. The Education Metaverse: Beyond "Zoom University"

We all remember the dark days of 2020 remote learning. The flat screens. The awkward silences. The "You're on mute." It was functional, but it wasn't *learning*. It was information transfer.

This week's most cited paper, **"Extended Reality and the Metaverse in Education" by Georgios Lampropoulos (2024)**, argues that we are on the verge of fixing this. But the fix isn't just "3D Zoom."

The Collaborative Layer

The key finding here is about **Collaborative Learning**. The research shows that XR's superpower in education isn't visualization (though that helps); it's *social presence* in a shared data space.

When students work together in a 2D breakout room, they are separated by the medium. When they work together in an immersive environment (the "Educational Metaverse," as **Yu** and others call it), they share a context. They can walk around a molecule together. They can pass a virtual engine part back and forth.

**Lampropoulos** points out that this spatial collaboration triggers different cognitive processes than screen-based collaboration. It mimics the "shoulder-to-shoulder" learning that happens in labs or workshops.

The Design Challenge

However, **Yu** raises a critical point about design. You can't just dump a PDF into a VR room and call it the Metaverse. The environment has to be designed for interaction.

The research indicates that "gamification" is becoming a serious pedagogical strategy. This isn't about making learning "fun" in a trivial way; it's about using game mechanics (progression, feedback loops, clear objectives) to structure the immersive experience.

If you recall my earlier post on the "Utility Era," this aligns perfectly. We aren't building schools in the Metaverse to replace physical schools; we are building simulation chambers to teach things that are too dangerous, expensive, or abstract to teach in a physical classroom.

4. The Feeling of Data: Electronic Skin and the Sensor Revolution

Now, let's get nerdy about the hardware.

In my previous post, *"When The Digital World Touches Back,"* I lamented the state of haptics. We can see everything, but we can feel almost nothing. Well, **Xu, Solomon, and Gao (2023)** just dropped a paper on **"Artificial intelligence-powered electronic skin"** that feels like a direct response to my complaint.

The Loop of Sensation

Usually, when we talk about XR haptics, we talk about output—gloves that vibrate when you touch a virtual wall. But Xu's research flips this. They are looking at electronic skin as a massive *input* device, powered by AI.

Here is the problem with traditional sensors: they are rigid, and they are dumb. They send raw data that needs heavy processing.

The "electronic skin" proposed here is flexible, stretchable, and—crucially—smart. It uses AI to decode the complex signals generated when the skin deforms or touches an object.
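As a rough illustration of what "decoding" means here, the sketch below classifies a raw multi-channel reading by comparing it with stored reference patterns. This is a nearest-centroid classifier, a deliberately simple stand-in for the learned models the paper discusses. The channel count, gesture labels, and readings are invented for the example.

```python
import math

# Hypothetical reference patterns: mean reading per sensor channel for each gesture.
CENTROIDS = {
    "light_touch": (0.2, 0.1, 0.1),
    "firm_press": (0.9, 0.8, 0.7),
    "stretch": (0.1, 0.6, 0.9),
}


def decode(reading):
    """Return the label whose reference pattern is closest to the reading."""
    return min(CENTROIDS, key=lambda label: math.dist(CENTROIDS[label], reading))


print(decode((0.85, 0.75, 0.8)))  # closest to the "firm_press" pattern
```

A real e-skin would have far more channels and much noisier signals, which is exactly why a learned model earns its place over a lookup like this one.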

Why This Matters for XR

Imagine a VR glove that doesn't just buzz, but can actually sense the nuance of your hand movement with the fidelity of your own skin. Or, conversely, a robot avatar that can "feel" the texture of a virtual object and transmit that data back to you.

This connects back to the **Sugimoto** surgery research and the telemedicine scenario from **Dabass**. If a surgeon is operating through a robot, they lose the sense of touch (haptic feedback). They can't feel how firm the tissue is. AI-powered electronic skin could bridge that gap, reading the pressure at the robot end and relaying it to the surgeon's hand.

This is the convergence I keep talking about. It’s not just "better sensors." It’s **Sensors + AI + XR**. The AI makes the noisy data from the skin usable in real-time.

5. The Boring Stuff That Makes It Work: Infrastructure at the Edge

I know, I know. You didn't come here to read about wireless network topology. But hear me out: **none of the cool stuff happens without this.**

We have a physics problem. Light travels fast, but data travels slower, especially when it has to go to a server farm in Virginia, get processed by an AI, and come back to your headset in London.

If the latency (delay) is too high, two things happen:

- The illusion breaks.

- You throw up. (VR sickness is still a major hurdle, as noted in historical reports).
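A back-of-the-envelope calculation shows why distance alone is a problem. The sketch below assumes light in optical fiber travels at roughly two-thirds of its vacuum speed, takes about 5,900 km as the London-to-Virginia distance, and compares the result with a 20 ms motion-to-photon budget. That budget is a commonly quoted rule of thumb, not a figure from the papers discussed here. The calculation ignores routing, queuing, and processing time, so real round trips are slower.

```python
SPEED_OF_LIGHT_KM_S = 299_792.458  # in vacuum
FIBER_FRACTION = 2 / 3             # assumed: typical slowdown inside glass fiber


def round_trip_ms(distance_km):
    """Best-case round-trip propagation delay over fiber, in milliseconds."""
    one_way_s = distance_km / (SPEED_OF_LIGHT_KM_S * FIBER_FRACTION)
    return 2 * one_way_s * 1000


BUDGET_MS = 20.0  # assumed motion-to-photon target (rule of thumb)

for label, km in [("cloud, ~5,900 km away", 5900), ("edge node, ~10 km away", 10)]:
    delay = round_trip_ms(km)
    verdict = "within" if delay < BUDGET_MS else "over"
    print(f"{label}: {delay:.2f} ms round trip ({verdict} budget)")
```

Under these assumptions the transatlantic round trip comes to roughly 59 ms before a single frame has been rendered, while the nearby node costs a fraction of a millisecond.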

Intelligence at the Edge

The paper by **Park et al. (2019)** on **"Wireless network intelligence at the edge"** is fundamental here.

"The Edge" refers to putting the computing power as close to the user as possible—literally in the cell tower down the street or the router in your room. The research argues that for XR to truly scale (especially the high-fidelity medical and educational stuff we just discussed), the AI cannot live in the cloud.

It has to live at the edge.

When you turn your head in VR, the system needs to render the new view in milliseconds. If it has to ask a cloud server for that image, you're going to experience lag. Park’s research explores using Deep Learning (a type of AI) to optimize these edge networks, predicting what data you're going to need *before* you need it.
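The "predict it before you need it" idea can be illustrated with something far simpler than deep learning. The sketch below uses linear extrapolation of head yaw, a stand-in that is explicitly not the method in Park's paper. It estimates where the user will be looking once a known latency has elapsed, so that view can be rendered or fetched ahead of time. All names and numbers are hypothetical.

```python
def predict_yaw(samples, latency_s):
    """Extrapolate yaw (degrees) forward by latency_s seconds.

    samples: list of (timestamp_s, yaw_deg) pairs, oldest first.
    Uses the angular velocity between the last two samples.
    """
    if len(samples) < 2:
        raise ValueError("need at least two samples to estimate velocity")
    (t0, yaw0), (t1, yaw1) = samples[-2], samples[-1]
    if t1 <= t0:
        raise ValueError("timestamps must be strictly increasing")
    velocity = (yaw1 - yaw0) / (t1 - t0)  # degrees per second
    return yaw1 + velocity * latency_s


# Head turning right at a steady 100 degrees/second, sampled 10 ms apart.
history = [(0.00, 10.0), (0.01, 11.0)]
print(predict_yaw(history, latency_s=0.05))
```

Straight-line extrapolation falls apart as soon as the head decelerates or reverses, and this version does not handle wrap-around at 360 degrees. Those weaknesses are the argument for a learned predictor.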

This is the plumbing of the Metaverse. It’s not sexy, but it’s the difference between a glitchy demo and a life-saving surgical tool.

6. The Content Bottleneck: 6-DOF for Everyone

Finally, let's talk about content.

One of the biggest barriers to XR adoption is that making 3D content is *hard*. You usually need a team of developers, 3D modelers, and expensive rigs.

**Huang et al.** published research on generating **6-Degrees of Freedom (6-DOF) VR videos** from a single 360-degree camera.

Why 3-DOF vs. 6-DOF Matters

Most 360 videos you see on YouTube are **3-DOF**. You can look up, down, left, and right (rotation), but you can't *move*. If you lean forward, the world moves with you. It feels like your head is stuck in a fishbowl.

**6-DOF** allows you to move. You can lean in to inspect a detail. You can step to the side to look around an object. This is critical for immersion and creates a much stronger sense of reality.
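The difference is easy to see in a toy model. In the sketch below (a simplified top-down 2D view with hypothetical names), a 3-DOF viewer discards the head's position and keeps only its rotation, while a 6-DOF viewer uses both. Stepping sideways changes the bearing to a nearby object only in the 6-DOF case.

```python
import math


def bearing_deg(head_xz, head_yaw_deg, obj_xz, track_position):
    """Angle of an object relative to where the head is facing (top-down view).

    track_position=False models 3-DOF: head translation is ignored.
    track_position=True models 6-DOF: translation shifts the viewpoint.
    """
    hx, hz = head_xz if track_position else (0.0, 0.0)
    dx, dz = obj_xz[0] - hx, obj_xz[1] - hz
    return math.degrees(math.atan2(dx, dz)) - head_yaw_deg


vase = (0.0, 1.0)      # one meter straight ahead
leaned = (0.5, 0.0)    # viewer steps half a meter to the right

print(bearing_deg(leaned, 0.0, vase, track_position=False))  # 3-DOF: vase stays dead ahead
print(bearing_deg(leaned, 0.0, vase, track_position=True))   # 6-DOF: vase swings to the left
```

A negative bearing means the object now sits to the left of center, which is what your eyes expect after a step to the right. The 3-DOF result never changes, and that is the fishbowl feeling.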

Usually, 6-DOF requires complex camera arrays and photogrammetry. Huang’s research uses—you guessed it—AI to infer depth from a single camera source.
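Here is why depth is the missing ingredient. A 360-degree frame tells you the direction of each pixel but not how far away it is, and without distance there is no way to work out how that pixel should shift when the viewer moves. The sketch below assumes a depth estimate is already available (from whatever model, not shown here) and re-expresses one pixel's direction from a displaced viewpoint. It is a generic top-down illustration with hypothetical names, not the pipeline from Huang's paper.

```python
import math


def reproject_yaw(yaw_deg, depth_m, viewer_shift_xz):
    """Direction of a pixel as seen after the viewer moves.

    yaw_deg: original direction of the pixel from the camera (0 = straight ahead).
    depth_m: estimated distance to the surface seen in that pixel.
    viewer_shift_xz: (right, forward) displacement of the viewer in meters.
    """
    # Lift the pixel to a point in the horizontal plane using its depth.
    x = depth_m * math.sin(math.radians(yaw_deg))
    z = depth_m * math.cos(math.radians(yaw_deg))
    # Express the same point relative to the new viewer position.
    dx, dz = x - viewer_shift_xz[0], z - viewer_shift_xz[1]
    return math.degrees(math.atan2(dx, dz))


step_right = (0.3, 0.0)
near = reproject_yaw(0.0, depth_m=1.0, viewer_shift_xz=step_right)
far = reproject_yaw(0.0, depth_m=20.0, viewer_shift_xz=step_right)
print(f"near surface shifts {abs(near):.1f} degrees, far surface {abs(far):.1f} degrees")
```

Nearby surfaces shift a lot and distant ones barely move, so any error in the estimated depth shows up most visibly on the objects closest to the viewer.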

This democratizes the creation of high-end VR. If a teacher can take a cheap 360 camera into a forest, record a walk, and then have an AI convert that into a fully walkable 6-DOF environment for their students, the content library for the Educational Metaverse explodes overnight.

Synthesis: The Competence Engine

So, let’s tie this all together. What is the story of this week's research?

- **The Goal has shifted:** From escaping reality (Total VR) to mastering specific, high-value tasks (Education/Surgery).

- **The Enabler is AI:** It’s decoding the sensors (E-Skin), guiding the hands (Surgery), and optimizing the network (Edge Computing).

- **The Barrier is Physics:** We are fighting latency and bandwidth, requiring a total rethink of network infrastructure.

We are building a machine that makes us better at being human.

When I look at the work by **Lampropoulos** on collaborative learning, or **Sugimoto** on holographic surgery, I don't see a dystopian digital cage. I see a set of training wheels for the human mind. We are using these systems to visualize the invisible, to practice the dangerous, and to feel the remote.

The Horizon: What's Missing?

However, being the rigorous nerd I am, I have to point out the gaps.

While the *technology* is maturing, the *human protocols* are lagging.

- **Standardization:** As noted in the executive summary, we still don't have a common language for these systems. Can a Holoeyes surgical model be loaded into one of the educational classrooms Lampropoulos describes? Probably not yet.

- **Ethics & Privacy:** With "Electronic Skin" and eye-tracking, the amount of biometric data these systems collect is terrifying. We are building the ultimate surveillance apparatus in the name of convenience and health. The research on the *ethics* of this is sparse compared to the research on the *tech*.

- **Long-term Impact:** We know XR helps students learn *now*. But what does 10 years of learning in the Metaverse do to a developing brain's ability to navigate the physical world? We simply don't have the longitudinal data yet.

Final Thought

We used to ask, "Is it real?"

Now, the research is asking, "Does it work?"

And for the first time in a long time, the answer is looking like a solid, peer-reviewed "Yes."

I’ll be back next week to see if the network engineers have figured out how to beat the speed of light. Until then, stay grounded.

— Fumi

Source Research Report

This article is based on Fumi's research into Last Week's Research: Extended Reality. You can read the full research report for more details, citations, and sources.
