Alchemy at Light Speed: How AI is Rewriting the Rules of Stuff

Hi friends, Fumi here.

Let’s start with a confession: I have a deep, abiding respect for the physical world, but I am notoriously impatient with it.

If you write code, you know the instant gratification of hitting "compile" and seeing your logic come to life (or crash spectacularly). But if you work with *atoms*? That is a different kind of grind. Thomas Edison famously failed thousands of times to find a filament for the lightbulb. The journey from identifying a potential material for a lithium-ion battery to actually putting it in a laptop took decades.

Material science has historically been a game of intuition, luck, and brute-force exhaustion. It is the culinary art of the scientific world—mix a little of this, heat it to that, spin it around, and see if it explodes or conducts electricity.

But that is changing. And I don’t mean "changing" in the gradual, incremental sense. I mean changing in the "we just invented the warp drive" sense.

I’ve been diving into a massive stack of research from late 2023 (about 100 sources' worth of data) analyzing how Artificial Intelligence and Machine Learning (ML) are impacting material science. What I found isn't just a story about faster computers. It's a story about a fundamental shift in how humans interact with matter itself.

So, grab a coffee (in a ceramic mug that probably took 50 years to optimize), and let’s talk about the new age of alchemy.

The Fundamental Problem: The Tyranny of Choice

To understand why AI is such a big deal here, you first have to understand the sheer scale of the problem material scientists are facing.

Imagine you are looking for a needle in a haystack. Now imagine the haystack is the size of the known universe, and the needle might actually be a slightly different kind of hay that looks exactly like the other hay but conducts electricity 10% better.

This is what we call the **Chemical Space**.

The periodic table gives us our alphabet. But the number of possible combinations of those elements—the words and sentences we can build—is effectively infinite. For a long time, we navigated this space using the "Edisonian" method: trial and error. You have a hunch, you mix the chemicals, you test the result. It is slow, it is expensive, and frankly, it is inefficient.

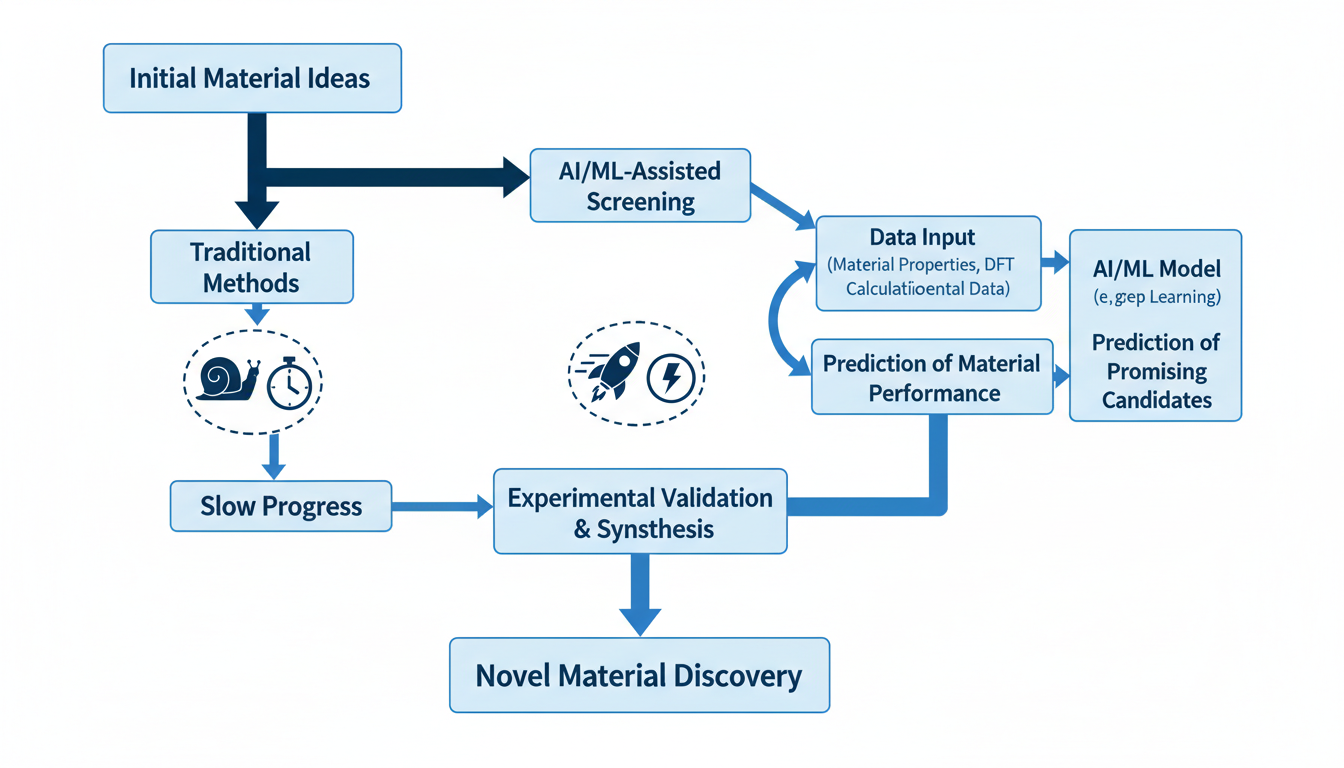

The Shift to Data-Driven Paradigms

The research shows a definitive move away from this intuition-based approach toward **data-driven paradigms**. We aren't just guessing anymore; we are mapping.

Instead of a scientist saying, "I bet adding a little niobium would make this steel stronger," we have algorithms scanning millions of theoretical compounds to say, "Statistically, this specific arrangement of atoms has an 87% probability of high tensile strength."

This isn't just about speed; it's about direction. As noted in a foundational review by Juan et al. (2020), this shift lets us explore regions of chemical space where human intuition would simply never think to look. We are moving from a map drawn by hand to a satellite GPS system.

---

Beat 1: The Engine of Discovery

So, how do we actually find these new materials? The research highlights two major AI architectures leading the charge: **Genetic Algorithms** and **Deep Learning**.

Evolution in a Box: Genetic Algorithms

I love genetic algorithms because they are biologically inspired, and there is something poetic about using the logic of life to design stone and metal.

Here is how it works: You generate a population of random material candidates. Then, you apply a "fitness function"—a set of criteria, like stability or conductivity. The bad candidates are discarded. The good ones? They get to "breed."

In this context, breeding means taking the mathematical representation of two materials and swapping parts of their structures (crossover) or introducing random tweaks (mutation). You run this over and over, generation after generation.
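
To make that loop concrete, here is a minimal sketch in Python. Everything specific in it is an assumption for illustration: a "material" is just three composition fractions that sum to 1, `TARGET` is a made-up ideal composition, and `fitness` is a toy stand-in for a real stability or conductivity calculation.

```python
import random

TARGET = [0.2, 0.5, 0.3]  # hypothetical "ideal" composition fractions


def fitness(candidate):
    # Toy fitness: higher is better, 0 means a perfect match with TARGET.
    return -sum((c - t) ** 2 for c, t in zip(candidate, TARGET))


def normalize(vec):
    # Keep fractions summing to 1 so every candidate is a valid composition.
    total = sum(vec)
    return [v / total for v in vec]


def random_candidate(rng):
    return normalize([rng.random() + 1e-9 for _ in TARGET])


def crossover(a, b, rng):
    # "Breeding": each fraction is inherited from one parent or the other.
    return normalize([x if rng.random() < 0.5 else y for x, y in zip(a, b)])


def mutate(candidate, rng, scale=0.05):
    # Random tweak to every fraction, clipped so nothing goes negative.
    return normalize([max(1e-9, c + rng.gauss(0, scale)) for c in candidate])


def evolve(generations=40, pop_size=30, keep=10, seed=0):
    rng = random.Random(seed)
    population = [random_candidate(rng) for _ in range(pop_size)]
    for _ in range(generations):
        # Selection: discard everything except the fittest candidates.
        population.sort(key=fitness, reverse=True)
        survivors = population[:keep]
        children = []
        while len(survivors) + len(children) < pop_size:
            a, b = rng.sample(survivors, 2)
            children.append(mutate(crossover(a, b, rng), rng))
        population = survivors + children
    return max(population, key=fitness)


best = evolve()
print([round(x, 3) for x in best], round(fitness(best), 5))
```

Keeping the survivors unchanged from one generation to the next means the best candidate found so far is never lost, which is one common design choice rather than the only one.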

According to Jennings et al. (2019), combining genetic algorithms with machine learning creates a hyper-efficient loop. The machine learning model learns to predict which "offspring" are likely to be fit before you even run the expensive simulations. It’s like having a breeder who knows which racehorse will be a champion just by looking at the parents' DNA, skipping the need to actually race them first.
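
Here is a rough sketch of that pre-screening idea. To be clear, this is not the method from Jennings et al.: I am swapping in a simple k-nearest-neighbour average as the "machine learning model," and `expensive_simulation` is a hypothetical toy function standing in for a real physics calculation. The point is only the shape of the loop: rank offspring with the cheap model, then spend the simulation budget on the top few.

```python
def expensive_simulation(x):
    # Hypothetical stand-in for a costly calculation; its peak is at (0.3, 0.7).
    return -((x[0] - 0.3) ** 2) - (x[1] - 0.7) ** 2


def squared_distance(a, b):
    return sum((p - q) ** 2 for p, q in zip(a, b))


def surrogate_predict(x, history, k=3):
    # Cheap guess: average the scores of the k most similar evaluated candidates.
    nearest = sorted(history, key=lambda rec: squared_distance(x, rec[0]))[:k]
    return sum(score for _, score in nearest) / len(nearest)


def prescreen(offspring, history, budget):
    # Rank offspring by predicted fitness and keep only what we can afford to simulate.
    ranked = sorted(offspring, key=lambda x: surrogate_predict(x, history), reverse=True)
    return ranked[:budget]


# Candidates we have already paid to simulate (a coarse 5 x 5 grid).
grid = [i / 4 for i in range(5)]
history = [((a, b), expensive_simulation((a, b))) for a in grid for b in grid]

offspring = [(0.9, 0.1), (0.3, 0.7), (0.1, 0.2), (0.6, 0.6)]
shortlist = prescreen(offspring, history, budget=2)

# Only the shortlist gets the expensive treatment, and the results feed the model.
for x in shortlist:
    history.append((x, expensive_simulation(x)))
print(shortlist)
```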

**The Implication:** This allows us to traverse that massive chemical space we talked about without checking every single corner. The algorithm converges on the "peaks" of high performance, ignoring the valleys of useless junk.

The Pattern Matcher: Deep Learning

On the other side, we have Deep Learning. If genetic algorithms are about evolution, deep learning is about intuition at scale.

Deep learning models, particularly when applied to high-dimensional chemical data, can identify complex, non-linear relationships between a material's structure and its function. Fang et al. (2022) highlight how these models are accelerating discovery by recognizing patterns that are invisible to the human eye—or even to traditional physics-based equations.

Think of it this way: A human chemist knows that structure A often leads to property B because they've seen it happen ten times. A deep learning model knows that structure A leads to property B—but only when condition C is met and element D is present—because it has "seen" it happen ten million times in the training data.

---

Beat 2: The Oracle (Property Prediction)

Discovering a candidate is only step one. Step two is usually the bottleneck: figuring out what that candidate actually *does*.

Traditionally, if you wanted to know if a new porous material could filter carbon dioxide, you had to synthesize it (which might take weeks), put it in a chamber, pump in gas, and measure the results.

But what if you could know the answer without building the material?

Moving Toward Experimental Accuracy

This is where **Property Prediction** comes in, and the accuracy numbers coming out of the 2023 research are startling.

Jha et al. (2022) published work showing that AI models are moving closer to "experimental level" prediction. This is a massive claim. It means the AI's guess is becoming as reliable as the physical test itself.

Let’s look at a specific example: **Metal-Organic Frameworks (MOFs)**.

MOFs are these incredible, sponge-like materials that are mostly empty space, held together by metal nodes and organic linkers. They are fantastic for storing gases or catalyzing reactions. But calculating their quantum-chemical properties is a nightmare because they are complex and huge (on a molecular scale).

Rosen et al. (2021) demonstrated that machine learning could accurately predict these quantum-chemical properties. By training on a dataset of known MOFs, the model could look at a theoretical MOF and predict its electronic properties instantly.

**Why this matters:** If you can predict properties instantly, you can screen a billion materials in a day. You only go to the lab to synthesize the top 0.01%. You stop wasting chemicals on duds.
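
The screening step itself is simple once you have a fast predictor. A minimal sketch, assuming a hypothetical `predict` function that returns a score where higher is better (here a toy lambda, not a trained model):

```python
import heapq


def screen(candidates, predict, top_fraction=0.0001):
    # Score every candidate with the fast model and keep the best slice (0.01% by default).
    candidates = list(candidates)
    n_keep = max(1, round(len(candidates) * top_fraction))
    return heapq.nlargest(n_keep, candidates, key=predict)


# Toy library: 10,000 integer "material IDs" with a made-up score peaking at ID 1234.
library = range(10_000)
shortlist = screen(library, predict=lambda material_id: -((material_id - 1234) ** 2))
print(shortlist)
```

Only the shortlist would go on to the lab; everything else never touches a beaker.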

The Trust Gap: Explainable AI (XAI)

However, there is a catch. (There’s always a catch).

Deep learning models are often "black boxes." You feed in a crystal structure, and it spits out "Conductivity: High." But *why*?

For a scientist, "because the computer said so" is not an acceptable answer. If the model is wrong, you waste months of lab time. If it's right but for the wrong reasons, you haven't learned any science.

This has led to a surge in **Explainable AI (XAI)**. Qayyum et al. (2023) explored this using Shapley values (a concept borrowed from game theory). Essentially, this approach breaks down the prediction to tell you *which* features drove the decision.

> "The model predicts high hardness *because* of the strong covalent bonding in this specific lattice direction and the presence of Tungsten atoms."
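
For the curious, here is what a Shapley calculation looks like on a tiny scale. This is a sketch under loud assumptions: the three feature names and the `toy_hardness` model are invented for illustration and are not taken from Qayyum et al. The code computes exact Shapley values by trying every subset of features, which is only practical for a handful of features; the cost grows exponentially, so real tools rely on approximations.

```python
from itertools import combinations
from math import factorial

FEATURES = ["covalent_bonding", "tungsten", "density"]  # hypothetical feature names


def toy_hardness(present):
    # Made-up model: a baseline plus contributions from whichever features are "switched on".
    score = 10.0
    if "covalent_bonding" in present:
        score += 4.0
    if "tungsten" in present:
        score += 2.0
    if {"covalent_bonding", "tungsten"} <= present:
        score += 2.0  # interaction bonus when both are present
    return score


def shapley_values(features, value):
    # Average each feature's marginal contribution over all subsets of the other features.
    n = len(features)
    result = {}
    for f in features:
        others = [g for g in features if g != f]
        total = 0.0
        for size in range(n):
            weight = factorial(size) * factorial(n - size - 1) / factorial(n)
            for subset in combinations(others, size):
                s = frozenset(subset)
                total += weight * (value(s | {f}) - value(s))
        result[f] = total
    return result


phi = shapley_values(FEATURES, toy_hardness)
for name, contribution in phi.items():
    print(f"{name}: {contribution:+.2f}")
```

Notice that the interaction bonus gets split evenly between the two features that create it, and the contributions add up to the gap between the full prediction and the baseline. That bookkeeping is what makes the explanation auditable.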

This turns the AI from an oracle into a colleague. It helps researchers understand the underlying physics, building trust in the system.

---

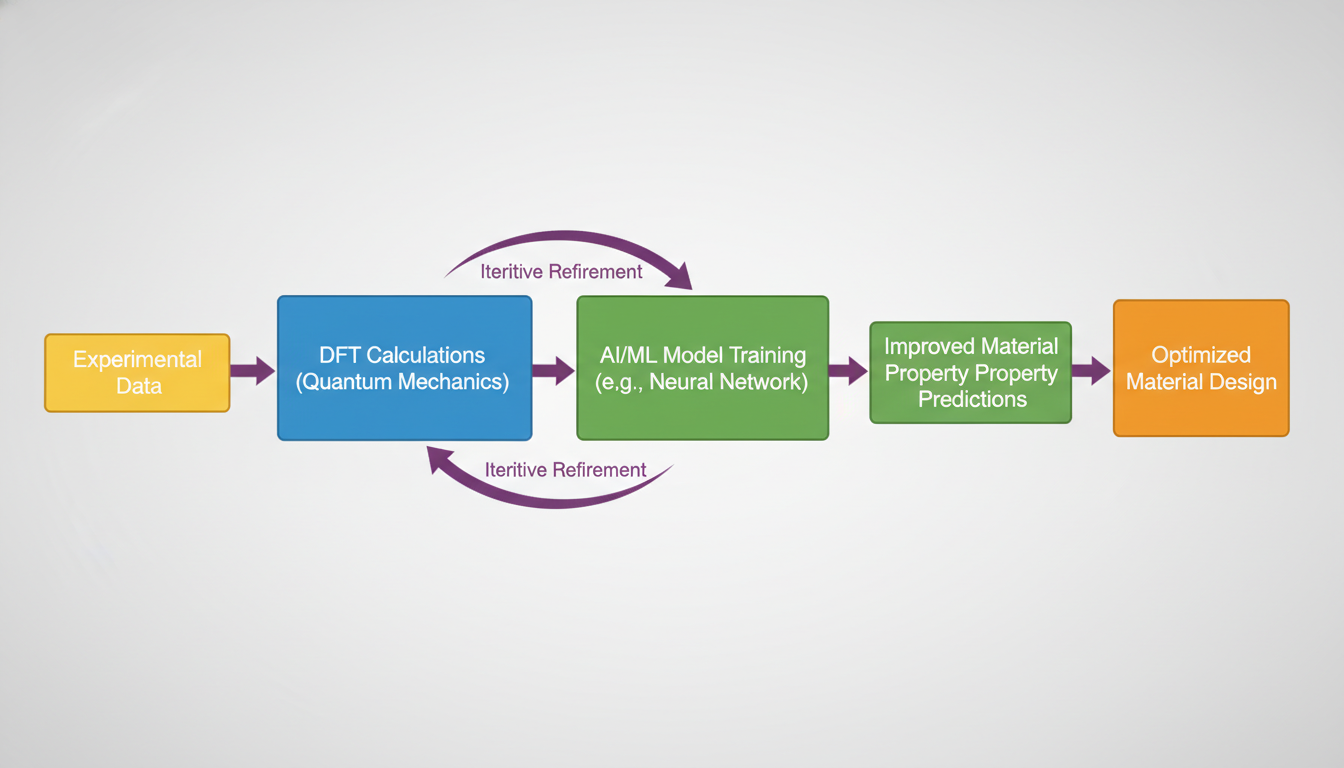

Beat 3: The Quantum Hack (Integration with DFT)

We need to get a little nerdy here (my favorite place to be) and talk about **Density Functional Theory (DFT)**.

DFT is the workhorse of modern computational chemistry. It’s a method for calculating the electronic structure of atoms, molecules, and solids: basically a practical way of approximating the solution to the Schrödinger equation, working from the electron density, to understand how electrons behave. It is accurate enough to serve as the reference for much of the field. It is also incredibly slow. A single calculation on a complex system can take days on a supercomputer.

One of the most exciting trends in the 2023 reports is the marriage of DFT and AI.

The Shortcut

Researchers like Duan et al. (2021) and Schleder et al. (2019) describe a hybrid approach. Instead of using DFT to calculate *everything*, you use DFT to calculate a small, high-quality dataset. You then train an ML model on that data.

The ML model becomes a "surrogate." It learns the rules of quantum mechanics (or at least, the patterns of the results) and can then predict the DFT output for new materials in milliseconds rather than days.

It’s like learning to drive. When you first start (DFT), you are hyper-aware of every variable—speed, angle, friction, pedal pressure. It’s mentally exhausting and slow. Once you’re experienced (ML), you just *drive*. You have internalized the physics.

This integration allows scientists to "put DFT to the test" at a scale that was previously impossible, screening vast libraries of materials with near-DFT accuracy but at ML speeds.
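
The surrogate workflow can be sketched in a few lines, with heavy caveats. Nothing below is real DFT or a real ML potential: `slow_reference_energy` is a Morse-style curve with made-up parameters standing in for an expensive calculation, and the "model" is a simple Nadaraya-Watson kernel smoother rather than a neural network. What it shows is the pattern: pay for a small set of reference calculations once, then answer new queries from the cheap model.

```python
import math


def slow_reference_energy(r):
    # Stand-in for one expensive DFT run: energy vs. bond length (hypothetical parameters).
    depth, stiffness, r_eq = 1.0, 1.5, 1.2
    return depth * (1.0 - math.exp(-stiffness * (r - r_eq))) ** 2 - depth


def train_surrogate(points, bandwidth=0.08):
    # "Training" here just means running the slow method once per training point.
    data = [(r, slow_reference_energy(r)) for r in points]

    def predict(r):
        # Gaussian-weighted average of the stored reference energies.
        weights = [math.exp(-((r - ri) ** 2) / (2.0 * bandwidth ** 2)) for ri, _ in data]
        return sum(w * e for w, (_, e) in zip(weights, data)) / sum(weights)

    return predict


# 25 reference calculations spread over bond lengths from 0.8 to 3.0.
training_points = [0.8 + i * (3.0 - 0.8) / 24 for i in range(25)]
surrogate = train_surrogate(training_points)

for r in (1.37, 1.8, 2.2):
    print(r, round(surrogate(r), 4), round(slow_reference_energy(r), 4))
```

A surrogate like this is only trustworthy inside the range it was trained on, which is one reason the quality and coverage of the reference dataset matter so much.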

---

Beat 4: Eyes on the Prize (Advanced Characterization)

So far, we’ve talked about designing and predicting. But what about when we actually have the physical stuff in front of us?

Characterization—figuring out the structure and properties of a physical sample—is undergoing its own revolution.

The Deep Learning Microscope

Modern microscopes (like electron microscopes) generate terabytes of image data. Analyzing this manually is like trying to count the grains of sand on a beach.

Deep learning is stepping in to automate this. Jung et al. (2021) demonstrated "Super-resolution" imaging. This is straight out of a spy movie "enhance!" scene. They use deep learning to take lower-resolution microstructure images and upscale them, filling in the missing details based on learned patterns of material physics. This allows for detailed analysis of mechanical behaviors without needing impossibly expensive high-res scans for every millimeter of material.

Hunting 2D Materials

Consider the field of **2D materials**—materials that are only one atom thick, like graphene. These are notoriously difficult to see and characterize.

Han et al. (2020) showcased a deep-learning method for the "fast optical identification" of these materials. The AI looks at optical data (how light reflects off the sample) and can instantly identify the material, its thickness, and its quality.

Similarly, Meng et al. (2024) reviewed how deep learning is being used to characterize, predict, and design these 2D materials, effectively creating a feedback loop where the characterization data feeds directly back into the design models.

**The takeaway:** AI isn't just thinking about materials; it's *seeing* them, better and faster than we can.

---

Beat 5: The Robot Chemist (Autonomous Labs)

Here is where it gets sci-fi.

If you combine the AI that discovers materials, the AI that predicts properties, and the robotics that can handle liquids and powders, you get the **Autonomous Laboratory**.

Szymanski et al. (2023) published a paper in *Nature* describing an autonomous laboratory for the accelerated synthesis of novel materials. This is arguably the most transformative finding in the entire report.

Closing the Loop

In a traditional lab, a human reads a paper, decides on an experiment, mixes the chemicals, waits, tests the result, analyzes the data, and then sleeps.

In an autonomous lab (often called a "Self-Driving Lab"), the AI:

- **Designs** a recipe based on its training.

- **Instructs** a robotic arm to mix the precursors.

- **Synthesizes** the material (heating, cooling, etc.).

- **Characterizes** the result (X-ray diffraction, conductivity tests).

- **Learns** from the result (success or failure) to update its own internal model.

- **Repeats**.

It does this 24 hours a day, 7 days a week. It doesn't get tired. It doesn't spill coffee on the notes. It doesn't have biases about which experiment "should" work.

This "closed-loop" system represents the pinnacle of the trends identified in the research. It is the convergence of automation, robotics, and high-dimensional AI.
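
The design, synthesize, characterize, learn cycle can be sketched as a toy simulation. This is not how the lab in Szymanski et al. works; it is a deliberately simple stand-in. The assumptions: the only recipe knob is furnace temperature, `run_experiment` is a hypothetical noise-free yield curve with a single peak, and "learning" is a ternary search that shrinks the temperature window after each pair of experiments. Real systems deal with noisy measurements and far richer models.

```python
import math


def run_experiment(temperature_c):
    # Stand-in for robotic synthesis plus characterization (made-up yield curve, peak at 650 C).
    return math.exp(-((temperature_c - 650.0) / 150.0) ** 2)


def closed_loop(low=300.0, high=1000.0, rounds=20):
    log = []  # every (temperature, yield) pair is kept, including the poor results
    for _ in range(rounds):
        # Design: propose two recipes inside the current search window.
        t1 = low + (high - low) / 3.0
        t2 = high - (high - low) / 3.0
        # Instruct, synthesize, characterize (all simulated here).
        y1, y2 = run_experiment(t1), run_experiment(t2)
        log.extend([(t1, y1), (t2, y2)])
        # Learn: drop the third of the window that cannot contain the peak.
        if y1 < y2:
            low = t1
        else:
            high = t2
        # Repeat with the narrower window.
    best_t, best_y = max(log, key=lambda rec: rec[1])
    return best_t, best_y, log


best_t, best_y, log = closed_loop()
print(f"best temperature ~ {best_t:.1f} C after {len(log)} experiments")
```

Note that the log keeps the failures too. In this toy version they are what tells the loop which part of the window to throw away.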

---

The Horizon: What We Still Don't Know

If you’ve read this far, you might think the problem is solved and we can all go home. Not quite. As a communicator of technology, I have to be honest about the friction points. The research identifies several critical gaps that are currently holding us back.

1. The Data Bottleneck

Algorithms are hungry. They need massive amounts of *high-quality* data to learn. But in material science, data is often locked away in PDFs, scattered across different formats, or simply not recorded (negative results—experiments that failed—are almost never published, even though they are crucial for AI to learn what *not* to do).

The report highlights the desperate need for **Data Infrastructure and Standardization**. We need the "GitHub of Materials Data"—clean, labeled, and accessible.

2. The Generalizability Problem

Right now, we are good at building specialist AIs. We have an AI that is a genius at zeolites (porous rocks) but has no idea what a polymer is.

Creating **Generalizable Models**—AI that understands the fundamental physics of *atoms* well enough to work across different classes of materials—is the next grand challenge. We are seeing early steps, but we aren't there yet.

3. Ethical and Practical Constraints

As we automate discovery, we have to ask: Who owns a material discovered by an AI? How do we ensure these tools are accessible to researchers globally, not just elite institutions? And crucially, as we rely more on "black boxes," how do we maintain scientific rigor?

---

The Verdict: From Cooks to Architects

So, what does this all mean for the humans?

Are the chemists out of a job? I don't think so. But the job description is being rewritten.

We are moving from being the cooks in the kitchen—chopping vegetables and watching the pot boil—to being the architects of the menu. The AI can handle the chopping, the mixing, and even the tasting. Our job is to define the goal.

*"Find me a material that captures carbon, withstands 500 degrees, and costs less than $10 per kilo."*

The AI navigates the infinite chemical space to bring us candidates. We validate, we refine, and we deploy.

The research from late 2023 paints a picture of a field in rapid transition. The integration of genetic algorithms, deep learning, DFT, and robotics is compressing timelines that used to be measured in decades into months or even weeks.

We are standing at the edge of a material revolution. And personally? I can’t wait to see what we build with it.

Until next time,

**Fumi**

Source Research Report

This article is based on Fumi's research into Impacts of AI on Material Science. You can read the full research report for more details, citations, and sources.
